Technology and security enthusiast Andrew ‘Zoz’ Brooks delivers a fascinating DEF CON presentation about proper OPSEC and other guidelines to stay safe online.

I didn’t know that disobedience was going to be the theme of DEF CON 22 and I submitted this talk. So I guess I didn’t fuck it up. Disobedience is what makes hackers who we are: using things in ways that were never intended or allowed. Sometimes, to show there’s a better way to do things, you need to break some rules. And a big way is violating unjust laws, civil disobedience.

I was partly inspired to give this talk because some of the biggest practitioners of criminal disobedience in this country today are the secret police. Unrepentant career criminals like Michael Hayden who presided over the Bush warrantless wiretapping, and James Clapper who lied to Congress repeatedly, disregard and weasel around the law when it’s convenient for them to do so. They are telling us that the end justifies the means, so we can play that game too.

We have a duty to civil disobedience in cases where the law is plainly wrong. And turning the United States into a surveillance state in the name of fighting terrorism, which as a public health problem rivals the bubonic plague in this country, is worse than criminal – it’s stupid. So we can’t let the surveillance state stop us from doing what’s correct.

Going back to the crypto wars, DeCSS (see right-hand image). To protect big media from hackers playing their legally purchased DVDs on their Linux laptops, DVD decryption code was illegal. That’s a perfect example of a law that’s worse than criminal – it’s stupid, because it only hurts people who are legitimately using media. And of course hackers had a duty to put a stick up the ass of the people responsible for these laws, making illegal T-shirts, making illegal ties, illegal games of Minesweeper.

Of course, there are more or less trivial injustices to be disobedient to, from breaking some kind of bullshit EULA all the way to using technology to resist truly tyrannical and oppressive regimes out there, which people are doing right now. Murdering the man – there’s an app for that (see left-hand image). The point is that using technology to push boundaries is what people who come to these conferences are supposed to do. And on the other side of this, which I’ll return to in a little bit, is that you actually have no idea whether what you’re doing is legal or illegal in many cases. None other than the Congressional Research Service has stated that they don’t know the precise number of federal laws in effect in a region at a given time.

So, not even a good lawyer knows off the top of their head whether or not their client is doing something illegal. And then take into account that laws in this country and others are interpreted by historical precedents, and now it also matters when you are accused of doing something. So forget deliberate disobedience; people break the law all the time without knowing it, so you’ve got to be careful.

Here’s one of my favorite DEF CON examples (see right-hand image). This slide is only just an illustration, it’s an example of being disobedient for good. DEF CON is full of them. I think we can mostly agree that breaking into people’s bank accounts, bank accounts that are not your own, is illegal.

One of my favorite DEF CON moments was meeting the guy who hacked into the Nigerian scammers’ back-end database, owned their bank account and got a little old lady’s money back from them. I think this photo (see left-hand image), by the way, should say “Goatse Lovers”, that’s a missed opportunity.

Leaking things is another disobedient act that’s currently in vogue and I think helpful to society. And a lot of stuff that’s been leaked lately comes down to control of the Internet (see right-hand image). People with a lot more money and power than most of us in this room are trying to lock it down. And disobedience is part of resistance to that power and control. Locked down Internet, one without the freedom to share information regardless of one’s wealth or power or regardless of what that information is, is a fucked up Internet. And so we should refuse to be obedient to that.

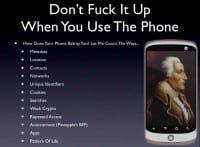

If you’re going to deliberately disobey, of course, there’s just one rule. And anyone who’s been at the Hacker Jeopardy knows what it is. So I want to get a shout out on the count of three. One, two, three – “Don’t Fuck It Up!” Thank you! So the other reason I was inspired to give this talk is because I’ve been obsessively reading every single Snowden leak that’s come out in the past year. Okay, that’s fine for me, I can feel smug, but I wanted to contribute some of my insights on that back to the community and be involved in the discussion here on that. So this talk is for everyone who hasn’t had the free time to go through all of those leaks and to really pore over this stuff. If everyone in this room knows everything that I’m going to say, I’ll be really happy. But probably that’s not the case. And especially, people don’t seem to be thinking about this stuff, because people who should know better keep fucking it up.

Remember this (see right-hand image), the good ol’ days, back when the Internet hadn’t yet transitioned to cat-based humor? Nowadays it’s more like on the Internet everyone knows you like ASCII Goatse. Google even suggests it (see image below).

I’m sorry, it’s just not a DEF CON talk without a Goatse. But seriously, the good ol’ days were never that good. I’m not even really old school, and we were packet-sniffing in the 90s. It’s been a quarter of a century of realizing that the trust assumptions that underlie the early Internet were completely wrong. And that attitude change, as slow as it has been, is a good thing. But you just shouldn’t listen to anyone who’s like: “Oh yeah, back in the day it was so much better.” It wasn’t that good, but it’s definitely worse now because now the business model of the entire Internet is stockpiling, monitoring and tracking your shit.

And the real game changer is the storage. This is the Bluffdale, Utah NSA data center (see right-hand image). Your shit out there is not just vulnerable temporarily when it’s being transmitted, but it’s stored to be mined later. Keith Alexander, when he came here at DEF CON 20, pissed me the fuck off because he came here and said: “Oh, you guys are so smart, come and work for me.” He thinks putting on jeans and a T-shirt is enough to convince us that he’s a good guy even though his agency is preventing people like us from becoming who we are, preventing the next generation of hackers. Think about it: if someone walked around in our community with a tape recorder, shoving it in your face all the time, recording everything you said, you would find it hard to accept that person as part of the community. You would probably stop talking to that person entirely. But that’s exactly what’s happening. We have to remember that’s what’s happening, even though we cannot see it in our faces.

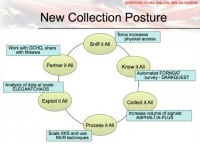

Collect it all, exploit it all, etc. And of course we always assumed and suspected they were doing some of this stuff. But thanks to our friend Snowden we now know they were doing what we long expected, and more. And you’ve got to remember the government doublespeak here, right? When they say “We don’t do this,” that means “We get our foreign partners to do this and they give it to us.” When they say “Oh, we don’t collect that under this program,” it means “Yes, we collect that under a different program.”

So it’s not just one particular TLA. There’s now a million ways to fuck it up, right? Not just in the moment but going back in time. So if anything you do makes you some kind of person of interest, they can go back and find other interesting stuff to pin on you, whether it’s for parallel construction so that they don’t have to admit how they know this stuff, or other reasons. And most of this is not the fault of technology. Think about the problems with technology, it has problems, we find bugs all the time, but the number of bugs is dwarfed by the number of errors that exist between the chair and the keyboard.

So when people say they’ve got nothing to hide – you’ve heard this a million times before – everyone’s got plenty to hide, because they are the source of many of those problems. Everyone has always had something to hide, either now or in the past. If people had nothing to hide, a lot more people would post status messages to ‘facialbook’ that said “Just jerking off.” For all the people in the audience, maybe a few that are feeling smug because they do that, you are morally consistent, but I would say, lacking in long-term planning skills.

People who were trained to do sketchy shit and not fuck it up, including organized crime and the feds – two groups to which there’s not an insignificant overlap – you’ll hear terms like “tradecraft” and “OPSEC”. Tradecraft means techniques and methods. And I’m going to throw up a few things here from my friends at the CIA. Even though I’ll make fun of them later, they spend a lot of time thinking about ways they cannot fuck it up. And the best place to go when you’re looking at CIA stuff, by the way, is analysis, not operations, because operations is where they really fuck it up. Analysis – they spend a lot of time thinking about this stuff.

One thing, if you go to the CIA Tradecraft Manual, which this is from (you can download it and read it) – there’s a big thing about evaluating biases in analysis (see image above). And this stuff is also really useful for operations. I’ll just go through a couple of these. Perceptual biases – seeing what we want to see only. I think you can think of some CIA examples about that. Biases in terms of evaluating evidence – for consistency, small samples are more consistent, they contain less information; only relying on available models when estimating probability; and then, problems with causality, for example, attributing events to fixed background context. All of these things transfer over to when we analyze our own operations when we are doing something bad.

There are also a number of activities that you can do to counteract biases, and this is where the interesting stuff happens. This (see right-hand image) is just a quick selection that has a good crossover from analysis to operations. Checking key assumptions at the beginning of the project or when the project changes. Checking the quality of information. Doing contrarian techniques like devil’s advocacy; high impact / low probability; and “what if?” analysis – how that happened; and then, of course, things that we’re all familiar with from pen-testing – red team analysis, opposing force or adversary analysis. Do these things on your operations and look for where they are applicable.

The other side of that is OPSEC. People say that a lot in this community. It stands for “operational security”, and it basically means preventing the leakage of information which could lead to discovery by, or advantage to, the other side. This World War II image sums it up. And, incidentally, on the topic of being old-school, I showed this picture to someone under the age of 25 and they said: “Why is Gandhi the enemy?” I can’t wait till all education comes from Wikipedia or IMDB…

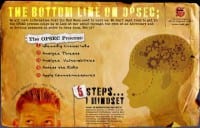

The government uses your tax dollars to produce literature to help you with OPSEC. You can go and check this stuff out. You need to understand what information is relevant: likely threats and vulnerabilities, risk assessment, and then applying countermeasures. And the point of this poster (see right-hand image) is that OPSEC doesn’t start and end with the operation itself, it covers all of your initial exploration and preparation, and then everything afterwards. So, what you really want to get into a mindset is you don’t even know you’re going to do it, and then you forget about it afterwards. OPSEC is a 24/7 job.

So, here is my variant of the 7 deadly sins, the 7 deadly fuckups (see left-hand image). What makes you a candidate for getting busted? Overconfidence – thinking “Oh, they’ll never find me, I’m using an anonymization tool,” so depending on a single tool or point of failure. Excessive trust – in surveillance days, for example in East Germany, 1 out of every 66 individuals was a government informant. What do you think that ratio is like in the hacking community? Emmanuel Goldstein’s estimate is 1 in 5. Probably that’s a high bound, but talk to Chelsea Manning, for example – I bet she is regretting the trust model in the community. Conviction that your guilt is minor, no one’s going to care: “Oh, no one’s going to care about what I’m doing, I’m just defacing a website,” for example. It’s all going in your permanent record.

Guilt by association – visiting the wrong chat room, coming to the wrong conference, being associated with the wrong people. Like the real estate people say: “Location, location, location,” – exposing where you’re coming from is always likely to fuck you up. It can expose you to many things besides just reverse exploitation, which the government has been doing. Of course, sending anything in the clear, not just personally identifiable information, but browser fingerprints, unique device IDs, locations you are or might be at in the future.

Keeping too much documentation about what’s going on – people who are really fighting the state and doing serious business know about this stuff. This is a quote from a Ukrainian separatist: “Home computers and personal cell phones should never be used for operational purposes; identifying documents should never be carried; details of military operations should never be discussed on phones or in front of family members.” You may even need to do things that you don’t like to do, like abstaining from alcohol.

Like sins, you are going to commit one of these, you are going to fuck one of these up. So use your tradecraft analysis to figure out how you can recover from making mistakes. One of the things that you can use to stop fucking things up is tools. But tools can also help you fuck it up. A computer is just the tool to help you fuck things up a billion times faster than you could do by yourself. The increased confidence, the sin of overconfidence – that’s the likelihood of fucking it up. Using a tool badly or stupidly can be worse than not using it at all. This is one of my favorite tool injury pictures (see right-hand image above). This is from the water jet cutter. It puts out a stream of compressed water, 15,000 psi, breaks the sound barrier, cuts through inch-thick steel, and everyone who walks in the room is like: “What would happen if I stuck my hand in that thing?”

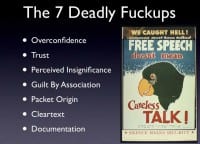

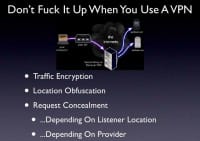

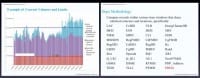

So, here’s the first tool, VPNs (see left-hand image). You are going to use an insecure network – are you safe? Two questions when it comes to tools: “Should I use it?” and “How should I use it?” What do you get from a VPN? You get some traffic encryption, but only between you and the VPN itself, not necessarily from the VPN to the remote location. You may get a little bit of location obfuscation to the remote server; they may not know exactly where you are. Maybe you get some request concealment to the ISP, between you and the VPN, not afterwards. So it really depends on where the listener is located. It’s a single-hop proxy, so anyone who is watching both ends, like a state agency, can do traffic correlation very easily. It also shifts the trust model over to the VPN provider. That’s a provider you probably have a financial relationship with; that could be traceable, depending on how you are paying for it. So think about those things.

VPN providers really vary on what they promise. Many of them say they don’t keep logs. You should know the logging policy, but it doesn’t tell you the whole story, especially if they are not located in the United States. Because they can start logging anytime they want to, for example when they receive a national security letter, which they are barred by law from telling you they’ve received. So, just because they don’t log now doesn’t mean they won’t in the future if you become interesting.

VPN clients vary on how well they hook you up. They can leak information depending on the client – I’ve seen this myself. So if you plan on hiding behind a VPN, then you’d better see what the client lets you expose. Connect to the VPN, run Wireshark (see right-hand image) or another packet sniffer on another computer and see what’s coming out of the computer that you are going to use for operations. Is everything going through the VPN or not? If this small amount of things is too much effort for you, then Internet scofflaw is probably not the job for you. You should work for the government instead.
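As a rough illustration of that check, here is a minimal Python sketch. It assumes you have already exported the destination IP addresses seen in your capture (from Wireshark or any other sniffer) into a plain list. The function name `find_leaks`, the VPN endpoint address and the LAN ranges are all hypothetical placeholders, not anything from the talk.

```python
from ipaddress import ip_address, ip_network

# Hypothetical values for illustration only; substitute what your own setup uses.
VPN_SERVER = ip_address("203.0.113.10")  # assumed VPN endpoint (documentation range)
LOCAL_NETS = [ip_network("192.168.0.0/16"), ip_network("10.0.0.0/8")]  # assumed LAN ranges


def find_leaks(destinations, vpn_server=VPN_SERVER, local_nets=LOCAL_NETS):
    """Return the destination IPs that bypass the VPN tunnel.

    `destinations` is a list of destination IP strings taken from a packet
    capture made while the VPN was supposed to be up.
    """
    leaks = []
    for dst in destinations:
        addr = ip_address(dst)
        if addr == vpn_server:
            continue  # traffic to the VPN endpoint itself is the tunnel: expected
        if any(addr in net for net in local_nets):
            # LAN traffic is skipped here, but DNS queries sent to a local
            # router would still be a leak; inspect those separately.
            continue
        leaks.append(dst)
    return leaks


if __name__ == "__main__":
    captured = ["203.0.113.10", "192.168.1.1", "8.8.8.8"]
    print(find_leaks(captured))
```

Anything the function returns is a packet that left the machine for a public address other than the VPN endpoint, which is exactly what you do not want to see.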

Here’s a simple task for the lazy. Open up an SSH connection and then fire up your VPN and see if it drops. If it stays open, then stuff is still being leaked; existing connections are allowed to go through and all kinds of things could be phoning out with your real IP. A lot of VPN clients are also shitty for mobile use. Every time you put your computers to sleep or move around, the tunnel goes down and you have to reconnect (see left-hand image). When that happens, every frickin’ app on your computer phones home and tries immediately to reconnect before the VPN reconnects, and exposes your IP. Mail clients, browsers with open tabs just try to reload them, browsers that are doing all kinds of javascripts in the background that are communicating – all your shit is exposed. If this applies to you, make sure all this stuff is shut down before you close the VPN.

So, even if it’s as simple as doing a ‘killall -STOP’, thinking about everything that could possibly phone home, stopping it before you close the VPN is a good habit to get into. Of course, habits are fragile, you’ll eventually fuck it up, so try to automate that process. Another thing on that subject – randomize your MAC address. It’s already been exposed that the Canadian government was, probably illegally, tracking people using airport WiFi by their MAC addresses, so they could see where people were moving around in various airports. So I like to randomize my MAC address as often as is not too inconvenient.
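For the MAC randomization part, a minimal sketch of generating a suitable address in Python might look like this. The function name `random_mac` is made up for illustration; actually applying the address to an interface is operating-system specific and is not shown.

```python
import secrets


def random_mac():
    """Generate a random, locally administered, unicast MAC address."""
    octets = bytearray(secrets.token_bytes(6))
    # In the first octet, set the "locally administered" bit (0x02) so the
    # address does not claim a real vendor prefix, and clear the multicast
    # bit (0x01) so it is a valid unicast source address.
    octets[0] = (octets[0] | 0x02) & 0xFE
    return ":".join(f"{b:02x}" for b in octets)


if __name__ == "__main__":
    print(random_mac())
```

Running something like this from whatever script brings your network interface up is one way to take the habit out of your hands, in the spirit of automating the process.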

So, should you use a VPN? What kind of a list does it get you on? VPNs have their uses and their flaws. If you are going up against the big guys and they are on both sides of the VPN, traffic correlation is trivial. Simply using a VPN also makes you look interesting. This is from the XKeyscore manual from 2008 (see right-hand image). You’ve got to also remember with XKeyscore this is not a real-time traffic processor; this is a database miner, it’s a set of filters for stored data. People often say to me: “You should be on a list.” Well, I use a VPN, when I travel especially, so I’m definitely on some sort of list.

This is from Pacific SIGDEV in March 2011 (see left-hand image). It also mentions ingesting and storing VPN data, once again, looking at identifying VPN use, and then finding out ways to get into those networks. It mentions a program called Birdwatcher; we know nothing more about this, but clearly it’s some sort of data mining program that perhaps could be put to use collecting VPN key exchanges for later cryptanalysis.

By the way, this is also from Pacific SIGDEV (see right-hand image). I’m really pleased to see in this presentation we got a category of our own right next to terrorists, criminal groups and foreign intelligence agencies, so we’re on The Big Time.

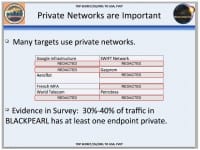

Here’s another NSA slide (see left-hand image). Blackpearl, a survey database from the taps on the undersea fiber-optic cables presumably providing high-level communication of things such as communications having a foreign endpoint, because technically they are only allowed to look at things with a foreign endpoint. This is the sort of legal weaseling. So, once again, using VPNs is something that can attract attention. This was reported as a tool for specifically targeting private networks, but that doesn’t seem to be the case. So, using a VPN puts you on a radar. Is that a reason not to use it? I don’t think so, because you might as well make things more difficult for them. But perhaps in certain cases you should be aware of it when you do your tradecraft analysis.

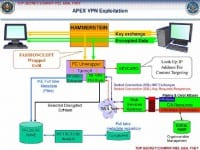

Here’s another one about intercepting and decrypting VPN traffic (see right-hand image). This is the Hammerstein slides referring to doing a man-in-the-middle attack on VPN traffic via compromised network routers with implants inserted. So these refer to selected decrypted content. The good news is that going through all this trouble probably means that it’s not all vulnerable. But at least some of it, no doubt, refers to crypto attacks on PPTP VPNs, known compromise since about 2012 – Moxie Marlinspike and David Hulton’s DEF CON 20 presentation and release of CloudCracker for PPTP.

So, a VPN is probably still worthwhile, but you’ve got to make sure it’s up to date. And don’t just rely on that one thing. One thing you can do if you’re truly paranoid is hop VPNs every few minutes. Some providers even offer this service within a single provider. Again, you’re depending on one provider, and you’re now generating really interesting traffic for the NSA. But against some listeners you’ve got some decorrelation noise in there – good for research like searches and port scans. But just don’t fuck it up believing that this one-hop proxy is going to be a magic all-in-one solution. And remember that it leaves a financial trail, and that can connect to your real identity unless you’re paying anonymously.

Let’s go multi-hop. Don’t fuck it up when you use Tor. Hopefully everyone here knows what Tor is and the main way you fuck it up when you use Tor, which is thinking that Tor encrypts your traffic by default. It doesn’t. Tor is for anonymization, not for encryption. The layers of encryption are just to protect the routing within the Onion, not to protect your base traffic you need to encrypt as well. Tor is very-very important, I think. There’s been a lot of talk recently about “Oh my God, is Tor broken?”, or “Is Tor a honeypot developed by spooks because they have federal funding?” I don’t think either of those things is true, but we are going to talk about some of that now because I think it’s really important that we do, because Tor is the main way, for example, dissidents get out and communicate out of oppressive regimes. It’s how researchers can look up suspicious information without themselves being targeted. It’s how ordinary people can search and communicate without being tracked and monitored. And it’s how all of you can do a search after DEF CON for catastrophic liver damage without raising your insurance premiums.

It pisses me off when people say that Tor is only for illegal acts. Don’t fuck up Tor by only using it when you’re doing sketchy shit. Pump a whole bunch of your normal traffic through it. Even if you are completely squeaky-clean and you are not doing anything wrong, still use Tor because that helps out everyone else. Also, the nature of Tor is for anonymity. It’s really tough to tell people that you’re using Tor for good. But if you can, if you’ve got a use that you can talk about – get it out there, tweet, hashtag something like “Tor for good”. The Tor devs will appreciate it.

But let’s talk a little bit about people who should know better, who fucked it up using Tor. We all remember this: Sabu, LulzSec and AntiSec (see right-hand image), 4chan anons trolling the web for SQL injection vulnerabilities, DDoS’ing websites, dumping user account databases, and taking down high-profile things that were going to really get them in trouble, like the CIA’s website. They were coordinating in IRC channels accessed by Tor.

The feds discovered that, monitored the channels, and waited for someone to fuck it up. Sabu committed the sin of packet origin, logs in just once without using Tor, gets owned immediately (see left-hand image). Immediately, a few seconds later, he gets turned into a snitch because what’s he going to do, right? He’s facing decades of federal imprisonment. So, even though he’d been doxed for months prior, at that point it’s confirmed – he goes snitch.

That’s not the interesting part. The interesting part is what happened to Jeremy Hammond. He gets identified from information in recorded chat logs with Sabu. The feds logged the packet metadata from his WiFi access point. They get a regular pen register, trap and trace order, standard wiretapping. They match the MAC address of his computer to packets going through a Tor entry node; correlate the times of Tor access, his Tor access on his WiFi access point, to his presence, his ID in the IRC channel. So a traffic correlation attack, but not of the normal kind that we think of when we think about Tor. So there’s no compromise of Tor necessary to acquire the circumstantial evidence that eventually put Jeremy Hammond away.
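To see why that kind of timing correlation is so cheap, here is a minimal Python sketch. It assumes the observer has two lists of `(start, end)` timestamps: when a nickname was present in a channel, and when a particular access point showed Tor traffic. The function name `overlap_fraction` and the numbers in the example are invented for illustration and are not taken from the case.

```python
def overlap_fraction(online_sessions, network_sessions):
    """Fraction of total 'online' time that falls inside some network session.

    Each session is a (start, end) tuple in seconds. Sessions within each
    list are assumed not to overlap one another.
    """
    total = sum(end - start for start, end in online_sessions)
    if total == 0:
        return 0.0
    covered = 0
    for o_start, o_end in online_sessions:
        for n_start, n_end in network_sessions:
            # Length of the intersection of the two intervals (0 if disjoint).
            covered += max(0, min(o_end, n_end) - max(o_start, n_start))
    return covered / total


if __name__ == "__main__":
    # Made-up example data: nickname presence vs. Tor traffic on one access point.
    irc_presence = [(100, 200), (500, 600)]
    wifi_tor_use = [(90, 210), (495, 610)]
    print(overlap_fraction(irc_presence, wifi_tor_use))
```

A fraction close to 1.0 sustained over days of observation is only circumstantial, but it needs nothing more than timestamps from both ends and no break of Tor itself.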

So, the moral of that story is: don’t fail unsafe with Tor – that’s the Sabu moral (see right-hand image). If it’s going to matter that you’re doing this, then don’t have two browsers open even, in case you accidentally type something into something that’s not the Tor Browser. Make sure everything, even your DNS, goes through Tor. Use a separate machine that’s proven to only connect through Tor – it’s a very good idea. Or, if you want to firewall it, use a firewall like pfSense to make sure that all the traffic from your network goes through Tor. And then check what you’re exposing. Go to something like ip-check.info and make sure that things are not being exposed. And of course, don’t only use Tor for operations; don’t provide the correlation of Tor usage with doing bad things. And of course, OPSEC is 24/7. This is a chat on reddit with Sabu after he was a snitch, saying: “… keep your OPSEC up 24/7. Friends will try to take you down if they have to.” Yes, never a truer word spoken by a fed snitch at that time.

Another one we’ve all heard about – Harvard student (see left-hand image). A bomb threat gets called in to Harvard during exams. It takes a matter of hours before the perp is uncovered as a student freaking out about exams. He used Tor to connect to Guerilla Mail, which adds an originating IP header.

So a lot of OPSEC fails in this case, but mainly the folly of only using privacy tools when you’re up to no good. Privacy should be had for breakfast, for lunch and for dinner. Privacy is like bacon: it makes everything better.

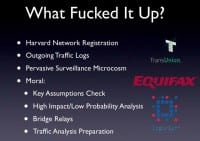

Here’s how he fucked it up (see left-hand image). Harvard’s network requires you to register your MAC address, and that’s one of the reasons why MIT is better than Harvard, because we don’t do that. But Harvard requires registration tied to MAC address, and they log the outgoing traffic. These things provided multiple potential vectors for this guy to fuck it up. Again, no compromise of Tor necessary. This is kind of a microcosm at one university of pervasive surveillance and pervasive correlation, because there’s lots of ways that those two things put together could have fucked it up for him. For example, investigators could look at who went and downloaded the Tor Browser Bundle right before the bomb threat got called in; or look at everyone who connected to a known Tor entry node at that time, or who accessed the Tor directory servers.
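The narrowing-down step described here is little more than a join between two logs. The following Python sketch shows the idea under stated assumptions: `registrations` maps registered MAC addresses to user names, and `tor_connections` is a list of `(mac, timestamp)` entries for connections to known Tor entry nodes. All names and data are fabricated for illustration.

```python
def narrow_suspects(registrations, tor_connections, window):
    """Return the registered users whose MAC touched Tor inside the time window.

    registrations:   dict mapping MAC address -> user name
    tor_connections: list of (mac, timestamp) log entries
    window:          (start, end) timestamps around the event of interest
    """
    start, end = window
    macs_in_window = {mac for mac, ts in tor_connections if start <= ts <= end}
    return sorted(registrations[mac] for mac in macs_in_window if mac in registrations)


if __name__ == "__main__":
    # Fabricated example data.
    registrations = {
        "02:00:00:00:00:01": "alice",
        "02:00:00:00:00:02": "bob",
        "02:00:00:00:00:03": "carol",
    }
    tor_connections = [
        ("02:00:00:00:00:01", 1000),
        ("02:00:00:00:00:02", 5000),
        ("02:00:00:00:00:03", 1100),
        ("02:00:00:00:00:99", 1050),  # unregistered device, cannot be named
    ]
    print(narrow_suspects(registrations, tor_connections, (900, 1200)))
```

On a network with few Tor users the resulting list is short enough to interview everyone on it, which is why only using the tool at the moment it matters is the real mistake.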

So, when I think about this I think about what we’ve already got in this country, a model for pervasive surveillance that everyone is familiar with. And that’s the credit agencies. And we do a kind of OPSEC with the credit agencies. We get credit before we expect to need it to build up a rep. Use privacy tools before you need them. We don’t cancel credit cards even when we don’t need them anymore, because they just sit there, keeping on building up our reputation. So don’t stop using the privacy tools when you finish doing something bad.

Just like with a credit agency, Tor usage can get you on a list. But you’ve got a good reason for being on that list. So there’s a lot of ways that this guy could have not fucked it up. For a start, he should have done, as we said in our tradecraft, key assumptions check and high impact / low probability analysis, being prepared for that inevitable interview with the cops as a Tor user. Or he could have used a bridge relay to connect to Tor, but more on this later. We know that the NSA has been tracking bridge relays, too. He could have been prepared for traffic analysis on his entry point, so if he’d gone off campus and used the Starbucks or used a burner cell phone with a data plan, then he probably wouldn’t have got busted. People do swattings and bomb threats all the time and there aren’t the resources to really track it down. You just have to make it hot. And of course he could have used a mail service that didn’t IP-identify, exposing his Tor exit node.

So, what do we know about how vulnerable ordinary Tor users are to state surveillance? What we do know is that Tor was troublesome enough for NSA and GCHQ that they had at least two anti-Tor symposia, Reanimation 1 and Reanimation 2, most recently Reanimation 2 in 2012 (see left-hand image). So probably that’s not a straightforward backdoor. That’s good news that they had to have a conference on it. We do know that using Tor is obvious. Tor is designed to make Tor users look alike, not to make Tor users look like non-Tor users. So fingerprinting is already done for you. We know that attacking Tor seems to be challenging enough in 2012 that they went for the browser instead, delivering a native exploit to the version of Firefox used in the Tor Browser Bundle. I think that’s a good sign too.

This is from the famous “Tor Stinks…” presentation (see right-hand image), which I’m sure you’ve also seen, so this is going to be quick. But we have an admission that de-anonymizing all Tor users all the time is not able to be done. De-anonymizing is possible but not trivial. So you’ve got to practice your COMSEC inside of your Tor sessions. Of course they’re doing traffic correlation attacks – this doesn’t seem to be on a big scale though, and staining of Tor users either by storing cookies or by using Quantum man-on-the-side attacks to force the browser to give up identifying cookies, like Yahoo cookies, Google cookies. This is one reason why, even if you are putting everything through Tor, using the Tor Browser is good because it doesn’t store those cookies. And also there are Quantum methods for delivering exploits to the computer, like the FoxAcid program.

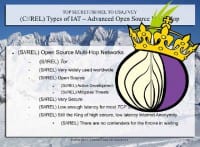

Some of that certainly should give you an idea of how safe Tor is as a single solution. Don’t ever use single solutions. But the good news for regular Tor usage is that it makes things harder, and this is the third document released at the same time (see left-hand image), saying: the system, as far as low latency anonymity goes, is still the king.

Similarly, a lot of counter-Tor efforts go into client side exploitation. So, Tails (see right-hand image) gets a positive review from the secret police: “Adds severe computer network exploitation misery to the equation.” So, what does all this tell me? Tor does put you on the radar. And Tor and Tails do make these people’s lives harder. And that’s a risk tradeoff you need to think about. So, how I think about it is that using it puts you on a list, a big list. And if your disobedient acts would put you on a much smaller list, the list of people warranting serious attention, then it’s probably worth being on that big list as well. And the more people who are on that big list, the better it is.

Also, security of your whole system is still more important in the big picture than any one single element. Or, to put it another way, there doesn’t seem to be a critical flaw in Tor that makes all these other attacks unnecessary. But if your life or your freedom depends on it, don’t ever trust one single element. This includes Tor and lots of other tools in your communications chain. Do your tradecraft. I like how it says on Cryptocat’s website: you should never trust your life or your freedom to software. I think that’s slightly overstating the problem, because we trust lives to software pretty much every day: every time we get in a car or in an airplane. But when you get in a car, you also put on your seat belt. It’s like the old Islamic proverb: “Trust in Allah but tie up your camel.”

Here’s some more good news: the big list and the small list. These are the recently leaked XKeyscore filter rules (see left-hand image). Basically, these ones show that security agencies are focused on making that big list as big as possible. Anyone who connects to the Tor directory servers or the Tor website gets put on that big list. In terms of the stated mission of the secret police, this is great, this is akin to looking for a needle in a haystack by piling on more hay. Great work! This is really good. It’s still upsetting and concerning that they are targeting everyone who uses Tor, especially in that “worse than criminal – it’s stupid” way; but no more so than the rest of the blanket surveillance that we are talking about. It just reinforces “We need more people using these services.”

The other part is worse. This is what I mentioned: collecting the addresses of bridge relays by mining them out of the emails that people send when they get a bridge relay. I think this is a really scummy thing to do, and it’s worth being aware that they’re doing it. So, would the Harvard guy have been caught even using a bridge relay? Maybe, maybe not. We don’t know, because we don’t know how much information gets shared between these three-letter agencies. But be careful out there.

Finally, in terms of really loading up on the hay, Tails and Tor are advocated by extremists on extremist forums – that’s a comment from the XKeyscore rules. So, congratulations – we’re all extreme, have a Red Bull!

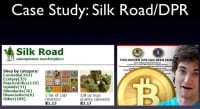

Silk Road and Dread Pirate Roberts – we all know the story (see right-hand image). Silk Road operates as a Tor hidden service for over two years before it gets busted, not a bad effort by some metrics. Not everyone knows, but we know that the feds made hundreds of drug purchases through Silk Road, slowly, carefully building the case. They let it operate probably for longer than they had to, to make sure they could get a bust. This is like standard organized crime stuff. They bust the Dread Pirate Roberts at the same time that they image and seize the Silk Road server.

So, what fucked it up? Well, we know that there were numerous OPSEC fails by the Dread Pirate Roberts: Stack Exchange posts, forum posts from the same account, including his real email, ordering fake IDs with his face on them – lots of things that were likely to fuck it up for him (see left-hand image). But we don’t know how this server was de-anonymized, and that’s the 180,000-Bitcoin question. How did that happen? We don’t know the answer. But here are some options. The Dread Pirate Roberts was already identified and monitored, and somehow he logs in without Tor one time, to fix the server for example, not out of the realm of possibility. The hosting company could have been identified by commercial means, like payment tracing, and then they imaged all the servers at that hosting company, like what happened with Freedom Hosting. They could have served an exploit to the Silk Road server, owned it and had it de-anonymize itself. That’s what they did to the Freedom Hosting customers. Or they could have performed a large-scale time-intensive hidden service de-anonymization attack.

We don’t know the answer, but let’s talk about the only one that involves an attack on Tor directly. Hidden services – what you need to know about them is they are at a huge disadvantage in terms of correlation attacks, because the attacker can prompt them to generate traffic. They are basically two Tor circuits connected together around a rendezvous point. And anyone that connects to Tor long-term, to the same thing, is vulnerable to these kinds of things, especially from hostile relays, because the network is not that big. So, sooner or later you are going to go through a malicious node. Not such a problem for the typical user, but if you are maintaining a long-term repeat business, like a worldwide drug supply company, then it’s dangerous.
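To put a rough number on that “sooner or later”, here is a back-of-the-envelope sketch. It assumes every relay pick is independent and uniformly random, which real Tor is not (it weights relays by bandwidth and pins guard nodes for a long time), and the 1% hostile fraction is a made-up illustration, not a measurement. The function name `prob_hit_malicious` is hypothetical.

```python
def prob_hit_malicious(fraction_malicious: float, selections: int) -> float:
    """Chance that at least one of `selections` relay picks is hostile.

    Simplifying assumption: picks are independent and uniform. Real Tor
    weights relays by bandwidth and keeps guards for months, so treat
    this as intuition only, not a model of the actual network.
    """
    if not 0.0 <= fraction_malicious <= 1.0:
        raise ValueError("fraction_malicious must be between 0 and 1")
    # P(at least one hit) = 1 - P(every pick is clean)
    return 1.0 - (1.0 - fraction_malicious) ** selections


if __name__ == "__main__":
    # Illustrative only: 1% hostile relays, growing number of picks.
    for picks in (1, 10, 100, 500):
        print(picks, round(prob_hit_malicious(0.01, picks), 4))
```

The point is the shape of the curve: a casual user making a handful of picks stays near the base rate, while a service that keeps rebuilding circuits for years drifts toward certainty.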

I don’t have time to go into details, but this is a paper released recently by Biryukov et al. about de-anonymizing hidden services (see right-hand image). They were able to harvest hidden services and map the popularity of a number of hidden services, including Silk Road. This is just mapping Onion addresses and the usage of them – two days for less than 100 USD in Amazon EC2 instances. They were also able to confirm that a particular Tor node acted as a guard node for a given hidden service, which allowed the de-anonymization of that hidden service – with 90% probability, in eight months for 11,000 USD, well within the realm of possibility for state actors. This relied on a bug that has since been fixed. The Black Hat talk this week that was canceled relied on a different bug, also since fixed, but they were able to stain Tor traffic to hidden services. This was very irresponsible of them, because that stain is now preserved in all of the traffic that’s being collected by state surveillance agencies. So, if Tor’s crypto was broken at a later date, those people could potentially be de-anonymized.

But the good news about it is this stuff leaves traces. This (see left-hand image) shows a spike in the number of Guard nodes when Biryukov and others were doing this, so it can be noticed. That’s the good news: we can find these bugs and fix them. But be aware that, yes, there are potential attacks on Tor, but not against everyone all the time, we think.

About hidden services the state actors don’t have much to say (see right-hand image). That’s not in that paper, it’s the same kind of thing, harvesting hidden service addresses to see what’s out there, and then using cloud instances or Tor relays; presumably keeping up with what’s being done in the open source community, but no reports of noticing these attacks on a continuous basis.

And let’s remember from the JTRIG Wiki (see left-hand image) conveniently released, I think, the day or the day before DEF CON slides were due, so I could put them in here. The spooks use Tor too, quite a lot. These are the British GCHQ JTRIG people using Tor for all kinds of things. So, even though they almost certainly commit the sin of overconfidence among others, they have a sense of assurance that their activities are not going to be de-anonymized all the time, for whatever that’s worth. And also, on the subject of whatever that’s worth, even though trust isn’t transitive and it doesn’t help anyone in this room, I know some of the Tor developers personally and I trust them not to run a government honeypot and not to make backdoors for the spooks, for whatever that is worth.

So, the key element in all of this is not the de-anonymization of the Silk Road service, which is possible. The key element is being tied by identity to the operation of that service. It’s theoretically possible for the server to have been completely identified and imaged because it’s a bidirectional Tor circuit, without Dread Pirate Roberts being busted. If he had practiced his COMSEC properly, he might not have been caught. So the moral of the story there is: don’t run a massive online drug marketplace if you don’t have a plan for when that thing gets infiltrated. Maintaining anonymity with a large organization over a long period of time is really-really hard. You’ve got to do everything perfectly. And not everyone starts out intending to be an international cybercriminal, criminal mastermind, so they don’t take precautions ahead of time. Try and decide in advance where things might go. Do that tradecraft analysis.

Let’s move to phones. What does that little Benedict Arnold in your pocket do to give you away? So much frickin’ stuff (see right-hand image). The metadata of all your calls and your location information is available to all the federal agencies, being given to them straight by the phone companies. Also location from the Exif data in the photos you take. They leak your contact lists, offer up lists of the WiFi networks you’ve accessed to anyone who’s listening in that area; unique identifiers such as IMEIs, UDIDs and so on; preference cookies from browsers; sometimes the contents of your searches if you do them in the clear.

The older devices have weak crypto, especially the ones that have a mixed version base (Android). Web browsers on these tiny devices have limited RAM and cache, so they are constantly reloading frickin’ tabs as fast as they can; and so, everything that you’ve done recently, when you move to a different network, gets re-exposed. Autoconnect, the WiFi Pineapple’s best friend: “Oh, hello ATT WiFi! Hello XFINITY WiFi! I remember you!” Apps, of course, are leaking all kinds of shit. It all adds up to a unique identifier for you and a pattern of your life.

The agencies monitor this kind of stuff constantly (see left-hand image), looking especially for concurrent presence and the on / off patterns of your phones. I’m kind of famous for not carrying a cell phone. That’s because I don’t like publicly associating myself with criminal organizations, by which I mean, of course, the phone companies. But there’s one time a year I carry a phone. It’s this little 7-year-old Nokia feature phone. It must look great in the metadata store, because every time this phone is used it’s constantly surrounded by thousands of notorious hackers.

But for the secret police, smartphones are the best gift they could ever get (see right-hand image). It’s like Christmas, Hanukkah, and Steak and Blowjob Day, all rolled up into one big spy orgasm. Their perfect scenario is just a very-very simple thing. A simple photo share that happens millions of times a day – they get everything I just mentioned, and more. They know this, and yet even the spy agencies manage to fuck it up.

Here we go with the CIA: the February 2003 rendition of Egyptian cleric Abu Omar from Italy (see left-hand image). The police were able to reconstruct a minute-by-minute rundown of that abduction from the cell phone records. 25 CIA employees and one United States Air Force lieutenant colonel were named and charged by the Italian authorities for pulling this guy off the streets, illegally abducting him and spiriting him out of the country. They were able to do this because the phones were geo-located near the abduction at the time of the abduction. They found that the phones had called one another; they had even called numbers in the U.S., like family members. They never removed their phone batteries. They geo-located their phones to the hotels at night, checked them against the registration records. Many of them used their real names. Some even made sure that their hotel stay was registered against their real frequent flyer numbers so they got the miles.

So, if you are going to use a burner phone under this kind of capacity to massively correlate every phone that’s in use all the time, then you need to know what to do to not fuck it up. In fact, if you are carrying a personal tracking device, aka cell phone, you have probably already fucked it up. But here’s what you’ve got to do to use a burner phone. Agencies specifically look in the traffic to identify burners, looking for things like length of time from activation till when they go away and are never used again; patterns of use – trying to identify burners’ cycling if they get used again. Fingerprinting of phones – EFF is suing the NSA about this right now. They log the signal strength at cell towers to get your location. Every time the phone is turned on or moves, there’s a record. And the number that is used to activate the phone or the SIM is also recorded. And also the purchases – they’ll go back to security video in the Walmart or wherever you got it from.

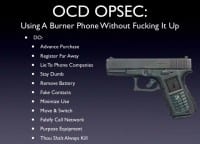

So here’s what you’ve got to do if you want to use a burner phone securely (see right-hand image). Purchase them a long time in advance before the operation. Register them far away from the operation’s area. Use false information when you register them. Go with dumb or feature phones instead of smartphones. Remove the battery when you’re not using it. Fill the phone with fake contacts. Use each one as little as possible. Switch phones when you switch locations, and leave the phone at that location so that you don’t fuck it up. Call unrelated numbers so that there’s a different network pattern per phone. And remember the purpose for each phone. And finally, destroy it when you’re finished, or you can do what McAfee suggested yesterday: “Tape it to the bottom of a long-distance 18 wheeler and let it go for a ride.” I’m not saying that’s the best way to do it, because eventually someone is going to find it and then they will do forensics on it, so think about that.

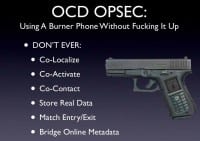

Don’t ever: turn a phone on in a location that you can be placed at; allow your phone to be on at the same time or place as another phone that you own; call the same non-burner phone from multiple burners; store any of your real contacts on that phone (see right-hand image). Matching entry / exit point – we know specifically they look for that, so don’t match the last use and first use of phones, overlap it. Don’t tie them to online services that can bridge that phone metadata, for example, two-factor authentication on Gmail accounts. Think what you would do to red-team a massive database of location, time, call destination and call length metadata. Anonymity is hard. If you can’t go to this much trouble to not fuck it up, then evaluate whether the risks you’re taking are worth it.
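To make the matching entry / exit point concrete, here is a minimal red-team sketch of that query. Everything in it is hypothetical: the `PhoneLifetime` record, the `handoff_candidates` function, the 24-hour window and the sample data are assumptions for illustration, not anything taken from a leaked document. It just pairs up phones where one goes dark shortly before another first appears.

```python
from dataclasses import dataclass


@dataclass
class PhoneLifetime:
    phone_id: str
    first_seen: float  # hours since some arbitrary start of the data set
    last_seen: float


def handoff_candidates(phones, max_gap_hours=24.0):
    """Return (old, new) id pairs where `new` first appears shortly after
    `old` is last seen: the pattern of swapping one burner for the next.

    Assumption: a real system would also compare location and contacts;
    this sketch looks at timing only.
    """
    pairs = []
    for old in phones:
        for new in phones:
            if old.phone_id == new.phone_id:
                continue
            gap = new.first_seen - old.last_seen
            # A negative gap means the lifetimes overlap, so no clean handoff.
            if 0 <= gap <= max_gap_hours:
                pairs.append((old.phone_id, new.phone_id))
    return pairs


if __name__ == "__main__":
    sample = [
        PhoneLifetime("A", first_seen=0, last_seen=100),
        PhoneLifetime("B", first_seen=105, last_seen=200),  # starts right after A dies
        PhoneLifetime("C", first_seen=50, last_seen=400),   # overlaps both
    ]
    print(handoff_candidates(sample))
```

Phone C never shows up in a pair because its lifetime overlaps the others, which is the reason for the advice to overlap rather than match.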

Let’s go to messaging (see right-hand image). After all these years, email still fucking sucks. Fighting spam aids tracking because that’s why they insert sender IPs and other information into the headers. After all these years of the Wall of Sheep, webmail servers are still going to HTTP and not forcing HTTPS, so a more accurate sheep-related analogy is this – a fucking sheep got filled with goddamn worms. Mail services, even if they implement SSL, have weak server-side storage – remember the GCHQ slide with that infamous smiley face. Email is still fundamentally broken, because even if you use PGP or S/MIME, the metadata is still not encrypted, and metadata can still fuck you up.
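As a small illustration of how much sits in the headers even when the body is PGP, here is a sketch using Python’s standard `email` module. The sample message, the addresses and the function name `leaked_ips` are made up for the example (the IP is from a documentation-only range), and real `Received` chains are messier than this.

```python
import re
from email import message_from_string

# Loose IPv4 pattern; good enough for a demo, not a validator.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def leaked_ips(raw_message: str) -> list[str]:
    """Collect IPv4 addresses found in the Received and X-Originating-IP headers."""
    msg = message_from_string(raw_message)
    found = []
    for name in ("Received", "X-Originating-IP"):
        for value in msg.get_all(name, []):
            for ip in IPV4.findall(value):
                if ip not in found:
                    found.append(ip)
    return found


# Hypothetical message: encrypted body, plaintext headers.
SAMPLE = (
    "Received: from laptop.example (unknown [203.0.113.7])\n"
    "\tby mail.example.org with ESMTP; Thu, 7 Aug 2014 10:00:00 -0700\n"
    "X-Originating-IP: [203.0.113.7]\n"
    "From: alice@example.org\n"
    "To: bob@example.net\n"
    "Subject: encrypted\n"
    "\n"
    "-----BEGIN PGP MESSAGE-----\n"
)

if __name__ == "__main__":
    msg = message_from_string(SAMPLE)
    # Who, to whom, when and from where are all readable without any key.
    print(msg["From"], msg["To"], leaked_ips(SAMPLE))
```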

This is a huge one – people keep their email, it’s logged insecurely on the client side either in browser caches and so on where it can be exposed, or people just having bad retention habits and saving all their email. Like someone famously said: “It doesn’t matter that I don’t use Gmail, because Google already has all my mail, because all my friends do.” Remember that. Google is part of the problem. And instant messaging is not much better. So you’ve got to remember the ‘psycho ex principle’: never say anything, never put anything in a message packet that a psycho ex could credibly use against you later. Assume everything is being saved forever, especially by the NSA if it’s encrypted, because their retention rules allow them to keep it forever if it’s encrypted.

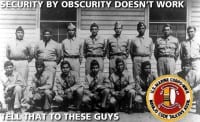

We all like to make fun of security by obscurity, right? But sometimes that’s all we have. These are the code talkers in World War II (see left-hand image), a classic example of security by obscurity that worked. At its best, it’s fully deniable; it’s arguably the safest communication because no one even knows it’s a communication. But if you are going to use it, if you are going to use security by obscurity, make sure you don’t fuck it up.

Here’s another CIA example, General Petraeus (see right-hand image). Really picking on these guys going all the way to the top. He’s having an affair with his biographer. They write messages to each other in draft emails on Gmail, and then don’t send them, just delete them when they’re read. Multiple people have access to the account. So, in spy terminology, that’s recasting email as a dead drop instead of a transit mechanism. Now, I would never begrudge anyone a booty call, but if you’re gonna fuck – don’t fuck it up!

They used the exact technique that was developed by people that the CIA was already monitoring, Al-Qaeda people in the Middle East somewhere. But using multiple accesses to a single email address from different locations – that’s exactly what pervasive surveillance was designed to expose (see left-hand image). Anyone in this room, if they were given the database and told: “Write me some interesting queries,” one of the first things you would do is say: “Give me all the accesses to email accounts that are impossible journeys,” – within times in which you could not physically make that location transit. Secondly, don’t rely on things getting deleted. If Google knows about it, it’s no longer safe. It’s cheaper to keep things than to delete them these days.
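That “impossible journeys” query is easy to sketch. The version below is a toy under stated assumptions: the `Access` record, the 900 km/h speed cap (roughly airliner speed) and the sample coordinates are all hypothetical, and it presumes each access has already been geo-located, which in practice is the noisy part.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Access:
    account: str
    timestamp: float  # seconds since epoch
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def impossible_journeys(accesses, max_speed_kmh=900.0):
    """Flag consecutive accesses to one account that are too far apart
    to be one person travelling at `max_speed_kmh` or less."""
    by_account = {}
    for a in sorted(accesses, key=lambda a: a.timestamp):
        by_account.setdefault(a.account, []).append(a)

    flagged = []
    for account, events in by_account.items():
        for prev, cur in zip(events, events[1:]):
            dist = haversine_km(prev.lat, prev.lon, cur.lat, cur.lon)
            hours = (cur.timestamp - prev.timestamp) / 3600.0
            if dist > max_speed_kmh * hours:
                flagged.append((account, prev, cur))
    return flagged


if __name__ == "__main__":
    log = [
        Access("shared-drafts", 0, 27.95, -82.46),    # hypothetical location 1
        Access("shared-drafts", 600, 35.23, -80.84),  # ~800 km away, 10 minutes later
        Access("normal-user", 0, 40.71, -74.00),
        Access("normal-user", 600, 40.71, -74.00),    # same place, nothing odd
    ]
    for account, prev, cur in impossible_journeys(log):
        print(account, prev.timestamp, cur.timestamp)
```

A shared dead-drop account lights up immediately, while a single user logging in twice from the same place does not.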

So, be judicious about your insecurity, understand your insecure channels. It’s okay to use them, but manage them. Do your quality of information check and your “what if?” analysis. You should understand by now in this talk: Petraeus could have still covered his tracks, even if it looked plain for all to see that they were two people having an affair, as long as it couldn’t be tied back to him. This kind of thing happens millions of times every day; hiding in the noise.

Here are some common broken and compromised services (see right-hand image). Commercial webmail is basically all fucked. I advise people to run their own mail server – at least, when the feds are interested, you’ll know about it. Metadata’s still a bitch, though; gives a lot away. This is an image of the mail that Lee Harvey Oswald sent to the Kremlin. So, that’s what metadata is. Hopefully, Dark Mail will do something about this. I didn’t go to Ladar’s talk, I’m going to watch it later, but hopefully it was good.

Skype – definitely compromised; no question (see left-hand image). This is from a SIGINT Enabling document referring to backdoors in commercial service providers: by 2013, full SIGINT access to a major Internet peer-to-peer voice and text communications system. Well, what do you suppose that is? Too speculative? NSA briefing notes from the visit by the SIGINT director of German intelligence; under “Potential Landmines”, a carefully parsed statement saying: the official line is that Skype has been owned by tailored access at the end points, meaning compromising one or other of the communicating parties’ computers, not in transit. But a clear implication from the language is that they’ve done a deal with Skype that they didn’t want to tell the Germans about, even though they are allies.

And if that doesn’t convince you, JTRIG’s Wiki spells it out: real-time call records, bidirectional instant messages and contact lists – pwned. So, fuck Skype. I mean, you can still use it if you want to, but understand that to people with the right capabilities it’s equivalent to unencrypted. So, figure out your threat model.

Lots of chat, I think, is broken, if we’re including the secret police in our threat model (see right-hand image). Let’s just assume IRC is pretty much all collected. If you can grab all of port 80, then why wouldn’t you just grab 6667? Even if you’re using SSL from you to the IRC chat room, if one single person in the group chat goes unencrypted it means that that stuff is completely ownable by mass surveillance. And we know that IRC is on the radar for spooks because of QuantumBot taking control of IRC bots, over 140,000 bots taken control of and co-opted. Lots of reasons, of course, that spooks might be interested in IRC bots. Persistent presence all over IRC is just one of them, but it’s something to be aware of. Remember things like when Google promises “Off The Record”; all they mean is that they don’t keep it. It’s not true OTR. Also remember Quinn Norton’s great essay “Everything Is Broken”. Some OTR implementations don’t encrypt that first message, and she has tales of people fucking it up because of that. So don’t let your story be one of them.

So, what can we use? (see left-hand image) What might not be completely fucked? Some OTR implementations. Some people like Quinn have bad things to say about libpurple, but it’s everywhere. Cryptocat, I think, after some initial security fails really did the right thing, opened it up to community peer review and security auditing; they really want to make a good product. I’ve been using Bitmessage a lot lately. Every so often, it goes berserk, runs all my processors at 100%, but it seems to be decent as long as you can connect to it regularly, because it throws messages away after two days because of performance problems. So it’s not good for intermittent usage. I haven’t played with RetroShare too much but I’ve liked what I’ve seen – I like the peer-to-peer structure and the key management. That seems like a good direction to go in.

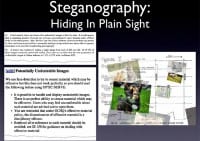

But it sums up that we really need more auditing, we need more peer reviews so that we can see what we can really trust. And it also suffers from the perspective of encrypted communications, so we need more steganography.

I’m about to be dragged off the stage. I have two things to say. Number one, we say there’s a lot of suspicion of glassholes because they might be recording us. Well, guess what? Everyone’s fucking recording shit. People have been keeping their email and keeping their IMs for so long and not deleting them. We need ephemeral messaging that’s not just in the smartphone app space. We need more steganography (see image below).

One thing… GCHQ, they had to use special staff to view the Yahoo chat videos that they were legally collecting because of all the nudity. So send plenty of nudity, make it bad. The thing on the slide is called Cats n Jammer, a friend of mine wrote it. It mixes cat pictures with things that look encrypted, things that look like security documents. So you can swap cat pictures for porn.

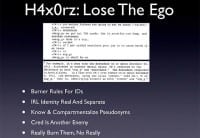

One last thing I wanted to say to the people in the audience, the h4x0rz: lose the ego, follow those burner rules for your identities, keep your in-real-life identity real and separate from the ones that you do bad things with (see right-hand image). People come to conferences like DEF CON to get cred, but cred is your enemy. Don’t talk about the shit that you’re doing. This is from the criminal complaint against Jeremy Hammond; this is where Sabu goes to great trouble to connect all of Jeremy Hammond’s identities together so they can put that criminal complaint together. And so, really, burn those things.

Finally, support the EFF, ask for things. Good luck! It’s better to be lucky than smart sometimes. And never surrender to obedience. Thank you!