Ben Hagen, an acclaimed security consultant from the US who ran Application Security for the Obama re-election campaign, delivers a talk at the 29th Chaos Communication Congress, sharing his insider’s view of the recent presidential election campaigns from a security perspective.

Hello everybody! I’m really excited to talk to you guys about security and political campaigns. I had the good fortune to run the Application Security Department for the Obama 2012 campaign, and I think we can all agree that technology has an ever-increasing importance in politics in general, and especially in political campaigns. Certainly, in the US we now see lots of money changing hands over the Internet; we see lots of community building, lots of communication, lots of advertising. Basically, technology is a really big part of campaigns in the US, and I think across the world as well.

So, today we’re going to talk about a lot of different things (see right-hand image). I’ll give you some very brief background about myself. We’ll then talk about the 2008 and 2012 Obama campaigns, the role technology played in each, and the importance of security. Then we’re going to get into the threats: who the threat actors are and what threats they might pose to campaigns. Finally, we’ll talk about the technical side of enterprise security, cloud security, and application security at campaigns. So, let’s get started.

So, me: I went to university in the US, at a school that’s kind of in the middle of nowhere. It’s famous for agriculture, veterinary medicine, and computer engineering, which is a really odd combination. As an undergraduate I actually chose to study political science and Mandarin Chinese, which, you know, obviously “fits” with veterinary science and computer engineering. Upon graduating I didn’t really get the jobs I was expecting, so I went back to school for a Master of Science in Information Assurance, which is a fancy way to say computer security, through the computer engineering department. They have a really great program that teaches you the fundamentals of security basically all the way through the stack. I had always been a computer hobbyist, so it wasn’t a big transition for me, but it was a great experience.

After university I joined the Security Operations Center of a Fortune 100 company. We did monitoring for their own organization, but also for their customers. If you’ve ever seen the movie WarGames, with the big missile command screens in the background and everybody sitting in front of them, that’s basically the environment we worked in. We monitored networks for security incidents, did investigations, and crunched through millions of events a day trying to find the things that were bad. I started off as an analyst, and then I moved into some development work: automation, ticket management, and the like.

After a couple of years of that I got into security consulting, which is great, because you get to see the problems that lots of different people have with security. You get to go from organization to organization, talk to them about their problems, talk to them about how you can solve their problems; you get to find problems and you usually don’t have to fix them, which is kind of the sweet spot, right? It’s always fun to point things out and not be responsible for what happens afterward. In security consulting I did a lot of penetration testing, a lot of application assessments, specifically lots of web application assessments.

I’ve been based out of Chicago for about 8 or 9 years, I think. Chicago is a pretty interesting place to be in technology. The technology community there is pretty small, we all kind of know each other, and after doing about 3 or 4 years of consulting, I was having dinner with a bunch of friends in technology in Chicago, one of whom had recently started as the CTO for the Obama 2012 re-election campaign.

We started talking about his role in the campaign and what he was doing, and he told me that he often had trouble sleeping at night. They were developing, or at that point planning to develop, lots of really great new technology for the campaign: exciting stuff in terms of community building, communication, data, big data analytics, and so on. And he was worried about the role security would play in all of this. He was worried that we would be a big target, that the hackers of the world would be after us, and that they could ruin the whole thing for him.

Naively, I said in response that I thought I could help them with that. A couple of months later I joined the campaign as a Senior Application Security Engineer, which is a little misleading, because I was the only application security person; in fact, I was the only full-time security person in the entire campaign.

So, I was there by my lonesome self, figuring out what security meant there and how we could go about solving those issues. Here is a picture of the headquarters in Chicago (see left-hand image); it doesn’t look like any office I had ever worked in. It was an incredibly dynamic, shoestring-budget kind of environment, where lots of young people came in really excited to work. It was really interesting, because it’s the only place I’ve worked where people were genuinely excited to come to work every day. A lot of that is because they were obviously working for somebody they believed in, but they were also there because of the challenges we were facing. Everybody was working on cutting-edge stuff, whether in technology, communication, or advertising; everybody was doing something new, so it was really great to work in that kind of environment.

As the application security person, I had a lot of different roles. I was part of the technology team that developed applications and deployed them to the Internet, and I was responsible for making sure that the software we were developing and deploying was secure; basically, that we didn’t get hacked. That involved managing a secure development life cycle, doing application audits and assessments, monitoring our deployments, and kind of everything else involving applications.

I also helped out with enterprise security, which is the more traditional IT side of an organization: headquarters networking, email, secure architecture for our field office networks, and that kind of thing. I helped deploy the IDS (Intrusion Detection System) at our headquarters and did monitoring on it. I also trained people, helped them understand what security meant within the organization, and acted as a general resource for security.

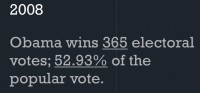

So, getting into the actual campaigns: 2008. Obviously, Obama won that, and he won it by a pretty big margin. I’m not sure if everybody is familiar with the electoral system in the US; it’s pretty weird in that the popular vote doesn’t necessarily count for much. It’s the electoral votes that count for everything. Essentially, there are 538 possible votes, and you need to win a majority of those, meaning at least 270. If you reach that goal, you are elected as the next President. Obama, with 365, had a pretty clear majority in terms of electoral votes; he also won the popular vote (see image above).
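
The majority threshold is plain integer arithmetic: a candidate needs strictly more than half of the 538 electoral votes. A minimal Python sketch (the function name is illustrative, not from the talk) checks the figures quoted here:

```python
def majority_threshold(total_votes: int) -> int:
    """Smallest vote count that is strictly more than half of total_votes."""
    return total_votes // 2 + 1


TOTAL_ELECTORAL_VOTES = 538
needed = majority_threshold(TOTAL_ELECTORAL_VOTES)
print(needed)  # 270

# Electoral vote totals quoted in the talk, and the margin above the threshold.
for year, won in {2008: 365, 2012: 332}.items():
    print(year, won, won - needed)  # 2008 365 95, then 2012 332 62
```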

That was a pretty big win, and technology played a groundbreaking role in this election. This was the first time we saw a lot of donations happening online, and we saw a lot of online communities spring up in support of Obama. He had a pretty sophisticated homepage that allowed people to discuss and comment on different news articles and communicate that way.

The campaign also had its share of security problems; some of them weren’t very serious, but they still garnered really big headlines. And I think that points to one of the problems you have in political campaigns: no matter how small the problem is, it’s going to make headlines everywhere.

Here is an example: there was a cross-site scripting bug on the homepage of the 2008 campaign (see left-hand image). I wasn’t involved with that campaign, but I’ve had the opportunity to talk to people who were. Essentially, somebody decided it would be a great idea to redirect everybody’s traffic from the Obama homepage to Hillary Clinton’s page; at the time, the two were vying to become the Democratic candidate.

So, it was a pretty small, run-of-the-mill stored cross-site scripting bug in the commenting system that redirected people. Cross-site scripting happens every day, but you don’t see headlines in major newspapers about it on normal websites. We did have more serious issues, though.
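
The talk doesn’t say how the 2008 comment bug was fixed. The standard defence against stored XSS is to encode user-supplied content when it is written into HTML. Below is a minimal sketch using Python’s standard `html.escape`; `render_comment` and its markup are hypothetical, and real applications usually get this from a templating engine with auto-escaping enabled:

```python
import html


def render_comment(author: str, body: str) -> str:
    """Build a hypothetical comment snippet with every user-controlled field escaped."""
    # html.escape turns <, >, &, and quotes into entities, so injected markup
    # such as <script> is displayed as text instead of being executed.
    return (
        '<div class="comment">'
        f'<span class="author">{html.escape(author)}</span>'
        f'<p>{html.escape(body)}</p>'
        '</div>'
    )


malicious = '<script>window.location="https://example.com/"</script>'
print(render_comment("visitor", malicious))
```

Escaping on output, rather than trying to strip "bad" input on the way in, means the stored comment stays intact while the browser never interprets it as code.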

In 2008, both Obama, the Democratic candidate, and McCain, the Republican candidate, were affected: after the election was over, it was revealed that both campaigns had been compromised by some pretty sophisticated malware. This was reported pretty widely just after the election (see left-hand image), but it was overshadowed by the fact that the election was over, so you didn’t see any huge headlines about it. Essentially, both campaigns were hit by spear phishing attacks aimed at campaign officials; through those, malware was installed on computers throughout the campaigns, and documents were exfiltrated. We believe the intent was to steal foreign policy or economic policy documents, but who was behind it and what exactly they were after is a best guess. So, that’s the more serious end of the issues you can have in a campaign.

Moving on to the most recent campaign, 2012, the one I actually helped with. Again, thankfully, Obama won, with 332 electoral votes, well above the 270 needed, though he only narrowly won the popular vote, with 50.96% (see right-hand image). It was a very contentious election; for a very long time it was up in the air who was winning and how the election would turn out.

I think this time around we also stepped up the technology game: we took what was done in 2008 and did more of everything. We built a lot more applications; donations were a much bigger part of it, and so were online communication and advertising.

I think a really clear way to see the transition from 2008 to 2012 technology is to look at social media (see left-hand image). On Facebook there was a huge jump in followers: in 2008 the Barack Obama account had just over 2 million followers, and that jumped to 32 million, one of the biggest follower counts on Facebook. Twitter is similar: in 2008 it was just getting started and not a lot of people used it; the account had about 125 thousand followers, which jumped to over 22 million for this campaign. These were the accounts the campaign used to communicate with interested people throughout the country.

Online donations in 2008 amounted to about $500 million; in 2012 that jumped to $690 million. That was a really big achievement, mostly because it was really questionable how people would approach Obama this time around: the country was in economic turmoil, lots of people were unemployed, and it was really hard to know how things would turn out. Total donations in the 2012 campaign exceeded 1 billion dollars, so that’s a lot of money. And that makes us a target; in other words, it makes threats a very big part of what we’re doing with technology.

The role technology played in the election is often described as a force multiplier. What that means is that if somebody can call 100 people on their phone in a day, technology should increase their effectiveness: either the people they’re talking to are more likely to be persuadable, or they can actually call more people.

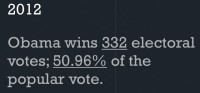

All of this (see right-hand image) happened in less than 2 years: the campaign got off its feet in early 2011 and, of course, ended in November 2012, around the 583-day mark. We built most of the applications from scratch and deployed everything in new environments, ending up using Amazon Web Services for almost everything we did. In the weeks running up to the election we would have around 200 to 300 instances running at a time, and at peak traffic that would jump into the thousands of instances, so that’s a large, complicated environment.

Basically, we had deployments in several different availability zones, which are separate data centers. All of these were interconnected, with lots of very interesting applications. The most talked-about one is called Narwhal: a big data backend system that collects data, normalizes it, processes it, runs interesting analytics, and acts as an API for some of the other applications within the campaign.
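
The talk names Narwhal but doesn’t describe its schema or code. To illustrate what "collects data and normalizes it" typically means, here is a hypothetical Python sketch: records from two invented source systems with different field names are mapped onto one common schema and deduplicated on email address. Every source name, field, and function here is an assumption for illustration only:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contact:
    """Hypothetical common schema that records from every source are mapped onto."""
    email: str
    first_name: str
    last_name: str
    zip_code: str


def normalize(source: str, record: dict) -> Contact:
    """Map a raw record from a (hypothetical) source system onto the common schema."""
    if source == "donations":
        first, _, last = record["name"].strip().partition(" ")
        return Contact(record["email"].strip().lower(), first, last, record["zip"][:5])
    if source == "volunteers":
        return Contact(record["Email"].strip().lower(), record["First"],
                       record["Last"], record["PostalCode"][:5])
    raise ValueError(f"unknown source: {source}")


def merge(rows):
    """Normalize every (source, record) pair and deduplicate on email address."""
    by_email = {}
    for source, record in rows:
        contact = normalize(source, record)
        by_email.setdefault(contact.email, contact)  # keep the first record seen
    return list(by_email.values())


rows = [
    ("donations", {"name": "Ada Lovelace", "email": " Ada@Example.org ", "zip": "60601-1234"}),
    ("volunteers", {"Email": "ada@example.org", "First": "Ada",
                    "Last": "Lovelace", "PostalCode": "60601"}),
]
print(merge(rows))  # a single Contact for ada@example.org
```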

We also developed what we called the Online Field Office, or Dashboard. Essentially, it’s a fully independent social network with a lot of the same capabilities you would see in Facebook or any other social media application, but it was meant to help people organize online and to spread the campaign’s goals and activities by region, neighborhood, or interest; you could group people and choose how your communications went out. Really interesting stuff.
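
The Dashboard’s data model isn’t public. As a rough illustration of grouping people by region, neighborhood, or interest and choosing who receives a message, here is a hypothetical Python sketch; the `Member` fields and helper names are invented for the example:

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Member:
    """Hypothetical member profile; the real Dashboard data model isn't public."""
    name: str
    region: str
    neighborhood: str
    interests: set = field(default_factory=set)


def group_by(members, key):
    """Bucket member names by any attribute, e.g. region or neighborhood."""
    groups = defaultdict(list)
    for member in members:
        groups[key(member)].append(member.name)
    return dict(groups)


def audience(members, region=None, interest=None):
    """Select recipients for a message, optionally narrowed by region and/or interest."""
    return [m.name for m in members
            if (region is None or m.region == region)
            and (interest is None or interest in m.interests)]


members = [
    Member("Ana", "Ohio", "Columbus North", {"healthcare"}),
    Member("Ben", "Ohio", "Cleveland East", {"education"}),
    Member("Cy", "Iowa", "Des Moines West", {"healthcare", "education"}),
]
print(group_by(members, lambda m: m.region))                    # {'Ohio': ['Ana', 'Ben'], 'Iowa': ['Cy']}
print(audience(members, interest="healthcare"))                 # ['Ana', 'Cy']
print(audience(members, region="Ohio", interest="education"))   # ['Ben']
```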

We also had call tools, which let you log onto a website and, if you’re interested in volunteering for the campaign, make phone calls to potential voters: trying to talk them into voting for Obama, confirming that they support Obama, or helping them vote. Not everything ended up being super popular, but a lot of these tools were very effective; on Election Day, for example, over a million calls were made through the call tool alone. We also had single sign-on services and a bunch of other stuff.

Here is the technology team in Chicago, with the famous Bean (see left-hand image); if you’ve never been there, I highly recommend visiting, it’s a great city. Most of these guys came from different start-ups throughout the country. Almost everybody who joined the team took a significant pay cut, just to take advantage of the opportunity to work on this campaign and do some groundbreaking stuff. I wouldn’t say everybody was entirely politically motivated; I think a lot of us were motivated by the opportunity to work with a great team on really interesting problems.

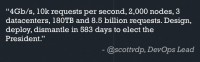

So, moving on to threats. As technology’s role grew and we used it more on a day-to-day basis within the campaign, we began to sort the threats we faced, or rather the actors causing security problems for us, into 5 categories: nation states, organized crime, hacktivists, political opponents, and a generic bucket of attacks of opportunity (see image to the right).

In the nation-state category, this goes back to 2008, when the systems were compromised, probably for the purpose of stealing economic or foreign policy information. Nation-state actors are the classic “advanced persistent threat”: basically, motivated opponents who are willing to spend large amounts of resources and time to compromise a system.

Organized crime doesn’t necessarily mean the mafia; it means typical criminal actors, most likely intent on stealing money. At the campaign we had a lot of money: over a billion dollars came in, we processed a lot of credit card transactions and donations, and we had an online e-commerce store. Obviously, all of that can be a target for typical criminal activity: stealing money, stealing credit card numbers, that kind of thing. Most of the threats we saw from that group were typical web scanning and attacks on the infrastructure itself, such as SQL injection. Thankfully, we’re not aware of anything actually working against us, which is great.
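
The talk doesn’t describe how the campaign’s code guarded against SQL injection. The standard defence is parameterized queries, shown here as a minimal sketch with Python’s built-in `sqlite3` module; the `donations` table and its columns are hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE donations (donor_email TEXT, amount_cents INTEGER)")
conn.executemany("INSERT INTO donations VALUES (?, ?)",
                 [("ana@example.org", 2500), ("ben@example.org", 10000)])


def donations_for(email: str):
    """Look up a donor's donations with a bound parameter (hypothetical schema)."""
    # Vulnerable alternative, for contrast only (do NOT do this):
    #   conn.execute(f"SELECT ... WHERE donor_email = '{email}'")
    # With a "?" placeholder the value is sent separately from the SQL text,
    # so quotes inside `email` cannot change the structure of the query.
    cur = conn.execute(
        "SELECT donor_email, amount_cents FROM donations WHERE donor_email = ?",
        (email,),
    )
    return cur.fetchall()


print(donations_for("ana@example.org"))  # [('ana@example.org', 2500)]
print(donations_for("' OR '1'='1"))      # [] : the payload is matched as a literal string
```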

Hacktivism is the Anonymous threat that people talk about. Most of what we see there is denial-of-service with the intent of making a political statement, or attempts to compromise a system, steal information, and publish it to call attention to some political cause. The typical modus operandi for Anonymous is to steal information, publish it on Pastebin, and then say: “Hey, look, these people suck. Don’t vote for them”, or “Read our message”, etc.

Political opponents: I’m not talking about the Republican Party in this case; I’m talking about activists who are against Obama. It’s really not in either campaign’s interest to attack the other; that would be really big news and cause big problems if it ever came to light, so we weren’t really worried about that. We were worried about political activists staging protests or trying to commit sabotage or fraud within our online systems.

And finally, attacks of opportunity, which is the general bucket. If you have a server on the Internet, even if you’re not a particular target, you’re going to get attacked by the background noise of people scanning the Internet for vulnerabilities. This is usually people looking for vulnerabilities in commonly deployed software: phpMyAdmin, various Java exploits, various framework exploits. That kind of scanner activity isn’t really looking for you; it’s looking for anything it can exploit. So you always have to worry about that as well.
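
One cheap way to see this background scanning is to count requests for software you don’t run. The sketch below is a hypothetical Python example, not the campaign’s tooling: the probed-path list is illustrative, and it assumes web server logs in Common Log Format:

```python
import re
from collections import Counter

# Hypothetical list of paths that opportunistic scanners commonly probe
# (phpMyAdmin, WordPress login, Tomcat manager, CGI scripts). The point is
# that none of them exist on the sites being protected.
PROBED_PREFIXES = ("/phpmyadmin", "/pma", "/wp-login.php", "/manager/html", "/cgi-bin/")

# Captures the client address and request path from a Common Log Format line.
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "[A-Z]+ (\S+)')


def scanner_hits(lines):
    """Count requests per client IP that target commonly probed paths."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match.group(2).lower().startswith(PROBED_PREFIXES):
            hits[match.group(1)] += 1
    return hits


sample = [
    '203.0.113.7 - - [06/Nov/2012:10:00:01 -0600] "GET /phpMyAdmin/index.php HTTP/1.1" 404 162',
    '203.0.113.7 - - [06/Nov/2012:10:00:02 -0600] "GET /pma/ HTTP/1.1" 404 162',
    '198.51.100.4 - - [06/Nov/2012:10:00:03 -0600] "GET /donate HTTP/1.1" 200 5120',
]
print(scanner_hits(sample))  # Counter({'203.0.113.7': 2})
```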

In terms of the effect these have on the campaign, we were obviously worried about theft: theft of money, theft of information, theft of sensitive documents, that kind of thing. We were particularly sensitive to sabotage, especially during key moments of the campaign such as the debates or Election Day. If you’re able to impact the availability of our systems, you can noticeably affect how efficiently we’re able to contact people or take donations. So we were really interested in preventing sabotage attempts. And finally, political statements: these are people looking to deface our website or put a message online.

Keeping in mind all the threats we faced, let’s talk about what we actually did to mitigate them at the campaign. Starting with enterprise security and social attacks: at the campaign we saw spear phishing attacks aimed at very select individuals within the campaign itself. These were of a higher caliber than I’d ever seen at any other organization, targeting small groups of people with very carefully tailored messages.

For example, a policy analyst, or a group of policy analysts, would get an email from their manager (sent, of course, from a random Yahoo email account or something similar) saying: “Hey, look, I’ve got this new information about this event that happened yesterday. You guys need to read this and have a report for me tomorrow.” In fact, it’s a spear phishing attack; the attachment is malware designed to infect the computer, following the typical infection cycle: a dropper downloads a rootkit, which then communicates back to a central server. We saw this kind of thing on a weekly basis.
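
The talk doesn’t describe the campaign’s mail filtering. The pattern above, a familiar name on an outside address, lends itself to a simple heuristic, sketched here in Python with the standard `email.utils.parseaddr`. The organization domain and staff names are hypothetical placeholders:

```python
from email.utils import parseaddr

# Hypothetical values: the organization's own mail domain and the display
# names of staff whose identities are likely to be borrowed in phishing mail.
ORG_DOMAIN = "example-campaign.org"
STAFF_NAMES = {"Jane Manager", "Sam Director"}


def looks_like_impersonation(from_header: str) -> bool:
    """Flag mail that uses a staff member's name but comes from an outside domain."""
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    return display_name in STAFF_NAMES and domain != ORG_DOMAIN


print(looks_like_impersonation("Jane Manager <jane.manager.hq@yahoo.com>"))   # True
print(looks_like_impersonation('"Jane Manager" <jane@example-campaign.org>'))  # False
```

A check like this only catches the crudest impersonation; it complements sender authentication (SPF, DKIM, DMARC) and user training rather than replacing them.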

We had several instances of denial-of-service, or attempted denial-of-service; most of these we could trace back to some sort of online activism. A lot of the threats came from Anonymous-style groups, where you’d see people in IRC talking about how they hate Obama: “Let’s denial-of-service him!” A couple of hours later they’d have some sort of script ready for people to download, and they’d start attacking us. We never really saw a huge effect from that; it more or less came down to this: if you could keep them at bay for 30 minutes or so, people would lose interest and stop trying. And using Amazon’s infrastructure, plus things like Akamai caching, it was really pretty easy to avoid any noticeable effect.

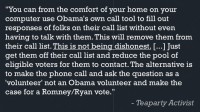

We had a pretty big footprint on the Internet, and we saw constant attempts to scan us, with lots of security tools being used to try to find vulnerabilities. We also saw lots of fraud and sabotage attempts from opposing activists. Here is one example (see left-hand image): a doctor in Florida who was a Tea Party activist. For those of you unfamiliar with it, the Tea Party is a very conservative, very vocal group that generally aligns with the Republicans. This guy was urging people online to log on to Obama’s call tool and either click through numbers to get them removed from the system, or actually call people and, instead of delivering a message of support for Obama, try to convince them not to vote for Obama and to vote for Romney or somebody else instead.

This is the most obvious example. We saw it online, we were able to trace it back to the guy’s account on our systems, and at that point it was pretty easy to mitigate the effect he could have. But it did point us toward implementing further fraud-prevention features within our systems. We had some pretty sophisticated algorithms designed to detect abnormal behavior in tools such as the call tool, so we could highlight and mitigate that threat.

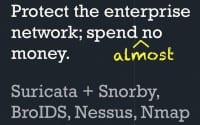

In terms of the enterprise network, and actually for everything we’re going to talk about, we almost exclusively used free and open source software (see image). For the enterprise we didn’t have a lot of money to spend on security hardware, so we ended up just deploying a box running Suricata, which is a great Snort-like IDS. It operates very similarly to Snort and the rules are very much the same; the difference is that it’s multi-threaded, so it can use multiple cores and performs much better, and it can be GPU-accelerated, which is really awesome: you can monitor a ton of traffic with very few resources.

Snorby is an event management system for IDS logs: it’s a web application that lets you monitor alerts, add comments, take notes, and carry out investigations.

Bro IDS is something else we deployed, and it’s a great system. It’s not really a typical IDS; it’s more of a network monitoring system that logs the things in your traffic that are interesting to you. We had it logging things like DNS queries, and we would push those into a database and mine them for activity related to malware or compromised machines. So you can do some really creative stuff with Bro. Nessus: you have to pay for it, but it’s a good vulnerability assessment tool. Nmap is one of my favorite tools ever invented, so we used it a lot.
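
As a rough illustration of the DNS data mining described above, the sketch below parses a Bro (now Zeek) `dns.log` in its default tab-separated format, where column names come from the `#fields` header line, and flags queries to a watchlist of known-bad domains or with long, random-looking first labels. The watchlist, the sample records, and the entropy threshold of 3.5 bits per character are hypothetical; the talk does not describe the campaign’s actual queries.

```python
import math
from collections import Counter

def parse_zeek_tsv(lines):
    """Yield one dict per record from a Bro/Zeek TSV log such as dns.log.
    Column names are taken from the '#fields' header line."""
    fields = None
    for line in lines:
        line = line.rstrip("\n")
        if line.startswith("#fields"):
            fields = line.split("\t")[1:]
        elif not line or line.startswith("#"):
            continue  # other header/footer lines and blank lines
        elif fields is not None:
            yield dict(zip(fields, line.split("\t")))

def label_entropy(label):
    """Shannon entropy of a DNS label, in bits per character."""
    counts = Counter(label)
    total = len(label)
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def suspicious_queries(records, watchlist, min_len=12, entropy_threshold=3.5):
    """Return (source host, query) pairs that hit the watchlist or look random."""
    hits = []
    for rec in records:
        query = rec.get("query", "-").lower().rstrip(".")
        if query in ("-", ""):
            continue  # Zeek writes '-' for unset fields
        on_watchlist = any(query == d or query.endswith("." + d) for d in watchlist)
        first_label = query.split(".")[0]
        random_looking = (len(first_label) >= min_len
                          and label_entropy(first_label) > entropy_threshold)
        if on_watchlist or random_looking:
            hits.append((rec.get("id.orig_h"), query))
    return hits

# Hypothetical excerpt of a dns.log (real logs have many more columns).
SAMPLE_LOG = (
    "#separator \\x09\n"
    "#fields\tts\tuid\tid.orig_h\tquery\n"
    "1354000000.0\tC1\t10.0.0.5\twww.example.com\n"
    "1354000001.0\tC2\t10.0.0.7\tupdate.bad-c2.example\n"
    "1354000002.0\tC3\t10.0.0.9\tq8x7zk2mv9tr4pw.info\n"
)

records = parse_zeek_tsv(SAMPLE_LOG.splitlines())
for host, query in suspicious_queries(records, watchlist={"bad-c2.example"}):
    print(host, query)
```

Entropy is only a heuristic for algorithmically generated domains; CDN and tracking hostnames can trip it too, so hits are leads for investigation rather than verdicts.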

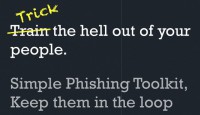

For social threats to the campaign, we had a lot of success training people with something called the Simple Phishing Toolkit. It’s a web application designed to let you run your own spear phishing campaigns, and we ran it against our own staff and employees.

It’s a pretty frightening thing to actually use, because once you use it, you realize how effective this stuff can be. In our first attempts within the campaign, I think we had a 25% click rate on attachments and about a 12% rate of people entering their credentials into the fake websites we had put up.

We registered a random Barack Obama-related domain and had people go to a website there, fill in their email, name and password, and click Enter. We recorded the people who actually entered their information and then made them take extra training: we would walk up behind them at their desk and say, “Hey, remember that email you clicked on? You’ve got to come with us and do some extra training now.”
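
The click and credential rates quoted above come down to simple bookkeeping over the exercise results. A minimal sketch of that tally, assuming a hypothetical `PhishResult` record per recipient (the Simple Phishing Toolkit’s own reporting is not shown here):

```python
from dataclasses import dataclass

@dataclass
class PhishResult:
    employee: str
    clicked: bool                 # opened the link or attachment
    submitted_credentials: bool   # typed a password into the fake site

def summarize(results):
    """Compute exercise rates and the list of people due for extra training."""
    if not results:
        raise ValueError("no results to summarize")
    total = len(results)
    clicked = sum(r.clicked for r in results)
    submitted = sum(r.submitted_credentials for r in results)
    return {
        "click_rate": clicked / total,
        "credential_rate": submitted / total,
        # Anyone who clicked or entered credentials gets the follow-up visit.
        "needs_training": sorted(
            r.employee for r in results if r.clicked or r.submitted_credentials
        ),
    }

results = [
    PhishResult("alice", clicked=True, submitted_credentials=True),
    PhishResult("bob", clicked=True, submitted_credentials=False),
    PhishResult("carol", clicked=False, submitted_credentials=False),
    PhishResult("dave", clicked=False, submitted_credentials=False),
]
print(summarize(results))
# {'click_rate': 0.5, 'credential_rate': 0.25, 'needs_training': ['alice', 'bob']}
```

Tracking these two rates per exercise is what lets you show the drop the talk describes by the end of the campaign.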

It ended up working: by the end of the campaign we had a much lower hit rate on those exercises. I think it worked out pretty well, and it was kind of fun to be the evil guy tricking your own people into clicking stuff. In our online chat channels, when we launched something, we’d suddenly see people pop up and say: “Hey, I got this really weird email, did you guys get this?” And others would reply: “No, I didn’t get that. What is it?” And it would point to, like, “Barack Obama” spelled with zeros instead of Os, asking for their login information. Or people would email me in a panic, saying: “I clicked it, I clicked it, I put in my name and password, and I know I did something wrong. What do I do now?” And that’s pretty satisfying.

The other general thing is just keeping people in the loop. If you have a particular threat you’re worried about, I think it’s a mistake to hold that information back. It’s always better to tell your staff that you’re worried about something specific happening and, when something is making the rounds, walk people through it and give them all the details you can.

Campaigns are really interesting. They’re a lot like corporate enterprises and face many of the same threats, but they have some idiosyncrasies I haven’t seen anywhere else. Take Bring Your Own Device: the campaign wants to spend as little money as possible, so if it can save money by having you bring your own laptop, it’s happy to let you do that. Same thing for phones or USB drives.

We had rapid growth: when I joined the campaign we had about 100 people, and within six months we had over 500 people in the headquarters alone. That doesn’t include field offices or volunteers, so that’s really rapid growth. We also had a lot of volunteers coming through. These were people who had been vetted in some way, with background checks and that type of thing, but they didn’t go through the same training as staff, so a lot of people came in and out of the office. We also had media coming in all the time and people doing interviews constantly.

We also had a very young workforce. The technology team was unusual in that we all had experience in our fields, because the campaign needed people who could come in and immediately start building web applications and writing code. But much of the rest of the campaign was made up of younger people who had just graduated from college and didn’t have years of experience, and that degree of youth in an organization is something I hadn’t seen before. People were basically living their entire lives online, social media was really popular, and controlling those messages became a challenge.

In terms of cloud security, we used AWS, Amazon’s cloud platform, for almost everything. I think the most powerful security tool in AWS is AWS itself. If you have the opportunity to design your applications from scratch to take advantage of this kind of architecture, you have some really interesting opportunities to build security in from the ground up. Things like Auto Scaling groups, where machines get deployed automatically based on load, present a lot of interesting security challenges, but opportunities as well.

We ended up deploying Snort as an IDS on every instance. It gets a bit heavy in terms of resource usage, but it was great to have insight into what kind of activity each machine was seeing. ModSecurity is, of course, a great web application firewall for Apache. And again, Nmap is one of my favorite tools of all time.

Application security was a big part of what I did at the campaign. We ran a very quick secure development lifecycle: when a project started, I would sit down with the team and discuss its implications for security and privacy. We’d talk about how they would address those issues and set milestones later in the process where we would check in, so I could answer any questions they had.

Then, prior to deployment, we would do a code review or an application assessment, typically both, with the code on one screen while you actually test the application on the other. Burp is my all-time favorite web application security tool. It costs a bit of money, but I think it’s worth it. It’s a great interactive web application proxy, so if you haven’t checked it out, I really recommend it.

GitHub was our code repository of choice, and I think that really helps with code reviews, because you can see incremental changes and who owns which code, meaning who wrote what. If you know one developer is really bad at sanitizing SQL queries, you can focus on his code rather than on someone you know is doing things pretty well. I think the combination of all that was pretty effective (see left-hand image above).
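
For the SQL sanitization issue mentioned above, the usual fix is a parameterized query rather than hand-escaping. The sketch below uses Python’s built-in `sqlite3` module as a stand-in for the MySQL databases the campaign ran; the `volunteers` table and its columns are hypothetical.

```python
import sqlite3

def find_volunteer_unsafe(conn, email):
    # Vulnerable: user input is pasted into the SQL text, so a value like
    # "x' OR '1'='1" changes the meaning of the query.
    return conn.execute(f"SELECT name FROM volunteers WHERE email = '{email}'").fetchall()

def find_volunteer_safe(conn, email):
    # Parameterized: the driver passes email as data, never as SQL.
    return conn.execute("SELECT name FROM volunteers WHERE email = ?", (email,)).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE volunteers (name TEXT, email TEXT)")
conn.executemany("INSERT INTO volunteers VALUES (?, ?)",
                 [("Ann", "ann@example.org"), ("Bo", "bo@example.org")])

payload = "x' OR '1'='1"
print(find_volunteer_unsafe(conn, payload))  # returns every row
print(find_volunteer_safe(conn, payload))    # []
```

In a code review, the string-formatted query is exactly the kind of line worth grepping a known offender’s commits for.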

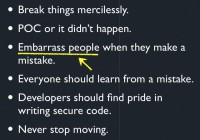

That being said, I think it always helps to just break everything you possibly can. You should have no shame when you find a problem; break it as much as you can. Developing proofs of concept for the issues you find earns you big points with developers, and also with management, because you can actually prove that something is wrong.

If possible, I like to embarrass people with my proofs of concept. My favorite thing we did within the campaign was for cross-site scripting. I developed a generic proof of concept: if I was able to include some remote JavaScript, that script would replace the page’s background image with a big dancing otter, play some music in the background, and then pop up everybody’s cookies. I would send that to the developer, and since I sat just across the room, I could see them looking perplexed at their screen. I would then send it to everybody else on the team to take a look at, because nothing motivates somebody to fix a problem more than all of their friends and peers looking at the mistake they just made. I think it’s important not to humiliate people, but embarrassment is appropriate: we used it to help everybody learn from a mistake and to keep that kind of cooperative learning going.
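
The otter proof of concept worked because user-controlled input reached the page unescaped. A minimal Python sketch of the difference output encoding makes, using the standard library’s `html.escape`; the `render_greeting_*` functions and the attacker URL are hypothetical.

```python
import html

def render_greeting_unsafe(name: str) -> str:
    # Vulnerable: the value is inserted into the HTML as-is.
    return f"<p>Hello, {name}!</p>"

def render_greeting_safe(name: str) -> str:
    # html.escape turns &, <, > and (by default) quotes into entities,
    # so injected markup is displayed as text instead of being executed.
    return f"<p>Hello, {html.escape(name)}!</p>"

payload = '<script src="https://attacker.example/poc.js"></script>'
print(render_greeting_unsafe(payload))  # the script tag survives intact
print(render_greeting_safe(payload))    # rendered as harmless text
```

In practice the template engines used with Rails and Flask escape output by default in common configurations, so bugs like this tend to show up where that escaping is explicitly bypassed.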

Along the same lines, I think it’s really important that developers are proud of the code they write. If they write really good code and you don’t find many problems with it, tell them so, and use them as a reference for others whose code isn’t as secure. Make them feel pride in what they’re doing.

If you’re responsible for the security of applications on the Internet, you always have work to do. Even if something is deployed and hasn’t had a problem in the past, you constantly need to keep up with what’s going on and research new security issues; you can never just stop.

Finally, I think volunteers are incredibly important in this kind of situation. Thankfully, at the Obama campaign we had a lot of people eager to help us out. And again, the tech community in Chicago is pretty small, and the security community is even smaller, so I invited former coworkers, friends, and people I trusted to come volunteer for a weekend and help me secure code. It was basically a hackathon: we’d bring them in, point them at some applications or IP addresses, and have them hack away. The benefit is that you get more than a single set of eyes on things, which I think is really helpful.

So, I think that’s all I had for you guys, but I’d be happy to take any questions if we have time.

Host: OK, thank you! If anyone has a question, there are three microphones in the room, so please stand up if you have a question.

Question: Hi. First of all, I’d like to say that a few weeks back I read an article comparing your work with your opponent’s on keeping systems running and preventing collapse, and you guys did a great job; kudos for that. My question is: when you work in security, one of the things you always run into is the absence, or poor quality, of security or threat assessments. Who was in charge of deciding what the likely or possible threats were, and who decided which of them should be addressed?

Ben Hagen: I think it was really advantageous to have the developer community we had. They had a very realistic view of the kinds of threats faced on the Internet, and they could integrate that into their own development lifecycle. For the organization as a whole, I’d say our Chief Technology Officer and campaign management were in charge of assigning risk to the larger threats we faced. Generally, if I found an issue, I’d bubble it up to that level, and they would make decisions based on how realistic the threat to the organization was, or the impact it could have in the near term.

Question: Were you compromised a lot? Like they said: “We understand, but it’s not going to happen.”

Ben Hagen: We warned people a lot. We weren’t aware of any actual compromise of our data or anything like that, which I think was very fortunate. But we were constantly receiving very realistic threats, and communicating them up the chain was very important.

Question: When was the internal social network most effective during the campaign, maybe in particular cities or at particular events? And what were the main security issues with it?

Ben Hagen: So, talking about the social network, we had an application called Dashboard. That was our internal social networking tool: its own social network with its own accounts, its own login system, that kind of thing. It was very effective at organizing people at the micro level. People would join the social network and find groups they had an affinity with, for example by interest, location or neighborhood, and it was very effective at cementing those relationships.

As for the social network, I think the threats we faced were mostly fraud-related or messaging-related. We had big issues with people creating fake accounts, spamming the entire board, and sending messages to lots of people. So moderation became a very important part of what we did, not with the goal of censorship, but to keep the riffraff out when they tried to cause trouble. I think that kind of fraud was the bigger issue.
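
The talk does not say how fake accounts were spotted, only that they spammed the board and messaged many people. One plausible signal is how many distinct recipients an account messages within a short window. A minimal sliding-window sketch, with hypothetical thresholds and names, that flags accounts for human moderation rather than blocking them outright:

```python
from collections import defaultdict, deque

class RecipientRateMonitor:
    """Flag accounts that message more distinct recipients than a threshold
    within a sliding time window. The limits used here are hypothetical."""

    def __init__(self, max_recipients=50, window_seconds=3600):
        self.max_recipients = max_recipients
        self.window_seconds = window_seconds
        self.events = defaultdict(deque)  # account -> deque of (timestamp, recipient)

    def record(self, account, recipient, ts):
        """Record one message; return True if the account should go to moderation."""
        q = self.events[account]
        q.append((ts, recipient))
        # Evict messages that fell out of the window ending at ts.
        while q and q[0][0] <= ts - self.window_seconds:
            q.popleft()
        distinct_recipients = {r for _, r in q}
        return len(distinct_recipients) > self.max_recipients

monitor = RecipientRateMonitor(max_recipients=3, window_seconds=60)
for t, r in enumerate(["r1", "r2", "r3", "r4"]):
    flagged = monitor.record("new_account_123", r, ts=t)
print(flagged)  # True: four distinct recipients within 60 seconds
```

Routing flagged accounts to a moderator, instead of suspending them automatically, fits the stated goal of keeping spammers out without censoring legitimate users.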

Host: We have a question from the Internet. The question is: how much did Obama himself make the job more difficult or easy? And did he request any special features?

Ben Hagen: Obama played more of an advisory role throughout the election. He was, obviously, the sitting President. Most of the decisions were made by campaign management, meaning the campaign manager and senior advisors. He did play a role in the messaging we put out, general policy and that kind of thing, but he was hands-off when it came to technology. He and campaign management let us build what we thought would solve the problems they were having. So it was great to have him as a figurehead, but in terms of day-to-day business he didn’t play a huge role.

Question: First of all, thanks for your talk about a situation where you had to lock down a network a little more. We get a lot of talks here about open networks where everyone can connect to everything, so I liked the point you presented. One thing you mentioned was internal training, tricking people into clicking on attachments. I would think that could turn an angry mob against you pretty quickly. Can you tell us about that?

Ben Hagen: I think it’s important to make it more like a game, as opposed to something that makes people vindictively hate you. We played coy about it a lot; we’d just send these things out. The training was that if you received an email you were skeptical of, you shouldn’t click on anything or forward it to anybody; you should contact the help desk immediately and get the problem resolved. You’re right that it’s a dangerous game to play with people. We kind of hid behind the fact that nobody was sure what was and wasn’t a training exercise. People knew we were actually receiving these threats, and we would send out information if a particularly wide campaign was launched against us, but we never really let people know that we were running a wide-scale exercise internally.

Question: At one point you mentioned that you used AWS extensively during the campaign, which is understandable given how dynamic the network and infrastructure were. You said something about 2000 nodes in the network. Were those servers or clients? What was the ratio between them?

Ben Hagen: Those were servers. For example, on Election Day we had scaled up to over 2000 servers in AWS across multiple availability zones, all serving our applications. As for the ratio, I’m not exactly sure what it was. We had pretty aggressive scaling limits set, so things would scale up readily. On Election Day we kind of threw caution and money to the wind and just said: “Scale everything up, get as much as we can,” so the ratio was probably still thousands of clients per machine, but we had a ton of traffic going through.

Question: And how many clients were there?

Ben Hagen: We had a lot. I think the best statement regarding that is probably what our DevOps person said: 8.5 billion requests; on a given day we had several million requests to our main websites.

Host: One more question from the Internet: how many people were volunteers and how many people were full-time employees? And did that present an additional challenge?

Ben Hagen: Sure, I think it did present a challenge, in terms of the disparity in experience and in how much time people spent on the campaign. Obviously, if you spend a lot of time there and you’re actual staff, you’re motivated differently than a volunteer. They’re both great and incredibly helpful, but reconciling that difference is certainly a challenge.

In terms of full-time staff at headquarters, I believe we had between 500 and 700 at different points in the campaign, in that one location in Chicago. Across the country the number was in the low thousands, something like 2000 or 3000 paid staff. There were a lot more volunteers, and a lot depends on what you call a volunteer. At the bigger end, people say we had 2.2 million volunteers if you count people with online accounts or people who had shared information. Realistically, it’s probably in the tens to hundreds of thousands.

Question: It would be interesting to know what technologies you used for your web applications: Python, Ruby, .NET, or something else?

Ben Hagen: We used a lot of different stuff, but probably the three most common stacks were Ruby on Rails on Apache; Python with Flask on Nginx; and a few applications in PHP, also on Apache. Back-ends were generally Amazon-provided services like RDS, which is essentially hosted MySQL.

Question: How much of your system was in the cloud? Why did you choose Amazon Web Services instead of a private cloud like OpenStack? And you talked about open source: which networking devices were you using, if you had a physical setup?

Ben Hagen: So, in terms of our footprint on the Internet and our web applications, something like 99% of it was in the cloud, almost everything. We worked with Amazon because they have the most mature offering, with a lot of different services you can use: if you need a database, they have a database; if you need queuing, they have queuing; if you need scaling, they have scaling. I think private clouds are really interesting, OpenStack is really interesting, but if you need the capacity to scale dramatically in a very short period of time, you really need to go with one of the bigger providers that already has the infrastructure built out. If you rely on a private cloud, you hit a limit at some point: your data center can go this far, but no further. Amazon lets you go essentially infinitely large, which is something we needed on occasion. As for hardware at the campaign, the security systems we set up ran on a stock server with 4 CPUs and some RAID storage. Nothing special, basically; just basic 1U servers.

Question: I assume that you had a pretty decent SIP infrastructure. I was wondering if you saw any interesting exploits that involved SIP specifically, where people were trying to exploit the phones?

Ben Hagen: We saw scanning, including SIP-focused scanning. We were actually using a lot of Microsoft’s Lync back-end for SIP, which is an interesting conglomeration of different services. Aside from scanning, I don’t think we saw anything particularly interesting.

Question: Did you see any impersonation attacks? You know, “My alternative Barack Obama site, give me your credit card numbers.” And how quickly were you able to take them down if they were hosted someplace dodgy?

Ben Hagen: We certainly did see that, and I think the Romney campaign saw the same thing. You have very limited options other than contacting law enforcement or the hosting providers to get that kind of thing taken down. We relied on the community, both the security community and places like reddit, where that kind of thing bubbles up very quickly; monitoring those sources for impersonation sites is a really great way to find them fast. Beyond that, the only options you have are to warn people or to approach law enforcement and have them take it down.
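
Monitoring for impersonation sites often starts with lookalike domains, like the zeros-for-Os trick from the internal phishing exercise. A minimal sketch that normalizes a domain’s name label (lowercasing, stripping accents, mapping a few digit look-alikes to letters, dropping hyphens) and compares it with a protected name. The substitution table and the protected name are illustrative assumptions, and treating the last two labels as the registered domain is a simplification that fails for suffixes such as `co.uk`.

```python
import unicodedata

# Illustrative digit-for-letter substitutions; real lookalike lists are much longer.
HOMOGLYPHS = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s"})

OFFICIAL_DOMAINS = {"barackobama.com"}   # assumed legitimate domain(s)
PROTECTED_NAMES = {"barackobama"}        # normalized names worth watching

def skeleton(label):
    """Normalize a domain label so visually similar spellings compare equal."""
    decomposed = unicodedata.normalize("NFKD", label.lower())
    no_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return no_accents.translate(HOMOGLYPHS).replace("-", "")

def is_lookalike(domain):
    labels = domain.lower().rstrip(".").split(".")
    # Simplification: treat the last two labels as the registered domain.
    registered = ".".join(labels[-2:])
    if registered in OFFICIAL_DOMAINS:
        return False
    name = labels[-2] if len(labels) >= 2 else labels[0]
    return skeleton(name) in PROTECTED_NAMES

for d in ["barackobama.com", "barack0bama.net", "www.barack-obama.org", "example.com"]:
    print(d, is_lookalike(d))
```

Feeding newly seen domains from community reports, or from certificate or registration feeds, through a check like this gives a shortlist to confirm by hand before contacting hosting providers or law enforcement.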

Question: What did your preparations for disaster recovery look like?

Ben Hagen: Amazon makes things interesting. We had a number of potential disaster situations come up over the course of the campaign, such as a hurricane bearing down on one of the major Amazon data centers. Preparations for that generally involved replicating as much of the infrastructure as possible into a different availability zone, essentially getting as much as possible working in another data center. For the campaign itself, we had the typical corporate disaster recovery: secure offsite storage of critical files, and recovery for individual laptops through backups of essential information, imaging of computers, that kind of thing. So nothing terribly sophisticated on the corporate side; I think the interesting disaster recovery work was with the cloud services.

Question: Could you elaborate a bit more on how you detected those fraudulent calls, where someone was using your system to make Romney calls? How did you detect that, and how did you prevent it?

Ben Hagen: Basically, we looked at the velocity of calls coming from individual users, and also at the responses they recorded. When you made a call, we asked you to record information like: did somebody answer the phone? Were they not at home? Were they a supporter, pro-Obama or pro-Romney? There are several possible answers to a call. Looking at the typical spread of answers, and at how fast people can make calls and actually get legitimate answers, gives you a lot of information about what is and isn’t a valid user. So detecting fraudulent activity becomes pretty easy as long as the fraudster isn’t using sophisticated impersonation tactics. We assumed they didn’t have insight into what typical behavior looked like, so we could rely on that more.
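
The two signals described above, call velocity and the spread of recorded answers, can be combined into a simple per-user check. The sketch below is not the campaign’s algorithm, which the talk does not detail; it assumes hypothetical outcome labels, a hypothetical baseline distribution, and arbitrary thresholds, and it measures how far a user’s answer mix strays from the baseline using total variation distance.

```python
from collections import Counter

def outcome_distribution(outcomes):
    """Share of each recorded call outcome."""
    counts = Counter(outcomes)
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()}

def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 to 1)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def flag_caller(call_log, baseline, max_calls_per_hour=60, max_distance=0.4, min_calls=20):
    """call_log: list of (timestamp_seconds, outcome) for one user.
    Returns a list of reasons; an empty list means nothing looked abnormal."""
    if len(call_log) < min_calls:
        return []  # too little data to judge
    times = sorted(t for t, _ in call_log)
    hours = max((times[-1] - times[0]) / 3600, 1 / 60)  # floor the span at one minute
    reasons = []
    velocity = len(call_log) / hours
    if velocity > max_calls_per_hour:
        reasons.append(f"velocity {velocity:.0f} calls/hour")
    distance = total_variation(outcome_distribution(o for _, o in call_log), baseline)
    if distance > max_distance:
        reasons.append(f"outcome mix far from baseline (TV distance {distance:.2f})")
    return reasons

# Hypothetical population-wide mix of recorded outcomes.
BASELINE = {"no_answer": 0.5, "supporter": 0.2, "undecided": 0.15, "opponent": 0.15}

# Normal caller: 20 calls, one every 6 minutes, with a typical outcome mix.
normal = [(i * 360, o) for i, o in enumerate(
    ["no_answer"] * 10 + ["supporter"] * 4 + ["undecided"] * 3 + ["opponent"] * 3)]
# Click-through fraud: 40 calls, 15 seconds apart, all marked "no_answer".
fraud = [(i * 15, "no_answer") for i in range(40)]

print(flag_caller(normal, BASELINE))  # []
print(flag_caller(fraud, BASELINE))   # two reasons: velocity and outcome mix
```

As the answer notes, this only works while fraudsters don’t know what typical behavior looks like; someone pacing calls and mimicking the baseline mix would pass both checks.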

Host: There is another question from the Internet, a follow-up to the previous one: how were the procedures documented, and how did you test what impact the disasters might have?

Ben Hagen: As for documentation, we tried to provide as detailed a synopsis as possible of how to actually carry out disaster recovery; step by step was the goal. That obviously doesn’t always happen, but the goal was that everybody in DevOps (development operations, the people actually deploying things to the Internet) should be able to handle any kind of emergency because it had been documented.

To determine the impact of different scenarios, we had what we called “game days”, where we set aside a weekend, or a day of a weekend, and forced developers and DevOps people to contend with random issues in their applications. For example, on a staging network or a replica environment, we would say: “Your RDS MySQL instance is down. Your application needs to be able to recover from that. Let’s try it out.” Then we’d kill the database connection, see how the application responded, and try to get as friendly a response out of it as possible, whether by failing over to another application, redirecting the user, or presenting static information. Going through that entire stack of possible problems and figuring out the most graceful way to fail was a big part of those game days, and the big goal in preparing for things like Election Day.
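
A game-day drill like this checks that an application degrades gracefully when its database disappears. A minimal Python sketch of that pattern, with `sqlite3` standing in for MySQL on RDS, a hypothetical `events` table, and a static fallback response; the killed connection is simulated by raising `sqlite3.OperationalError`.

```python
import sqlite3

def load_events(connect):
    """Return live event titles, or a static fallback if the database is unreachable."""
    try:
        conn = connect()
        try:
            rows = conn.execute("SELECT title FROM events ORDER BY title").fetchall()
        finally:
            conn.close()
        return {"events": [title for (title,) in rows], "notice": None}
    except sqlite3.Error:
        # Fail gracefully: serve static content instead of an error page.
        return {"events": [], "notice": "Live event data is temporarily unavailable."}

def healthy_connect():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (title TEXT)")
    conn.executemany("INSERT INTO events VALUES (?)", [("Phone bank",), ("Canvass",)])
    return conn

def killed_connect():
    # Game-day injection: pretend the database instance has gone away.
    raise sqlite3.OperationalError("simulated outage: database unreachable")

print(load_events(healthy_connect))  # {'events': ['Canvass', 'Phone bank'], 'notice': None}
print(load_events(killed_connect))
```

Passing the connection factory in as a parameter is what makes the failure easy to inject during a drill without touching production code paths.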