The well-known whitehat hacker and internet security researcher Moxie Marlinspike (born Matthew Rosenfeld) speaks on privacy threats at Defcon.

My name is Moxie Marlinspike, I’m from the Institute for Disruptive Studies. And I would like to take some time to talk about privacy. What I’d like to do is start by looking into the past, talking about the threats that we saw, the things that we thought were important, the projects that we thought were worth working on. And then I wanna talk a little bit about how I feel like trends have changed, and then look into the future, and talk a little bit about the things that I think might be important moving forward, things that I’m interested in working on and that maybe other people might be interested in working on.

So looking into the past, the sort of technology narrative of the 90s was largely dominated by [a clicker that doesn’t work] the Netscape Navigator web browser. When it was first introduced, it was almost revolutionary, and a lot of people moved to capitalize on that knowledge. In particular, one of the major players that wanted to protect their interests was Microsoft. When they introduced Internet Explorer, the narrative changed from just the idea of a browser to this browser war between Netscape and Internet Explorer, and we all know how the browser wars turned out.

The eavesdroppers’ problem here was that cryptography is not a banana, which is to say that it’s difficult to treat information as an object. You know, if I have a banana and share it with my friend, there is still only one banana in the world. If they then share it with a friend of theirs, there’s still only one banana in the world. However, information works differently: every time I share information, I’m copying it, and there is a chance for an exponential explosion.

This sort of fundamental dilemma was made worse by the cypherpunks’ mantra – ‘Cypherpunks Write Code’. The idea was that a lot of good work had been done in academia and research circles developing public-key cryptography and other encryption systems outside of government, but not a lot had been done to actually put it into practice. And what cypherpunks wanted was actual software that people could download and use right now to communicate securely. And so they kind of went nuts: some people moved to Anguilla – an island in the Caribbean that had very favourable laws in terms of exporting cryptography – and started writing crypto code and trying to ship it throughout the world.

There were more creative strategies: in 1995, Phil Zimmermann published a book in conjunction with MIT Press called “PGP: Source Code and Internals”. The deal was that the book was just the entire PGP source code printed in a machine-readable font, ‘cause, you know, digital representations of cryptography were weapons, but if you printed it in a book – that was speech. So they printed this whole thing in a book in a very small print run, and then, you know, just shipped it to every country in the world where they wanted to see this. And then, the people there just scanned it back in because it was a machine-readable font, and now PGP had been distributed completely legally all over the world.

So, you know, this kinda stuff continued and the strategies got more and more diverse, and cryptography got more and more ubiquitous, until 2000 when suddenly the Clinton Administration repealed all of the significant laws limiting the export of cryptography. So it sort of seemed like the game was over and that the war was won.

If you go back and look at the cypherpunk predictions about what would happen once cryptography was ubiquitous, the first prediction that they made was simply that it would become ubiquitous, that it would inevitably spread throughout the world. And this turned out to be their most prescient prediction – this was really one of the first times that we saw that information really does want to be free. But if you look at their predictions about what happened once it was ubiquitous, they were somewhat less prescient: that anonymous digital cash would flourish; that intellectual property would disappear; that surveillance would become impossible; that governments would then be unable to continue collecting taxes and that governments would fall.

If you flash forward ten years from when these predictions were made, cryptography is the thing that allows you to securely transmit your credit card number to Amazon.com so you can buy a copy of Sarah Palin’s book “Going Rogue”.

Sure, some of these ideas have been eroded somewhat, but surveillance is probably at an all-time high, while privacy is probably at an all-time low. So what happened? You know, it sort of seemed like we were gaining a victory in this war, and it seemed like we won the war. And now, here we are in this strange situation.

Well, I think part of my thesis here is that in many ways I feel like the cypherpunks were preparing for a future, and the future that they anticipated was fascism. But what we got was social democracy. And that’s not necessarily better, it’s just different. Let me give you an example. How many people in this room would feel good about a law which required everyone to carry a government-mandated tracking device with them at all times? Not even one person, right? So that’s fascism, right? That’s the fascist future. Now, let me ask you another question. How many people here carry a mobile phone? I’m guessing, actually, a hundred percent of the people in this room. And so that’s social democracy. So what is the difference between the government-mandated tracking device and a mobile phone? You know, a mobile phone is just a tracking device that reports your real-time position to one of a few telecommunications companies which are required by law to hand that information over to the government. So logistically they are the same, you know. So what is the difference? You can turn it off, but you don’t. Choice is the big difference, you know. You choose to carry a cell phone, and you wouldn’t choose to carry this government-mandated tracking device.

So let’s talk about that. Never in my wildest dreams did I think that I would have a cell phone. Why would I, you know? It’s a mobile tracking device, it’s a mobile bug that operates over an insecure protocol. Why would I want one of these? And yet, I have one and I carry it with me all the time, every day. Well, I think if we look at the way that people tend to communicate and coordinate in groups, often there are sort of informal mechanisms and channels that people use to communicate and to make plans and stay in touch. And if I introduce a more codified communications channel, there’s a well-known problem called the ‘No Network Effect’, where I invent this thing (maybe like the GSM cellular network) and I start using it, but it’s difficult to use because the value of that network is in the number of nodes that are connected to it, and if I’m the only one using it then it’s really not worth very much. If, however, I somehow manage to get everyone to start using this thing, then it becomes very useful and very valuable. But there’s an interesting side effect, which is that the old informal methods of communication and coordination are destroyed. The technology actually changes the fabric of society. I mean, there are many trivial examples of this in the mobile phone world.

We see that mobile phones have changed the way that people make plans. It used to be that people made plans, you know; they were saying “I’ll meet you on the street corner at this time and we’ll go somewhere”. And now, people say “I’ll call you when I’m getting off work”. So if you don’t have that piece of technology, you can’t participate in the way the society is communicating or coordinating. And so what actually ends up happening is now if I decide that I don’t want to participate in this codified communications channel, I’m, once again, victim to the ‘No Network Effect’, because what I’m trying to do is go be a part of a network that has been destroyed, that no longer exists. And I’m, once again, the only one using it, and I’m part of a network that has very little value.

So yes, I made a choice to have a cell phone. But what kind of choice did I make? And I think that this is the way that things tend to go now. What ends up happening is the choices turn out very simple. Do I have a piece of consumer electronics in my pocket or not? And over time, the scope of that choice slowly expands until it becomes a choice to participate in society or not. On some level today, to choose not to have a cell phone means, in some sense, to choose not to participate in society.

You know, if you think about the old world – the way the things used to work – you imagine that some Google executive tracked down the person who is maintaining this list and, you know, showed up with a briefcase of cash, and there was some shady backroom deal, hands were shaken – and, you know, ‘Google Analytics’ was removed from this list.

And so again, what they’ve done is they expanded the scope of the choice that you have to make. It used to be a very simple small choice – “Do I wanna be tracked by Google or not?”. Simple enough: you can either block the JavaScript or not block the JavaScript. Now, the choice becomes larger – “Do I want to visit this website or not?”, and that’s a much more difficult choice to make.

So why is this significant? Well, this guy’s name is John Poindexter, and he’s incidentally the guy who was found to be most responsible for the Iran-Contra scandal. He was convicted of lying to Congress but then never went to jail. And in 2001, he started a Government program called ‘Total Information Awareness’. He made a speech when he announced the program, where he said that “…data must be made available in large-scale repositories with enhanced semantic content for easy analysis”. Essentially, what he wanted to do was have the Government siphon off all email traffic, all web traffic, all credit card history, everybody’s medical records and throw it into one big sink, just put it in one big pile – don’t worry about analysing it or processing it in real time. And then, develop the technology to really efficiently mine this data, to pull out the interesting statistics, relationships and profiles that they are interested in at any point in the future. So you just collect this big sink of data and then, at any point in the future, you can go back and pull out anything that you want from it.

So this was the totalitarian future, this was the cypherpunk nightmare that, you know, they had been worried about. This is what they had been thinking about and preparing for all this time. And people freaked out – I mean, this was a significant story in the news, people were up in arms, and in fact even Congress was like “What are you guys doing?”, and eventually the program was shut down.

Now, clearly, their intent is different. They are not John Poindexter, they’re trying to sell advertising. But make no mistake about it – they are in the surveillance business; that is how they make money: they surveil people and use that to profit.

And so the effect is the same. Who knows more about the citizens in their own country, Kim Jong-Il or Google? I think it’s Google, I think it’s pretty clearly Google. So once again, there’s this question: why are people so concerned about the surveillance practices of Kim Jong-Il, or the John Poindexters of the world, and not as concerned about people at Google? Well, again I think it comes back to this question of choice, right? You choose to use Google and you don’t choose to be surveilled by John Poindexter or Kim Jong-Il. But once again, I think the scope of this choice is expanding and that it’s going to become harder and harder to make that choice as it becomes a choice between participating in society or not. I mean, already if you were to say “Well, I don’t want to participate in Google’s data collection, so I’m not gonna email anybody that has a Gmail address”, that’s probably pretty hard to do. I mean, you would be in some sense removed from the social narrative, you would be cut out from the part of the conversation that’s happening that is essential to the way the society works today.

So I would say the trends have changed. Now we’re dealing with a situation where technology alters the actual fabric of society and information, as a result, accumulates in distinct places – and the ‘eavesdroppers’ now just moved to those distinct places.

The past was really direct: we saw the ‘eavesdroppers’ trying to embed surveillance equipment into every consumer communications device. And the present is much more subtle: instead of doing that, they just moved to the few distinct places where information tends to accumulate: places like Room 641A in the AT&T WorldCom facility where the NSA has been operating the fibre optic splitters. The past was direct, you saw programs like ‘Total Information Awareness’ directly trying to take your data. And the present is a lot more subtle: it starts by soliciting rather than demanding your data, and the ‘eavesdroppers’ just moved to those points where the data collects.

So when I’m thinking about the future, the first thing that I want to think about is these choices that aren’t really choices, and I want to deal with those problems. I want to acknowledge that the choices are expanding and in some sense they are becoming demands. So some projects are along those lines.

I started by thinking, okay so what’s up with Google? The main problem is that they have an awful lot of data about you. They record everything, they never throw anything away. They have your TCP headers, they have your IP address, they issue you a cookie, they know who you are, they know where you live, they know who your friends are, they know about your health, your political leanings, your love life. They know not just about what you’re doing, but they have some significant insight into the things that you’re thinking about. They’ve also done a really good job of controlling this debate by defining the terms. They say things like, you know “We care about privacy, so we anonymize your information after nine months”. What they mean by ‘anonymize’ is drop the last octet of your IP address. That’s not anonymity, but they’ve done a very good job of being able to define that as anonymity so that they could just start throwing that word around. They also did this brilliant thing with this ‘Google Dashboard’ where they say “Oh, you know, we’re putting privacy under your control”.
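
To make the point about ‘anonymizing’ by dropping the last octet concrete, here is a minimal Python sketch. The function names and the example address (taken from a documentation-only range) are illustrative assumptions; nothing here reflects how Google actually stores or processes logs. It only shows that zeroing the last octet of an IPv4 address still narrows a user down to one of 256 neighbouring addresses.

```python
import ipaddress


def truncate_last_octet(ip: str) -> str:
    """Zero the final octet of an IPv4 address (hypothetical 'anonymization')."""
    network = ipaddress.ip_network(f"{ip}/24", strict=False)
    return str(network.network_address)


def candidate_addresses(truncated: str) -> list[str]:
    """List every address that collapses to the same truncated value."""
    return [str(addr) for addr in ipaddress.ip_network(f"{truncated}/24")]


# Example address from the 203.0.113.0/24 documentation range (an assumption).
logged = truncate_last_octet("203.0.113.77")
candidates = candidate_addresses(logged)
print(logged, len(candidates))
```

Combined with a cookie, browser headers or search history, a candidate set that small is unlikely to provide much anonymity.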

First of all, they only show you some of the information that they are most obviously capable of collecting about you. They don’t show you any of the other correlational stuff that they could easily derive about you. And the most diabolical thing about it is that to get privacy you have to be tracked, because to control your privacy using ‘Google Dashboard’ you have to stay logged in all the time and maintain a cookie. So it’s like, you know, they’ve turned the tables on you. And, you know, they have warned us: Eric Schmidt said this famous thing: “If there’s something you don’t want anyone to know, maybe you shouldn’t be doing it in the first place”. So they’ve warned us.

And lastly, we now know that the ‘Aurora’ attacks on Google were at least partially about intercept. One thing we’ve learned from those attacks is that the Government is running intercept systems on their networks, and not only that but other ‘eavesdroppers’ are trying to get access to those intercept systems. So what we are seeing is as more and more data accumulates in these places, it becomes more and more valuable. And so ‘eavesdroppers’ move to those places and even ‘eavesdroppers’ without a legal backing also try to move to those places. So I think we are going to continue to see that as a problem as these become more and more valuable over time.

So one project that I started working on is called ‘GoogleSharing’. The basic premise of ‘GoogleSharing’ is that this choice that we are given is a false choice, and that we shouldn’t accept it, we should just reject it. So what we should say is, you know, it’s not really possible for us to stop participating with Google, and so instead what we want to do is come up with technical solutions that allow us to continue to participate and still maintain our privacy.

The way it works is it’s a two-part system: a Firefox add-on as well as a custom proxy server. The add-on sits in your web browser and watches your web requests, and all of your non-Google traffic just goes directly to the Internet, totally unmolested, not modified or impacted at all. Then, if it sees a request to a Google service that does not require a login – these are things like ‘Google Search’, ‘Google News’, ‘Google Maps’, ‘Google Groups’, ‘Google Images’, ‘Google Shopping’, but not things like ‘Google Mail’ or ‘Google Checkout’ – then it shunts that traffic off to the ‘GoogleSharing’ proxy server. The ‘GoogleSharing’ proxy server maintains a collection of identities, and each identity is a unique set of HTTP headers – these are, you know, like the fingerprint of your web browser – as well as a cookie that was issued by Google. And so these are maintained in this pool and every time a request comes in, one of these identities is randomly chosen from the pool and the identifying information from the request is stripped off and the stuff from the identity is tacked on, and then it’s forwarded on to Google, who processes the request and responds to the proxy, and the information is proxied back to you. So, you know, the upshot is that Google can’t track you because these cookies are constantly moving around and your traffic does not come from your IP address. Additionally, we encrypt this first link using SSL between your web browser and the proxy – so that means that actually you can get SSL protection for services that Google does not provide SSL access to. You know, Google now has SSL protected search but none of the other stuff like ‘Maps’ or ‘Shopping’ or ‘Groups’ or ‘Images’ is SSL protected. But you get that with ‘GoogleSharing’. And how does it look? It looks exactly the same.
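
The identity-pool step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Identity` and `IdentityPool` names, the list of headers treated as identifying, and the example header values are all hypothetical and are not taken from the GoogleSharing code.

```python
import random
from dataclasses import dataclass

# Headers this sketch treats as identifying (an assumed list, not GoogleSharing's).
IDENTIFYING_HEADERS = {
    "cookie", "user-agent", "accept-language", "referer", "x-forwarded-for",
}


@dataclass(frozen=True)
class Identity:
    headers: dict  # browser-fingerprint headers belonging to this identity
    cookie: str    # cookie previously issued to this identity


class IdentityPool:
    def __init__(self, identities, rng=None):
        self._identities = list(identities)
        self._rng = rng or random.Random()

    def rewrite(self, request_headers: dict) -> dict:
        """Strip the caller's identifying headers and attach a pooled identity."""
        identity = self._rng.choice(self._identities)
        cleaned = {
            name: value
            for name, value in request_headers.items()
            if name.lower() not in IDENTIFYING_HEADERS
        }
        cleaned.update(identity.headers)
        cleaned["Cookie"] = identity.cookie
        return cleaned


pool = IdentityPool([
    Identity({"User-Agent": "ExampleBrowser/1.0"}, "PREF=pooled-a"),
    Identity({"User-Agent": "ExampleBrowser/2.0"}, "PREF=pooled-b"),
])
outgoing = pool.rewrite({
    "Host": "www.google.com",
    "User-Agent": "MyRealBrowser/9.9",
    "Cookie": "PREF=my-real-cookie",
})
print(outgoing)
```

Because a different identity may be picked for each request, consecutive requests from one person need not share a cookie or a header fingerprint. Hiding the source IP address comes from routing through the proxy and is not modelled here.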

You can use all their services – ‘Google Maps’, ‘Google News’, whatever you like – totally transparently; the only difference is that in the bottom right-hand corner there is a little status telling you that ‘GoogleSharing’ is enabled. Additionally, Google has sort of given us a win here by allowing us to make SSL protected searches, and so now what we can do is have the client pre-fetch cookies from the ‘GoogleSharing’ proxy server and then make an SSL connection directly to Google. And so now, the ‘GoogleSharing’ proxy server is proxying the data and sharing cookies and identifying information around, but cannot actually see the requests because they are SSL protected all the way to Google. So now you don’t have to trust us not to examine your requests. So anyway, this project is available online, it’s been active for about six months now, we have about 80,000 users and you can get the add-on from Googlesharing.net.

Another project that I think is interesting is this thing called ‘FaceCloak’; it was developed by Prof. Urs Hengartner from the University of Waterloo. The idea, or his basic premise, is that when you use Facebook, what you’re trying to do is share information with your friends or friends of friends. But you’re not actually trying to share information with Facebook, and there’s no reason to give them the information. And so he developed this interesting sort of proof-of-concept Firefox add-on that sits in your web browser, and anytime you type anything into Facebook you can prefix it with two ‘@’ symbols, and when you do that it will just transparently encrypt it before sending it off to Facebook. And then he has these mechanisms that allow you to really easily share keys with your friends. That way, if they are also running the add-on, then it will transparently decrypt it before displaying it. So everything works just totally the same and everything appears as normal to everybody in your friends group, but Facebook never gets the data. So I think that this is, you know, an interesting project and an interesting theory that I would like to see more of. You could possibly apply it to things like Twitter. You know, Twitter is one part broadcast and one part conversation. For the conversation, there’s no reason for Twitter to actually have the data.
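
The ‘@@’ prefix idea can be sketched as follows. This is a toy under loud assumptions: `cloak`, `uncloak` and the keystream construction are hypothetical, the cipher is a standard-library stand-in (an HMAC-SHA256 keystream with no integrity protection) chosen only so the example runs without extra packages, and keeping the ‘@@’ marker on the posted text is a design choice of this sketch. It is not FaceCloak’s actual design, and a real add-on would use a vetted authenticated cipher.

```python
import base64
import hashlib
import hmac
import os

MARKER = "@@"


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: HMAC-SHA256 over nonce plus counter (illustration only)."""
    out = b""
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        out += block.digest()
        counter += 1
    return out[:length]


def cloak(text: str, key: bytes) -> str:
    """Encrypt text that starts with '@@'; pass everything else through."""
    if not text.startswith(MARKER):
        return text
    plaintext = text[len(MARKER):].encode("utf-8")
    nonce = os.urandom(16)
    stream = _keystream(key, nonce, len(plaintext))
    body = bytes(a ^ b for a, b in zip(plaintext, stream))
    return MARKER + base64.urlsafe_b64encode(nonce + body).decode("ascii")


def uncloak(text: str, key: bytes) -> str:
    """Decrypt a cloaked post for a friend who holds the shared key."""
    if not text.startswith(MARKER):
        return text
    raw = base64.urlsafe_b64decode(text[len(MARKER):])
    nonce, body = raw[:16], raw[16:]
    stream = _keystream(key, nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, stream)).decode("utf-8")


shared_key = hashlib.sha256(b"hypothetical key shared with friends").digest()
posted = cloak("@@meet at noon", shared_key)   # what the site would store
shown = uncloak(posted, shared_key)            # what a friend's add-on displays
print(posted, "->", shown)
```

The site only ever stores the value of `posted`; friends holding `shared_key` see the original text, and posts without the marker are left untouched.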

My second thesis there is that the crypto war was largely about data freedom. At that time, you were talking about trying to get information out into the world and other people trying to prevent you from getting that information out into the world. And so it was very easy to extrapolate a future of data control from that. In those moments, it was easy to think that, well, we’re gonna be dealing with this forever, you know. And so a lot of projects were born out of that reality. A lot of the anonymity and privacy projects that we have today are things like darknets, data havens, hidden services. Does anybody here use those things? Two people. Not many, right? Because I think that’s not really the future that we got. You know, we got this other future that we’re talking about, it’s somewhat more subtle, more complicated – this social democracy.

And one thing that I’ve noticed is that privacy advocates – the people who work on these privacy projects – are really in love with ‘the other’. These are people like Iranian dissidents or Chinese dissidents in far-away parts of the world. And I think the interesting thing about it to me is if you look closely – you know, Iranian dissidents or Chinese dissidents – they really have very little in common with what privacy advocates are doing. And yet, they’re still sort of obsessed with these struggles. I guess I would suggest that it seems like that’s because these are the few places in the world that are still speaking that language of data control, that language of information freedom that all of our projects were sort of born out of. And so they just happen to sort of dovetail even though they don’t really connect with the lives of the people that are working on the projects.

And I would say that even those places are beginning to realize that the strategy of data control is not entirely effective. Iran recently announced that they were launching a national email service. You know, unlimited storage, nice web interface… But I think that even they realize that the Google strategy is more effective than their strategy of deep packet inspection and trying to lock everything down.

I also think the loss of the crypto war was less about giving up and more about changing strategies. In particular, the strategy of key escrow, I think, has eventually become a strategy of key disclosure. We’ve seen laws like RIPA in the United Kingdom and other parts of Europe that essentially say “Okay, we’re gonna let you use cryptography, we’re not gonna try to regulate that because that’s a really hard problem, we have failed to do that in the past. And so instead, we’re gonna let everybody use whatever cryptography they want but if at any point in the future we want to see what the encrypted traffic is, we’ll come to you and we’ll ask for your key. And if you don’t give it to us, you go to jail”. And so this is a problem of key disclosure. I think that this is a problem of not having forward security in the secure protocols that we’re using today.

Again, if I’m looking into the future, the first thing I wanna do is deal with the choices that aren’t really choices. The second thing I wanna do is worry a little bit less about information freedom. And the third thing is I wanna worry a lot more about forward security and this key disclosure problem. What happens when you show up at customs? You know, what happens when you’re living in the open and someone comes knocking on your door?

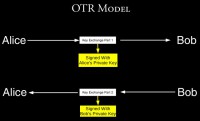

Nikita Borisov, Ian Goldberg and Eric Brewer wrote a pretty nice paper – I think in 2004 or 2006 – called “Off-the-Record Communication, or, Why Not To Use PGP”. And in this paper, they make a pretty simple observation. They say – okay, everyone’s familiar with the PGP model: you have an email you want to send to Bob, you encrypt it with Bob’s public key and you send it to Bob. The next time you wanna send an email to Bob, you encrypt it with Bob’s public key and you send it to Bob. You could do this for twenty years.

Additionally, since this session key is constantly rolling forward, the old MAC keys are constantly rolling forward as well, and every time they roll forward you can just broadcast them in the clear, and now anybody could just as likely have created an old message. So you get authenticity, but you also get deniability.
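
To make the deniability point concrete, here is a minimal Python sketch. It is an illustration under assumptions, not the OTR implementation: the helper names `tag` and `verify` and the sample messages are hypothetical, and HMAC-SHA256 simply stands in for whatever MAC the protocol uses. It shows that while a MAC key is secret a valid tag proves authenticity, and that once the old key has been broadcast anybody can produce an equally valid tag for any message.

```python
import hashlib
import hmac
import secrets


def tag(mac_key: bytes, message: bytes) -> bytes:
    """Compute a MAC over the message (hypothetical helper)."""
    return hmac.new(mac_key, message, hashlib.sha256).digest()


def verify(mac_key: bytes, message: bytes, mac: bytes) -> bool:
    """Check a MAC in constant time (hypothetical helper)."""
    return hmac.compare_digest(tag(mac_key, message), mac)


# While the MAC key is secret, only the two parties can create a valid tag,
# so the receiver knows the message is authentic.
mac_key = secrets.token_bytes(32)
real_message = b"meet at noon"
real_tag = tag(mac_key, real_message)
receiver_accepts = verify(mac_key, real_message, real_tag)

# After the session keys roll forward, the old MAC key is broadcast in the clear.
published_key = mac_key

# Now anybody holding the published key can create a valid tag for any text,
# so an old transcript no longer proves who wrote it.
forged_message = b"something that was never said"
forged_tag = tag(published_key, forged_message)
forgery_verifies = verify(published_key, forged_message, forged_tag)
```

The receiver has already checked the message by the time the key is published, so nothing is lost on the authenticity side; the published key only affects what a third party can prove afterwards.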

These are two principles that I think are going to become more and more important in the future as we roll forward.

Some projects that I’ve been working on along those lines – one is called ‘Whisper Systems’. The idea is to try and bring forward-secure protocols to mobile devices. So it sits in these two spaces: mobile devices are this place of choice that isn’t really a choice, and forward-secure protocols are this thing that’s becoming increasingly important with this new strategy of key disclosure.

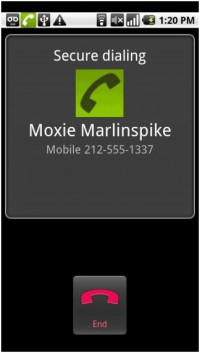

So one of the apps we have is called ‘RedPhone’, and basically it’s an encrypted voice application for mobile devices. The way it works is through VoIP. And there’s this problem – doesn’t VoIP suck? It tends to; it tends to really suck in the mobile environment. So, looking at this, we wondered – okay, what is so bad about VoIP in the mobile environment? And we realized that the problems almost always come down to the signalling layer. The way it usually works is there’s some Asterisk server out on the Internet and you do signalling through it: you have to maintain a TCP connection and then use SIP (Session Initiation Protocol) to notify the other client that you want to call them, and so on. That’s a big problem because in the mobile environment your connection status is usually pretty flaky and you are moving between networks. Maybe you still have a connection, maybe you don’t. Maybe you think you do but you don’t. Also, it doesn’t allow your device to go to sleep, ’cause you have to maintain this UDP or TCP connection, so your device can’t ever really power down, which is bad for your battery.

Well, what we realized is that the mobile environment actually has an entire signalling infrastructure already built in – the telecoms made it for us, and they use it to signal mobile devices. And so potentially we could just leverage that. Now, instead of some Asterisk server allowing you to communicate with other devices using SIP, we just use SMS, which is a signalling piece that’s already built into the mobile environment. The nice things about this are that you don’t need to maintain some constant network connection to some server, so your phone can go to sleep; you don’t need the equivalent of a Skype ID or a SIP account or something like that – you can do addressing based on your normal phone number because we are using SMS for the signalling; and the third thing is that you don’t need to run a VoIP server or set up an account or anything like that – you just install this small piece of software and now you’re ready to call anybody whose number you know.

So then the question is – okay, how do we provide mobile security? Normally, VoIP has just a simple RTP stream of voice data between two devices, and we use what’s known as a ZRTP stream – that’s a protocol that was developed by Philip Zimmermann, and it’s actually a pretty nice protocol. The way it works is that you do some ephemeral key exchange, and then from that key material you derive what’s known as a ‘Short Authentication String’. And once the call is set up, at the bottom of the in-call screen you display the ‘Short Authentication String’ – in this case, the two words ‘flatfoot Eskimo’. Now, if there’s a man-in-the-middle attack, the key material between the two devices would be different: you have one key on one side of the man-in-the-middle, and one key on the other side of the man-in-the-middle. And so these two words would be different on the two phones that are trying to talk to each other.

So what happens is now you set up a call and you just read these two words to each other (‘flatfoot Eskimo’), and if they are the same on both sides, you know that the call is authentic, and so you don’t need certificates, certificate authorities, you don’t need fingerprints, digital signatures, Web of Trust – none of that stuff. You just read the two words to each other and you know you have an authentic call. It’s also kind of fun – it’s like refrigerator magnet poetry or something like that – the two words are always like a profound haiku, you know: “Flatfoot Eskimo – yeah, that’s how I’m feeling today”. So it’s actually kinda fun to read those things to each other.
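
The two-word check can be sketched in Python as follows. This is a toy illustration under assumptions, not ZRTP itself: the Diffie-Hellman parameters `P` and `G` are far too small for real use, the 16-entry `WORDS` list is made up for the example, and the helpers `keypair`, `shared_secret` and `short_auth_string` are hypothetical names. It shows only the idea from the talk: both phones derive the words from their key material, so an honest call yields matching words, while a man-in-the-middle leaves each side with different key material.

```python
import hashlib
import secrets

# Toy Diffie-Hellman parameters (assumption: chosen for brevity, NOT secure).
P = 2**127 - 1
G = 3

# Hypothetical word list; a real list would be much larger.
WORDS = ["flatfoot", "eskimo", "banana", "puppy", "tycoon", "gazelle",
         "drumbeat", "hamlet", "klaxon", "scallion", "upshot", "vapor",
         "waffle", "yucatan", "zulu", "adroit"]


def keypair() -> tuple[int, int]:
    """Generate an ephemeral (private, public) pair."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def shared_secret(private: int, peer_public: int) -> bytes:
    """Combine our private half with the peer's public half."""
    return pow(peer_public, private, P).to_bytes(16, "big")


def short_auth_string(secret: bytes) -> str:
    """Map the key material to two words for the users to read aloud."""
    digest = hashlib.sha256(secret).digest()
    return f"{WORDS[digest[0] % len(WORDS)]} {WORDS[digest[1] % len(WORDS)]}"


# Honest call: both phones end up with the same secret, hence the same words.
a_priv, a_pub = keypair()
b_priv, b_pub = keypair()
alice_secret = shared_secret(a_priv, b_pub)
bob_secret = shared_secret(b_priv, a_pub)
alice_words = short_auth_string(alice_secret)
bob_words = short_auth_string(bob_secret)

# Intercepted call: the attacker runs a separate exchange with each side,
# so the two phones hold different key material.
m1_priv, m1_pub = keypair()
m2_priv, m2_pub = keypair()
alice_secret_mitm = shared_secret(a_priv, m1_pub)
bob_secret_mitm = shared_secret(b_priv, m2_pub)
alice_words_mitm = short_auth_string(alice_secret_mitm)
bob_words_mitm = short_auth_string(bob_secret_mitm)
```

With only 16 words in this toy list, the intercepted words could still collide by chance about once in 256 calls; a larger word list makes that correspondingly less likely.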

The other app we have right now is called ‘TextSecure’. It’s an encrypted text messaging application using a protocol that’s derivative of OTR – this thing that gives you nice forward security and deniability properties. It works just like the normal stock SMS app for Android; we’ve cloned it feature for feature. So the idea is you can install this, fully replace the default messaging app and use it to message anyone you like, and at the same time, if someone else is running this, you get an encrypted session. The way the session works is as follows: every message you exchange includes one half of a new key exchange, and so the key material that you’re using is constantly rolling forward. If someone were to record all of your SMS traffic and later try to compromise your phone and get something to decrypt all of that, they can’t, because those keys are gone.
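
The rolling key exchange can be sketched in Python like this. It is a simplified illustration under assumptions, not the TextSecure or OTR wire protocol: the `Party` class and its methods are hypothetical, the Diffie-Hellman parameters are toy-sized, and the messages themselves are left out so that only the key schedule is visible. Each "message" carries the sender's fresh public half, and the sender overwrites its old private half when it rolls.

```python
import hashlib
import secrets

# Toy Diffie-Hellman parameters (assumption: chosen for brevity, NOT secure).
P = 2**127 - 1
G = 3


class Party:
    """One end of a session whose key material keeps rolling forward."""

    def __init__(self) -> None:
        self.priv = secrets.randbelow(P - 2) + 1
        self.peer_pub = 0  # filled in when the peer's half arrives

    def public_half(self) -> int:
        return pow(G, self.priv, P)

    def roll(self) -> int:
        """Replace the private half (the old one is discarded) and
        return the new public half to attach to the next message."""
        self.priv = secrets.randbelow(P - 2) + 1
        return self.public_half()

    def receive(self, peer_pub: int) -> None:
        """Store the public half that arrived with the peer's message."""
        self.peer_pub = peer_pub

    def session_key(self) -> bytes:
        shared = pow(self.peer_pub, self.priv, P)
        return hashlib.sha256(shared.to_bytes(16, "big")).digest()


alice, bob = Party(), Party()

# Initial exchange of public halves.
alice.receive(bob.public_half())
bob.receive(alice.public_half())
alice_key_1, bob_key_1 = alice.session_key(), bob.session_key()

# Alice's next message includes a new half, so the key rolls forward.
bob.receive(alice.roll())
alice_key_2, bob_key_2 = alice.session_key(), bob.session_key()

# Bob's reply includes his new half, and the key rolls forward again.
alice.receive(bob.roll())
alice_key_3, bob_key_3 = alice.session_key(), bob.session_key()
```

After each roll, the private half needed to recompute the earlier session key no longer exists on either phone, which is why recorded traffic cannot be decrypted by compromising the device later.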

So anyway, these projects are a small hope of mine that we can come up with technical solutions to reduce the scope of the choices that we need to make. You can download all of this stuff for free – all the Internet apps and ‘GoogleSharing’ – online. Feel free to contact me, and thank you for listening to me talk.