Software engineer Daniel Selifonov taking the floor at Defcon 21 to touch upon aspects of full disk encryption, including the motivations, methods, and hurdles.

Hi! We’re here to talk about full disk encryption; why you’re not really as secure as you might think you are. How many of you encrypt the hard drives on your computer? Yeah, welcome to Defcon! So, I guess it’s, like, 90% of you at least. How many of you use open source full disk encryption software, something that you could potentially audit? Okay, not as many of you. How many of you always fully shut down your computer whenever you’re leaving it unattended? Okay, I’d say about 20%. How many of you have ever left your computer unattended for more than a few hours? A lot of hands should be up. And, obviously, another question of course is how many of you leave your computer unattended for more than a few minutes? Also pretty much everyone.

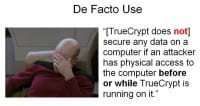

So, why do we encrypt our computers? It’s surprisingly hard to find anyone actually talking about this, which is really weird. And I think it’s really important to articulate our motivations: why we are following a particular security practice (see left-hand image). If we don’t do that, we don’t have a sensible goalpost to see how we’re doing. There are plenty of details in the documentation of full disk encryption software about what they do, what algorithms they use, and so forth. But almost nobody is talking about why. And I argue that we encrypt our computers because we want some control over our data, some assurances about the confidentiality and integrity of our data, that nobody is stealing our data or modifying it without us knowing about it. Basically, we want self-determination over our data; we want to be able to control what happens to it.

There are also situations where you have liabilities for not maintaining the secrecy of your data. Lawyers have to have attorney-client privilege; doctors have patient confidentiality; people who are in finance and accounting have all sorts of regulatory rules they need to comply with. And so, if you’re leaking data – you know, there are companies which have to notify their customers that “Oh, someone left a laptop unencrypted in a van and it got broken into and stolen, so your data might be out there on the Internet.” It’s really all about physical access to our computers and we want to protect them because, really, full disk encryption doesn’t do anything if someone just owns your machine. But it also gets to a greater point of, if we want to build secure networks, if we want to have secure intranets – we can’t do that unless we have endpoints that are secure. You can’t build a secure network without the foundations of secure endpoints.

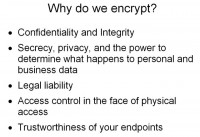

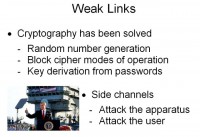

But by and large, we have figured out the theory aspects of disk encryption. We know how to generate random numbers reasonably securely on a computer; we know all the block cipher modes of operation that we should use for full disk encryption to get these sorts of nice security properties; we know how to derive keys from passwords securely. So, mission accomplished, right? We can all stand on an aircraft carrier (see left-hand image) and, you know… The answer is No, it’s not the whole story; there’s still a whole lot of cleanup that you need to do.

Even if you have absolutely perfect cryptography, even if you know it can’t be broken in any way, you still have to implement it on a real computer, where you don’t have these nice black box academic properties of your system. And so, you don’t attack the crypto when you’re trying to break someone’s full disk encryption. You either attack the computer and trick the user somehow, or you attack the user and either convince them to give you the password or get it from them by some other means, like a keylogger or whatever.

De facto use doesn’t really match up with the security models of the full disk encryption software. If you’re looking at full disk encryption software, they’re very much focused on the theoretic aspects of full disk encryption. Here’s a quote from the TrueCrypt web page, their actual documentation, that they do not secure data on your computer if someone has ever manipulated it or is manipulating it while it’s running. I wish I was making this up. Basically, their entire security model is like: “Oh, if it encrypts the disk correctly, if it decrypts the disk correctly – we’ve done our job.” (see image above)

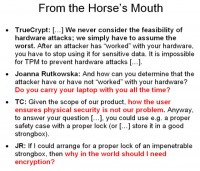

This (see left-hand image) is an exchange between the TrueCrypt developers and another security researcher by the name of Joanna Rutkowska, where she brought up this attack and tried to talk to them to see what their reaction was regarding its feasibility. And so, this is what they said: “We never consider the feasibility of hardware attacks; we simply have to assume the worst.” And she asks: “Do you carry your laptop with you all the time?” They say: “…how the user ensures physical security is not our problem.” And she asks very correctly: “Why in the world should I need encryption then?”

So, ignoring feasibility of an attack – you can’t do that! We live in the real world where we have these systems that we have to deal with; we have to implement them; we have to use them. And there’s no way that you can compare a 10-minute attack that you conduct with just software, like a flash drive, to something where you need to pull apart the hardware and manipulate the system that way. And regardless of what they say, physical security and resistance to physical attack is in the scope of full disk encryption. It doesn’t matter what you disclaim in your security model. At the very least, if they don’t want to claim responsibility of that, they need to be very clear and unequivocal about how easily the stuff can be broken.

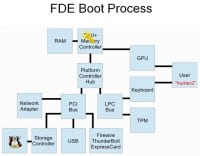

This is sort of an abstract system diagram of what is in most modern computers (see right-hand image). So, as we know, the bootloader gets loaded from the secondary storage on the computer by the BIOS and it gets copied into main memory through data transfer. The bootloader then asks the user for some sort of authentication credential, like a password or a smartcard key or something like that. The password is then transformed by some process into a key, which is then stored in memory for as long as the computer is active. And the bootloader, of course, transfers control over to the operating system, and then both the operating system and the key remain in memory for the transparent encryption and decryption of the disk. This is a very idealized view. This assumes that nobody is trying to screw with this process in any way. I guess we can all think of a few different ways where this can be broken. So, let’s enumerate a few things that might go wrong if someone is trying to attack you.
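The step where the password is "transformed by some process into a key" can be sketched as below. This is a minimal illustration that assumes PBKDF2-HMAC-SHA256 from Python's standard library; the talk does not name a derivation function, and the function name `derive_disk_key`, the salt size, and the iteration count are all illustrative assumptions.

```python
import hashlib
import os


def derive_disk_key(password: str, salt: bytes,
                    iterations: int = 200_000, key_len: int = 32) -> bytes:
    """Stretch a password into a fixed-length key.

    PBKDF2 is an assumed choice here, not something the talk specifies.
    """
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=key_len
    )


# The salt is not secret; it would sit unencrypted next to the bootloader.
salt = os.urandom(16)
key = derive_disk_key("correct horse battery staple", salt)
print(len(key) * 8, "bit key derived")
```

Whatever the derivation function, the resulting key is what ends up sitting in main memory for the rest of the session, which is the property the attacks that follow exploit.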

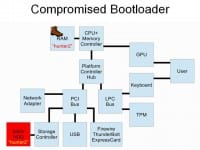

I break attacks into three fundamental tiers (see right-hand image). First off, non-invasive, which is something that you might be able to execute with just a flash drive; you don’t even need to take the system apart, or you could attach some other hardware component to it, like a PCI card, ExpressCard, or Thunderbolt (the new adapter that gives you basically naked access to the PCI bus). Secondly, we’ll consider attacks where a screwdriver might be required, where you might need to remove some system component temporarily to deal with it in your own little environment. And finally, soldering iron attacks, which are the most complicated, where you are physically either adding or modifying system components like chips on the system in order to try to break these things.

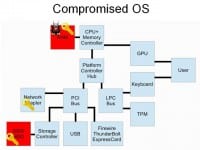

One of the first types of attacks is the compromised bootloader (see left-hand image), sometimes also known as an ‘evil maid’ attack. The system needs to start executing some unencrypted code as part of the boot process, something it can bootstrap itself with and then get access to the rest of the data that’s encrypted on the hard drive. There are a few different ways that you can attack this. You could physically alter the bootloader on the storage system. You could compromise the BIOS, or load a malicious BIOS that hooks the keyboard adapter or the disk reading routines, modifying the system in a way that’s resistant to removing the hard drive. But in any case, you can modify the system so that when the user enters their password it gets written to disk unencrypted or something like that.

You can do something similar at the operating system level (see right-hand image). This is especially true if you are not using full disk encryption, if you are using container encryption: there’s the whole operating system that someone can manipulate. This could also happen from an attack on the system like an exploit, where someone gets root on your box and now they can read the key out of main memory. That’s a perfectly legitimate attack. And that key can be either stored on the hard drive in plaintext for later acquisition by the attacker or sent over the network to a command and control system.

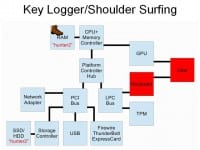

Another possibility, of course, is capturing the user input via a keylogger, be it software, hardware, or something exotic like a pinhole camera, or maybe a microphone that records the sounds of them typing so you can try to figure out what keys they pressed (see left-hand image). This is kind of a hard attack to stop because it potentially includes components that are outside of the system.

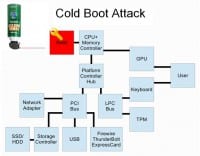

I also want to talk about data remanence attacks, more colloquially known as ‘cold boot attacks’ (see right-hand image). So, if you had asked even very security-savvy people five years ago what the security properties of main memory were, they would have told you that when the power is down you lose the data very quickly. And then an excellent paper from Princeton in 2008 showed that, actually, at room temperature you’re looking at several seconds of very little data degradation in RAM, and if you cool it down to cryogenic temperatures by, say, using an inverted can duster, you can get several minutes with very little bit degradation in main memory. And so, if your key is in main memory and someone pulls the modules out of your computer, they can attack your key by finding where it sits in main memory in the clear. There are some attempts at resolving this in hardware, like “oh, the memory modules need to be scrubbed,” but that’s not going to help you if someone takes the module out and puts it either into another computer or into a dedicated piece of hardware for extracting memory module contents.

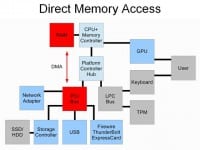

And finally, there’s direct memory access (see left-hand image). Any PCI device on your computer has the ability, in ordinary operation, to read and write the contents of any location in main memory. They can basically do anything. This was designed back when computers were much slower and we didn’t want to have the CPU babysitting every transfer between a device and main memory. So devices gained this direct memory access capability: they can be issued a command by the CPU and they can just finish it, and the data will be in memory whenever you need it.

And this is a problem because PCI devices can be reprogrammed. A lot of these things have writable firmware that you can just reflash to something hostile. And this could compromise the operating system or execute any other form of attack of either modifying the OS or pulling out the key directly. There’s forensic capture hardware that is designed to do this in criminal investigations: they plug something in your computer and pull out the contents of memory. You can do this with FireWire, you can do this with ExpressCard, you can do this over a Thunderbolt now, the new Apple adapter. So, these are basically external ports to your internal system bus, which is very-very powerful.

So, wouldn’t it be nice if we could keep our key somewhere other than RAM? Because we’ve sort of demonstrated that RAM is not terribly trustworthy from the security perspective. Is there any dedicated key storage or cryptographic hardware? There is. You can find things like cryptographic accelerators and use them in a web server so you can handle more SSL transactions per second. Certificate authorities have tamper-resistant devices that hold their top secret keys. But these are not really designed for high-throughput operations like disk encryption. So, are there any other options?

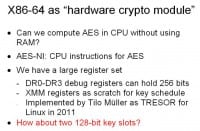

Can we use the CPU as sort of a pseudo hardware crypto module? Can we compute something like AES in the CPU using only something like CPU registers? Intel and AMD added these rather excellent new CPU instructions which actually take all the hard work of doing AES out of your hands; you can just do the block cipher primitive operations with a single assembly instruction. The question is then: can we store our key in the CPU, and can we actually perform this process without relying on main memory? We have a fairly large register set on x86 processors, and if any of you have actually tried adding up all the bits that you have in registers, it’s something like 4 KB on modern CPUs. So, some of it we can actually dedicate to key storage and scratch space for our encryption operation.

One possibility is using the hardware breakpoint debugging registers. There are four of these in your typical Intel CPU, and on x64 each of them holds a 64-bit pointer. That’s 256 bits of potential storage space that most people will never actually use. The advantages of using debug registers are, first, that they are privileged registers, so only the operating system can access them; and you also get other nice benefits, like when the CPU is powered down, either by shutting off the system or putting it into sleep mode, you actually lose all register contents, so you can’t cold-boot these. A guy in Germany, Tilo Müller, actually implemented this as TRESOR for Linux in 2011, and he did performance testing on it and it’s actually not any slower than doing your regular AES computation in software.
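The arithmetic behind "four 64-bit registers give 256 bits of storage" can be made concrete with a short sketch. It only models the packing; actually loading the values into the debug registers requires privileged (ring 0) code, which Python cannot do. The function names and the little-endian byte order are illustrative assumptions, not details taken from TRESOR.

```python
import struct


def key_to_debug_registers(key: bytes) -> list[int]:
    """Split a 256-bit key into four 64-bit values, one per debug register."""
    if len(key) != 32:
        raise ValueError("expected a 256-bit (32-byte) key")
    # "<4Q" = four unsigned 64-bit integers, little-endian (assumed order).
    return list(struct.unpack("<4Q", key))


def debug_registers_to_key(regs: list[int]) -> bytes:
    """Reassemble the key from the four register values."""
    return struct.pack("<4Q", *regs)


key = bytes(range(32))
regs = key_to_debug_registers(key)
print([hex(r) for r in regs])
```

The round trip `debug_registers_to_key(key_to_debug_registers(key))` returns the original key, so nothing is lost by keeping it in register-sized pieces.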

How about, instead of storing a single 256-bit key, we store two 128-bit keys? This gets us into more of the crypto module space. We can store a single master key which is loaded on boot-up and never leaves the CPU, and then load and unload wrapped versions of keys as we need them for additional operations.

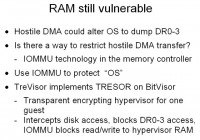

The problem is, we can have our code or our keys stored outside of main memory, but the CPU is ultimately still going to be executing the contents of memory. So, with a DMA transfer or some other manipulation, you could still alter the operating system and get it to dump the keys out into main memory, whether they are in ordinary registers or somewhere more exotic, like debug registers.

Can we do anything about the DMA attack angle? As it turns out, yes we can. Recently, as part of new technologies for enhancing server virtualization, people wanted, for performance reasons, to be able to attach, say, a network adapter directly to a virtual server so it wouldn’t need to go through the hypervisor. So, IOMMU technology was developed so that you can actually sandbox a PCI device into its own little section of memory, where it can’t arbitrarily read and write anywhere on the system. So, this is perfect: we can set up IOMMU permissions to protect our ‘operating system’, or whatever we’re using to handle keys, from arbitrary access.
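The sandboxing idea can be illustrated with a toy model. This is not how a real IOMMU is programmed (that happens through page tables set up by the hypervisor or kernel); it is only a sketch of the policy, with a hypothetical `ToyIommu` class and made-up device names and addresses.

```python
from dataclasses import dataclass, field


@dataclass
class ToyIommu:
    """Toy model: a device may only touch address ranges mapped for it."""
    mappings: dict[str, list[range]] = field(default_factory=dict)

    def map_region(self, device: str, start: int, length: int) -> None:
        self.mappings.setdefault(device, []).append(range(start, start + length))

    def dma_allowed(self, device: str, address: int, length: int = 1) -> bool:
        # The whole access must fall inside a single mapped region.
        if length <= 0:
            return False
        last = address + length - 1
        return any(address in region and last in region
                   for region in self.mappings.get(device, []))


iommu = ToyIommu()
iommu.map_region("nic", 0x1000_0000, 0x10_0000)   # the NIC's own sandbox
print(iommu.dma_allowed("nic", 0x1000_0800, 64))   # True: inside the sandbox
print(iommu.dma_allowed("nic", 0x0000_1000, 64))   # False: key-handling memory
```

A device with no mapping at all is denied everything, which is the default you want for anything that has no business reading the memory that handles keys.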

And, again, our friend from Germany, Tilo Müller, has implemented a version of TRESOR on a micro-hypervisor called BitVisor which, basically, does this. It lets you run a single operating system and it transparently does this disk access encryption; the guest doesn’t even have to care or know anything about it, which is great. Disk access is totally transparent to the OS. Debug registers cannot be accessed by the OS, and the IOMMU is set up so that the hypervisor itself is secure from manipulation.

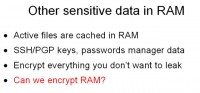

But as it turns out, there are kind of other things in memory that we might care about other than disk encryption keys (see left-hand image). There’s the problem that I hinted at earlier, where we used to do container encryption and now we all do full disk encryption for the most part. We do full disk encryption because it’s very, very difficult to make sure you don’t get accidental writes of your sensitive data to temporary files or caches in a container encryption system. Now that we are re-evaluating main memory as a not secure, not trustworthy place for storing data, we need to treat it in much the same way. We have to encrypt everything we don’t want to leak, things that are really important, like SSH keys or private keys or PGP keys or even a password manager or any ‘top secret’ documents that you’re working on.

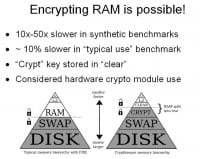

I had this really, really silly notion: can we encrypt main memory? Or at least most of the main memory where we are likely to keep secrets, so that we can at least minimize how much we are going to leak. And, once again, surprisingly or perhaps not so surprisingly, the answer is Yes (see right-hand image). A proof of concept in 2010 by a guy named Peter Peterson actually tried implementing a RAM encryption solution. It wouldn’t encrypt all RAM; it would basically split main memory into two components: a small fixed-size “clear” region which would be unencrypted, and then a larger sort of pseudo swap device where all the data was encrypted prior to being kept in main memory. It ended up being, obviously, quite a bit slower in synthetic benchmarks. But in the real world, when you ran, for instance, a web browser benchmark, it actually did pretty well: 10% slower. I think we can live with that. The problem with this proof of concept implementation was that it stored the decryption key in main memory, because where else would we put it? The author considered using things like the TPM for bulk encryption operations, but those things are even slower than a dedicated hardware crypto system, so it would just be totally unusable.

But you know what? If we have the capability to use the CPU as a sort of pseudo hardware crypto module, it’s right in the center of things, so it should be actually fast enough to do these things. So, maybe we can actually use something like this.

Let’s say we have this sort of system set up. Our keys are not in main memory and our code responsible for manipulating the keys is protected from arbitrary read and write access by malicious hardware components. Main memory is encrypted, so most of our secrets are not going to leak even if someone tries to execute a cold boot attack. But how do we actually get a system booted up to this state? Because we need to start from a turned off system, authenticate ourselves to it and get the system up and running. How do we do this in a trustworthy way? After all, someone could still modify the system software to trick us into thinking that we are running this “great new” system, but in reality we are just not doing anything.

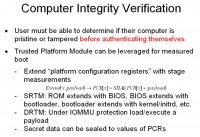

So, one of the very important topics is being able to verify the integrity of our computers (see left-hand image). The user needs to be able to verify that the computer has not been tampered with before they authenticate themselves to it. There’s a tool that we can use for this, the Trusted Platform Module. It’s kind of got a bad rap, and we’ll talk about it a little bit more, but it has the capability to measure your boot sequence in a couple of different ways, which lets you control what data the TPM will reveal to the system in particular system configuration states. So you can basically seal data to a particular software configuration that you are running on your system. There are a couple of different implementation approaches to do this, and there’s fancy cryptography to make it really hard to get around it. So, maybe we can do this.

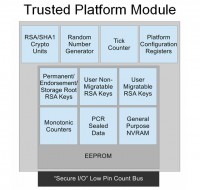

What is a TPM anyway? It was originally sort of the grand solution to digital rights management for media companies. Media companies would be able to remotely verify that your system is running in some “approved” configuration before they would let you run the software and unlock the key to your video files. It ended up being really impractical, and so nobody is actually even trying to use it for this purpose anymore. I think a better way to think about it is, really, just as a smart card that’s fixed on your motherboard: it can perform some cryptographic operations (RSA, SHA), has a random number generator, and has physical attack countermeasures to prevent someone from very easily getting access to the data that’s stored in it (see right-hand image). The only real difference between it and a smart card is that it has the ability to measure the system boot state into platform configuration registers, and that it’s usually a separate chip on the motherboard. So, there are some security implications of that.
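Measuring the boot state into a platform configuration register works by hash chaining: a PCR can't be set directly, only extended. The sketch below assumes TPM 1.2-style PCRs (20 bytes, SHA-1, starting at all zeros); the boot component blobs and the function names are placeholders for illustration.

```python
import hashlib

PCR_SIZE = 20  # SHA-1 digest length, as used by TPM 1.2 PCRs


def extend(pcr: bytes, component: bytes) -> bytes:
    """new PCR = SHA1(old PCR || SHA1(component))."""
    measurement = hashlib.sha1(component).digest()
    return hashlib.sha1(pcr + measurement).digest()


def measure_boot_chain(components: list[bytes]) -> bytes:
    """Fold every boot component, in order, into one PCR value."""
    pcr = bytes(PCR_SIZE)  # PCRs start out as all zeros at reset
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Placeholder blobs standing in for real firmware and bootloader images.
good = measure_boot_chain([b"bios image", b"bootloader", b"kernel"])
evil = measure_boot_chain([b"bios image", b"evil bootloader", b"kernel"])
print(good.hex())
print(good == evil)  # False: any change in the chain changes the final PCR
```

Data sealed to the `good` value is only released when the same components are measured in the same order, which is what makes a modified bootloader detectable.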

There are some kind of fun bits, like monotonic counters: numbers that you can only ask the TPM to increase, and whose value you can then check. There’s a small non-volatile memory range that you could use for, really, whatever you want; it’s not very big, like a kilobyte, but it could be useful. There’s a tick counter which lets you determine how long the system has been running since the last startup. And there are commands that you can issue to the TPM to make it do things on your behalf, which include even things like clearing itself, if you feel the need to.

We want to then develop a protocol that a user can run against the computer so that they can verify that the computer has not been tampered with before they authenticate themselves to the computer and begin using it. What sort of things can we try sealing to platform configuration registers that would be useful for this sort of protocol? A couple of suggestions that I have are seeds to one-time password tokens, either the time-based or the event-based variety; maybe some sort of unique image or animation, like a photograph of you somewhere, something unique that cannot be easily found elsewhere (see right-hand image). You might also disable the ‘video out’ on your computer when you are in this challenge-response authentication mode.

You also want to seal a part of the disk key, and there are a couple of reasons you want to do that. It assures, within certain security assumptions, that the system is only going to be booting into some approved software configuration that you control as the owner of the computer. Ultimately, that means that anyone who wants to attack your system needs to do it either through breaking the TPM or they need to do it within the sandbox that you’ve created for them. This, of course, is not very cryptographically strong or anything like that; you’re not going to have a protocol which allows a user to securely authenticate a computer to the same level of security that you have, say, with AES. But unless you can do something like RSA encryption in your head, it’s never going to be perfect.

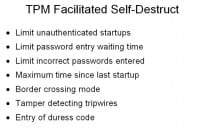

I mentioned that there’s a self-erase TPM command that you can issue in software. Since the TPM requires the system to be in a particular configuration before it will release secrets, you can actually do something interesting, like self-destruct (see left-hand image). You could develop the software and set up your protocol to limit, say, the number of times the computer can be started up unsuccessfully, have a time-out once the computer has been waiting on the password screen for some period of time, or limit the number of passwords you can enter or the amount of time since the computer was last started up, say, if it’s been in ‘cold storage’ for a week or two. You could also restrict access to the computer for periods of time, so that when you’re going to be traveling to a foreign country you can lock down your computer for the duration of the trip; when you’re at the hotel or whatever on the other end, then you can unlock it, but not before.
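A pre-boot policy of this kind could be evaluated roughly as follows. This is only a sketch of the decision logic; the `BootPolicy` fields, the thresholds, and the three outcomes are hypothetical, and a real implementation would keep its counters in TPM storage and issue the TPM clear command rather than return a string.

```python
from dataclasses import dataclass


@dataclass
class BootPolicy:
    max_failed_attempts: int            # unsuccessful start-ups tolerated
    max_seconds_since_last_boot: float  # "cold storage" limit
    locked_until: float                 # Unix time; 0 means no travel lock


def evaluate(policy: BootPolicy, failed_attempts: int,
             last_boot: float, now: float) -> str:
    """Return 'destroy', 'locked', or 'proceed' for this boot attempt."""
    if failed_attempts >= policy.max_failed_attempts:
        return "destroy"
    if now - last_boot > policy.max_seconds_since_last_boot:
        return "destroy"
    if now < policy.locked_until:
        return "locked"
    return "proceed"


two_weeks = 14 * 24 * 3600
policy = BootPolicy(max_failed_attempts=3,
                    max_seconds_since_last_boot=two_weeks,
                    locked_until=0)
print(evaluate(policy, failed_attempts=0, last_boot=1_000_000, now=1_000_600))
```

The travel lock corresponds to setting `locked_until` to the expected arrival time before leaving.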

You could also do fun things like leave little canaries on the disk which appear to contain the critical values for your policy but are really just tripwires, while you’re really just using the internal TPM values. You could also create a self-destruct password or duress code to automatically issue this reset command. Since the two options that an attacker would have would be to break the TPM or run your software, you can kind of make them play by these rules and you can actually do an effective self-destruct. The TPM is intentionally designed to be very hard to copy; you can’t clone it very easily. So you could use things like monotonic counters to detect write blockers and any disk restore or replay attacks. And once the TPM ‘clear’ command is issued, it’s game over for an attacker who might want to get access to your data.
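The monotonic counter trick for catching a restored disk image can be sketched like this. `ToyMonotonicCounter` merely stands in for the TPM's counter, and the on-disk state is modeled as a plain dictionary; both are illustrative assumptions, and in practice the stored value would have to live inside the encrypted volume so it cannot be edited.

```python
class ToyMonotonicCounter:
    """Stand-in for a TPM monotonic counter: it can only go up."""

    def __init__(self) -> None:
        self._value = 0

    def increment(self) -> int:
        self._value += 1
        return self._value

    def read(self) -> int:
        return self._value


def save_state(counter: ToyMonotonicCounter, disk: dict) -> None:
    """On every legitimate write of the policy state, bump the counter."""
    disk["counter_at_last_write"] = counter.increment()


def disk_is_fresh(counter: ToyMonotonicCounter, disk: dict) -> bool:
    """A restored or write-blocked disk lags behind the TPM's counter."""
    return disk.get("counter_at_last_write") == counter.read()


tpm_counter = ToyMonotonicCounter()
disk = {}
save_state(tpm_counter, disk)
snapshot = dict(disk)              # attacker images the disk here
save_state(tpm_counter, disk)      # a failed guess gets recorded afterwards
print(disk_is_fresh(tpm_counter, disk))      # True
print(disk_is_fresh(tpm_counter, snapshot))  # False: the replay is detected
```

Because the counter lives in the TPM and cannot be wound back, rolling the disk back to the snapshot does not give the attacker their guesses back.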

There are some similarities to a system that Jacob Appelbaum discussed at the Chaos Communication Congress many years ago, in 2005. He proposed using a remote network server for many of these options but admitted it was going to be brutal and kind of potentially difficult to use. Since the TPM is an integrated system component, you can get a lot of these advantages by using the TPM instead of a remote server. A hybrid approach is potentially possible. You could have a system set up, say, as in an IT department, where you temporarily lock down the system and it can only become available again once you plug it into the network and call your IT administrator, and they unlock the system again. I’m kind of hesitant to expose a network stack this early in the boot process, just because it massively increases your attack surface. But it’s still a possibility.

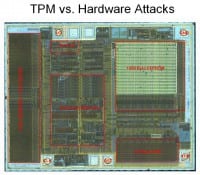

I’ve sort of qualified all my statements that an attacker can only do this – that’s of course under the assumption that they cannot break the TPM very easily. This (see right-hand image) is actually an optical microscope scan of a TPM, or smart card done by Chris Tarnovsky. He has actually done some really great work in figuring out how hard these things are to break. He has enumerated the countermeasures and sort of figured out what it would take to actually break these things, and actually he has gone and done it and tested it. So, there are things like light detectors, there are active meshes, there are all sorts of really crazy circuit implementations to try to throw you off the track of what it’s actually doing.

But if you spend enough time and have enough resources and you’re careful enough, you can actually get around most of these, so you can de-encapsulate a chip, put it in an electron microscope workstation and go wild, find where the unencrypted data bus is and just glitch it and get the thing to spill out all the secret data. But nonetheless, this sort of attack, even once you’ve done all the R&D, is going to take hours with an expensive microscope, and you still have to spend a lot on R&D up front to figure out what the countermeasures are on the chip so that you can actually break it without frying the one chip of your attack target.

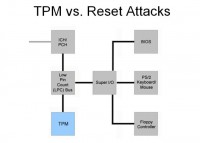

There are also reset attacks (see left-hand image). I mentioned earlier that the TPM is a separate chip on the motherboard in almost all cases. It’s very, very low in the system hierarchy. It’s not up in the CPU like it is for DRM enforcement in video consoles. If you manage to reset it, you are not going to irreversibly affect the system that badly. It’s usually a chip that’s off the LPC bus on a computer, which itself is sort of a legacy bus that’s off the southbridge. On modern systems, really, the only sorts of things you are going to find on it are the TPM and things like the BIOS and legacy keyboards; we used to have floppy controllers, but I guess not really anymore. And if you find a way to reset, say, the Low Pin Count bus, you will reset the TPM into a ‘fresh system boot’ state. You’ll lose your PS/2 keyboard, but that’s not really a big deal. And you’ll be able to play back the trusted boot sequence that the TPM has data sealed to without actually executing that boot sequence, and you could use this to extract data.

There are a couple of attacks that have tried to exploit this. If you are using an older mode in the TPM called Static Root of Trust for Measurement, you can do this pretty easily. I have not seen any research on a successful attack against the newer Intel Trusted Execution Technology version of the TPM activation. It’s likely still possible, and this is an area that probably needs more research, to intercept the LPC bus and what it’s communicating to the CPU; that might be another way that you can attack the TPM.

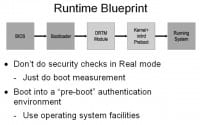

So, let’s look at a blueprint (see right-hand image) of what I think we should have for getting a system from a cold boot up to the point where we have our running trustworthy configuration. There are a lot of really vulnerable legacy system components in the PC architecture. You can do all sorts of things in the BIOS, like hooking the interrupt vector table and modifying disk reads and writes, or capturing keyboard input, or masking out CPU feature registers. There are plenty of options when you want to mess with people. In my opinion, you really want to get out of BIOS controlled mode, out of real mode and into protected mode, as soon as possible, and really, just do your measurement stuff.

So, once you get into this ‘pre-boot’ mode, which is really just your operating system, like a Linux initial RAM disk, then you start executing your protocol and start doing these things. I mean, once you’re using operating system resources, what someone does at the BIOS level as far as interrupt tables go doesn’t really affect you anymore. You really don’t care. And you can do sanity checks on your registers: if you know you’re running on a Core i5, you know it’s going to support things like the No-eXecute bit and debug registers and other stuff that people might try to mask out in capability registers.
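Such a sanity check could look roughly like this from a Linux pre-boot environment. The sketch parses text in the `/proc/cpuinfo` format and compares the reported feature flags against what the known CPU model should offer; the particular required set (`nx`, `de` for debugging extensions, `aes` for the AES instructions) and the function names are illustrative assumptions.

```python
def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Extract the feature flags from /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        name, _, value = line.partition(":")
        if name.strip() == "flags":
            return set(value.split())
    return set()


# Features this particular machine is known to have (assumed list).
REQUIRED = {"nx", "de", "aes"}


def missing_features(cpuinfo_text: str, required: set[str] = REQUIRED) -> set[str]:
    """Anything returned here was masked out, or this is not our CPU."""
    return set(required) - parse_cpu_flags(cpuinfo_text)


sample = "model name\t: Example CPU\nflags\t\t: fpu de nx aes\n"
tampered = "model name\t: Example CPU\nflags\t\t: fpu de\n"
print(missing_features(sample))            # set()
print(sorted(missing_features(tampered)))  # ['aes', 'nx']
```

On a real system the text would come from reading `/proc/cpuinfo`, and a non-empty result would be a reason to refuse to continue the boot protocol.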

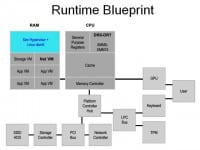

So, here (see left-hand image) is the runtime blueprint: what we actually want the system to look like once we’re in the running configuration. There was a previous project, TreVisor, which implemented many of the security aspects of doing disk encryption using CPU registers and having IOMMU protections on your main memory. The problem is that BitVisor, which it is built on, is a very specialized, not very commonly used program. Xen is sort of like the canonical open-source hypervisor, where there’s a lot of security research going on and people are making sure it’s not broken. In my opinion, we should use something like Xen as the bare-metal hardware interface, and then use a Linux dom0 administrative domain on it to actually do your hardware initialization.

Again, in Xen all of your paravirtualized domains are actually running in non-privileged mode, so you don’t actually have direct access to things like the debug registers. So, that’s one thing that’s already done. Xen exposes things like hypercalls that give you access to this sort of stuff, but it’s something you can disable in software.

So, the approach that I’m taking (see right-hand image) is we’ll do that master key approach in debug registers; we’ll dedicate two debug registers, the first two, to store a 128-bit AES key, which is our master key. This thing never leaves the CPU registers once it’s entered in by the process that takes the user credentials. And then we use the second two registers as virtual machine specific ‘whatevers’ – they could be used either as ordinary debug registers or, in this case, to encrypt main memory. In this particular case, we still need to have a few devices that are directly connected to the administrative domain. That includes the graphics processing unit, which is a PCI device; you know, the keyboard, the TPM – all this stuff needs to be directly accessible. So you can’t really apply IOMMU protections on these.
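To make the arithmetic concrete: on a 64-bit x86 system each debug register holds 64 bits, so two of them hold exactly one 128-bit key. A minimal sketch of that split, assuming a little-endian layout (the byte order and the function names are choices made for this example, not taken from the released code):

```python
import struct


def key_to_register_words(key: bytes):
    """Split a 128-bit key into two 64-bit words, one per debug register."""
    if len(key) != 16:
        raise ValueError("expected a 128-bit (16-byte) key")
    # "<QQ" = two unsigned 64-bit integers, little-endian (assumed layout).
    return struct.unpack("<QQ", key)


def register_words_to_key(dr0: int, dr1: int) -> bytes:
    """Reassemble the 128-bit key from the two 64-bit register values."""
    return struct.pack("<QQ", dr0, dr1)


# Usage: round-trip a dummy key through the two "register" values.
dummy_key = bytes(range(16))
dr0, dr1 = key_to_register_words(dummy_key)
assert register_words_to_key(dr0, dr1) == dummy_key
```

In the real system this happens in privileged code with the actual registers, of course; the point here is only that the key fits with no bits to spare, which is why the remaining two registers are all that is left for per-VM use.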

But things like the network controller, storage controller, arbitrary devices in the PCI bus – you can set up IOMMU protections on those so that they have absolutely zero access to administrative domain or your hypervisor memory spaces. You can get access to things like the network by actually putting things like the network controller into dedicated virtual machines (see right-hand image). These things get the devices mapped but have IOMMU protection set up, so that device can only access the memory space of this virtual machine.

You can do the same thing with your storage controller and then actually run all of your applications in virtual machines that have absolutely zero direct hardware access (see left-hand image). So, even if someone owns your web browser or sends you a malicious PDF file, they don’t actually get anything that would let them seriously compromise your disk encryption.
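The isolation rule described here is essentially default-deny: a device can touch only the memory window of the virtual machine it has been assigned to. A toy model of that rule, just to show the logic (the class, the device names, and the addresses are all made up for illustration; a real IOMMU works on page tables in hardware):

```python
class ToyIommu:
    """Toy model: each device may only DMA into windows explicitly assigned to it."""

    def __init__(self):
        self._windows = {}  # device name -> list of allowed address ranges

    def assign(self, device, start, length):
        """Allow `device` to access [start, start + length)."""
        self._windows.setdefault(device, []).append(range(start, start + length))

    def dma_allowed(self, device, addr, length=1):
        """True only if the whole access falls inside one assigned window."""
        if length <= 0:
            return False
        last = addr + length - 1
        return any(addr in w and last in w for w in self._windows.get(device, []))


# Usage: the network controller is mapped only into its own VM's memory.
iommu = ToyIommu()
iommu.assign("nic", 0x1000, 0x1000)          # hypothetical network-VM window
print(iommu.dma_allowed("nic", 0x1800, 16))  # inside its own VM
print(iommu.dma_allowed("nic", 0x0, 16))     # hypervisor/dom0 memory: denied
```

A device with no assignment at all gets nothing, which matches the "absolutely zero access" goal for arbitrary devices on the PCI bus.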

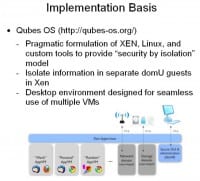

I can’t take the credit for that architecture design. It’s actually the design basis for the really excellent project called Qubes OS (see right-hand image). They basically describe themselves as a pragmatic formulation of Xen, Linux and a few custom tools to do a lot of the stuff I just talked about. It implements these non-privileged guests and does a nice unified system environment. So it feels like you’re really running one system, but it’s actually a bunch of different virtual machines under the hood. I use this as the base for my implementation.

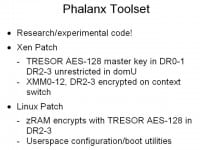

And so, the tool I’m releasing – it’s, really, proof-of-concept experimental code; I call it Phalanx (see right-hand image). It is a patched Xen to do the implementation of the disk encryption stuff that I’ve described. Master key in the first two debug registers, second two debug registers – totally unrestricted. For security reasons, the XMM registers which are used as scratch space are encrypted, as well as the key, when you’re doing some VM context switches. And I’ve also done a very simple implementation of crypto using zRAM, because – hey, it’s been mainlined, it does pretty much everything except crypto, so adding encryption on top of it, just a really tiny little bit of code, is great. The most secure code is the code you don’t have to write. The nice thing about zRAM is that it gives you a bunch of the bits that you need to securely implement things like AES counter mode.
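For readers unfamiliar with counter mode, here is a rough sketch of its structure. Two loud caveats: the keystream function below uses SHA-256 as a stand-in so the example runs with only the Python standard library (it is not AES, and not what Phalanx does), and the `page_index` and `generation` inputs are hypothetical, since the talk doesn't say which zRAM fields feed the counter block.

```python
import hashlib


def _keystream_block(key, page_index, generation, counter):
    """Stand-in for AES_encrypt(key, counter_block); NOT real AES."""
    counter_block = (
        page_index.to_bytes(8, "little")    # which memory page (hypothetical input)
        + generation.to_bytes(4, "little")  # bumped on every rewrite (hypothetical)
        + counter.to_bytes(4, "little")     # 16-byte block number within the page
    )
    return hashlib.sha256(key + counter_block).digest()[:16]


def ctr_crypt(key, page_index, generation, data):
    """Counter mode: XOR data with a keystream; the same call encrypts and decrypts."""
    out = bytearray()
    for offset in range(0, len(data), 16):
        ks = _keystream_block(key, page_index, generation, offset // 16)
        out.extend(b ^ k for b, k in zip(data[offset:offset + 16], ks))
    return bytes(out)


# Usage: encrypt a page, then decrypt it with the same parameters.
key = b"k" * 16
page = b"secret page contents" * 3
ciphertext = ctr_crypt(key, 7, 1, page)
assert ctr_crypt(key, 7, 1, ciphertext) == page
```

What matters for security is that the counter block never repeats under the same key, which is why the sketch mixes in both a page identifier and a per-write generation number.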

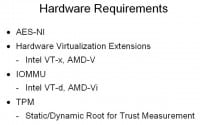

Hardware-wise, you do have a few system requirements (see left-hand image). You need a system that supports the AES New Instructions, which are reasonably common, but not every system has them. Chances are, if you have an Intel i5 or i7 – almost all of them support it. But there are some oddballs, so check out Intel ARK to make sure it supports all the features you need. Hardware virtualization extensions – these have been very common since around 2006. IOMMU is a little bit more complicated to find if you are looking for a computer. It’s not listed as a sticker specification, you kind of need to dig for it; and there are a lot of people who should know better but don’t – about what the difference is between VT-x and VT-d and so forth. So, you might need to hunt for a system that supports this stuff. And you want a system, of course, with a TPM, because otherwise you can’t implement this measured boot thing at all. Usually, you want to be looking at business-class machines, where you can verify this sort of stuff exists. If you look for Intel TXT, it will have almost everything you need. The Qubes team actually keeps a really great hardware compatibility list on their Wiki, which has details for a lot of systems that do this sort of stuff.

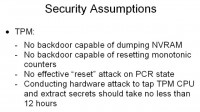

So, security assumptions: in order for the system to be secure, we have a few assumptions about a few of the system components (see right-hand image). The TPM, of course, is a very critical component for assuring the integrity of the boot. You need to make sure that there’s no backdoor capable of dumping NVRAM or manipulating monotonic counters or putting the system into a state where it’s not actually trusted (we just think it is). Based on the remarks by Tarnovsky who has reverse-engineered these chips, I’m sort of setting a bound of roughly 12 hours of exclusive access to the computer that’s required if you want to do a TPM attack on it to pull out secrets.

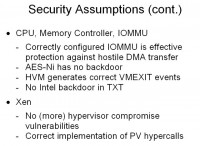

There are a few assumptions about the CPU, memory controller, and IOMMU, mainly that they are not backdoored and they are correctly implemented. Some of these might not necessarily be very strong assumptions, because Intel could easily backdoor some of these things, and we have no way of finding out. And some of the security assumptions about Xen: it’s a piece of software that actually has a very good security record, but nothing is perfect and occasionally there are security vulnerabilities. In the case of Xen, given its privileged position in the system, that’s actually kind of a big deal and you really want to make sure that it’s secure.

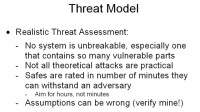

And so, under those security assumptions, we have a sort of framework for a threat model (see right-hand image). We want to do a realistic threat assessment where we realize that not every system is unbreakable, especially when there are so many legacy components that were designed without any consideration of security. But at the same time, also, not all theoretical attacks are practical and you can’t lump very simple attacks with difficult, complex hardware attacks. And I think a good analogy is thinking about safe security.

We all know that every safe can eventually be broken; it’s a question of how much time you have to reverse-engineer and break it. But eventually it can be broken. I think we need to think about our systems in the context of having physical security defenses in terms of hours rather than minutes that we have right now. And, as always, if I’ve screwed up, if I’ve made an assumption that you don’t think holds – prove it, verify it, verify mine, make sure I’m right, or wrong.

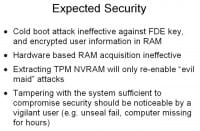

Expected security – this (see right-hand image) is what you’ll actually get. Cold boot attack is not going to be effective against keys, and stuff that you have in main memory is going to be restricted by whatever you have in clear. Hardware based RAM acquisition is not going to be effective because they are going to be IOMMU sandboxed to nothing, so they are not actually going to get your application state or your system state. And even if you manage to extract the secrets out of the TPM, all it’s going to do is get you back to where we are right now, where, although it’s easily broken, you still go all the way down to zero. And I’m setting an assumption here that if you have a good security habit policy, which is reasonable, say, 12 hours of no contact to the computer, you should be okay. As long as you’re reasonably vigilant and not excessively vigilant, you should be okay.

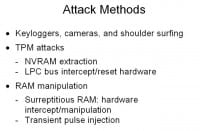

A couple of attack methods (see left-hand image) which are really the main ones that I would use if I were trying to break into a system. Keyloggers are still going to be very much not defended against. You can do some mitigation of this by using one-time tokens, but it’s still, again, going to be imperfect. TPM attacks, as I mentioned before – either NVRAM extraction or LPC bus intercept/reset hardware. Find some way of tricking the TPM into getting into a configuration that it thinks is trustworthy but is actually not. And RAM manipulation – if you have something which looks like RAM, quacks like RAM, acts like RAM but isn’t actually RAM, it pretends to be RAM for most of the time, but once you manipulate it externally, then there’s really nothing you can do, because the attacker would be able to manipulate the contents of the system, no problem. You could also try things like transient pulse injection, which is how George Hotz broke the hypervisor security on the PS3.

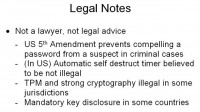

Now, real quick, some legal notes (see right-hand image); I’m not a lawyer, obviously, and this is not legal advice. As far as I know, if you have self-destruct, it is not illegal yet, but there’s been no legal test case of this; might be interesting to find out, but I’m not sure I’d want to be that test case either. It’s illegal in certain jurisdictions; you can’t use the TPM in, say, China; you can’t use the TPM in Russia. And some countries, like the United Kingdom, have mandatory key disclosure. You will go to prison if you do not hand over your key – that’s RIPA, the Regulation of Investigatory Powers Act.

Future work and improvements (see left-hand image): a production version, a stable version – right now it is not stable. If you put your computer to sleep it will eat your data, among a couple of other problems – I’m working on it. And there are some other things that might be fun to do in the future. OpenSSL keys are really important, so if there’s some API that would, basically, let you swap out the contents of memory very quickly, your exposure time is very small.

Conclusions: the best security in the world goes unused if it’s unusable (see right-hand image). The model needs to account for realistic use patterns. And it’s not just disk encryption; you really need to think about it holistically from the perspective of the whole system. It’s challenging to do this, but I think it’s possible and we should try. Thank you!