This article highlights the issues raised at the Florida State University lecture for “Offensive Security” regarding SSL and TLS protocols, namely their background, infrastructure, flaws and known crypto attacks.

The outline for today’s talk: we’re going to go over SSL and TLS and cover their history, their flaws, the important attacks, and I guess the lessons learned or ignored from all of those faults. Then we’re going to go over attacks: sslstrip is what I want to show in the demo, along with sslsniff and some other crypto attacks that came out very recently.

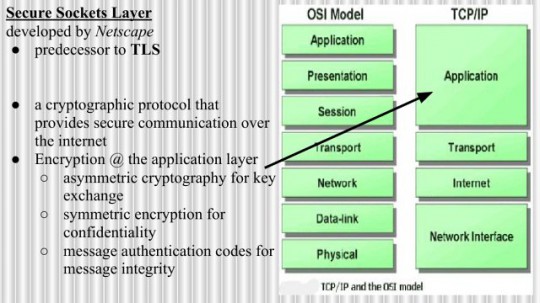

So, SSL is Secure Sockets Layer. It was developed by Netscape. It is a predecessor to TLS. It’s essentially a cryptographic protocol that provides secure communication over the Internet. The encryption is done at the application layer. I list the TCP/IP model as opposed to the OSI model. I guess, conceptually, the encryption in the OSI model would occur at the session layer, and perhaps in the presentation layer, because the browser handling of SSL also provides that little presentation of either that lock on the URL bar or that green little segment to the left – basically the same: “This is Microsoft verified, signed by VeriSign, this is a valid certificate, your browser trusts this”.

Essentially, there are three parts to it. There’s an initial handshake to set up SSL, and then there’s a second handshake that uses asymmetric cryptography to establish a session key. The session key is a symmetric key. The third and last part of SSL is the final handshake, which begins the rest of the communication for the session over a symmetrically encrypted channel using that session key. For the remainder of the communication it also uses message authentication codes to provide message integrity.

TLS is Transport Layer Security. It’s defined in RFC 5246. It is seen as the successor to SSL, whereas it’s actually derived from an earlier version of SSL. It is, basically, in theory, the same as SSL conceptually. It’s basically a cryptographic protocol that provides secure communication over the Internet, and the encryption happens at the application layer. It uses asymmetric cryptography to establish a key, and that key is a symmetric key, and that key is used to provide encryption to establish confidentiality for the rest of the communication. It also uses message authentication codes for message integrity.

These two things are used everywhere. They’re used in web browsers, they’re used in email; apparently, Wikipedia says they’re used in Internet faxing – I’m not sure if that still exists; they’re used in instant messaging, they’re used in Voice over IP and other things.

SSL came from the early 1990s, basically the dawn of time. It was developed by the engineers at Netscape, who were tasked with providing a protocol for making secure HTTP requests. At the time very little was known about secure protocols. There were no best practices, and there were no good academic examples along the lines of: “Here’s a protocol we’ve established, here’s an attack, here’s how we defended against it, here’s an attack against that defense”, and so on.

Also they were under intense pressure to get the job done, facing common deadlines that we face today. They had to make a lot of 4am decisions. And this gave us SSL. It’s actually really amazing that it lasted this long and still works as well as it does. But now the fundamental system engineered back in the 90s faces serious problems with authenticity. We’re seeing, because of various events, a really diminishing trust; hackers are much smarter than they were back in the 90s; and we also now know much more about how to secure things.

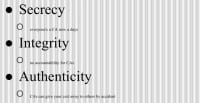

So, what does a secure protocol need to provide? It needs to provide secrecy – in other words, someone can’t eavesdrop and find out the contents of your communication. It needs to provide integrity – meaning a malicious man in the middle can’t manipulate your traffic, flip bits here and there, change a plus sign to a minus sign – perhaps, instead of you taking money from him, having money go from you to the attacker. And it also needs to provide authenticity – in other words, a message sent from Alice is definitely sent from Alice. This is, basically, non-repudiation and authenticity.

Here’s the basics to the SSL/TLS handshake (see right-hand image). The following is sent in plaintext: the client’s SSL version number, his cipher settings and his session data, along with some other stuff. The server responds with something very similar. The server responds with its SSL version number, its cipher settings, its session data, as well as its public key certificate.

The client uses the provided certificate to authenticate the server’s identity. If this fails, the user is warned that an encrypted and authenticated connection cannot be established. Often browsers will pop up a warning saying: “I cannot authenticate this certificate, or this is a self-signed certificate that doesn’t stem from one of the root certificates that we trust in our browser settings”. Usually users proceed anyways.

At this point, using the public key certificate, some encrypted communication begins. And this is supported by asymmetric key encryption. Essentially, depending on how the cipher settings are chosen, the details here can vary, but essentially the handshake here establishes a shared symmetric key. And then the remainder of the communication uses that shared symmetric key to provide confidentiality and uses message authentication codes to provide integrity.
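The integrity half of that last step can be sketched with Python's standard `hmac` module. This is only an illustration: the random `session_key` stands in for the key the handshake would establish, and the message text is made up.

```python
import hashlib
import hmac
import os

# Hypothetical session key; in real SSL/TLS it comes out of the handshake.
session_key = os.urandom(32)


def tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(tag(key, message), received_tag)


message = b"transfer +100 to alice"
mac = tag(session_key, message)

# A man in the middle flips the plus sign to a minus sign.
tampered = message.replace(b"+", b"-")

untouched_ok = verify(session_key, message, mac)
tampered_ok = verify(session_key, tampered, mac)
print(untouched_ok, tampered_ok)
```

Without the session key the attacker cannot produce a matching tag for the altered message, so the receiver rejects it.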

The choice of protocol version is made at the very beginning; it’s influenced by the user’s browser version and that browser’s settings. Maybe it’s an old version of Firefox and it doesn’t support the latest SSL and TLS. By default the two sides usually choose the most recent version that both of them support. So if the server supports versions 1, 2 and 3, and the client supports 1 and 2, but not 3 yet, they’re going to choose 2.
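That selection rule fits in a few lines. This is a simplified sketch: the function name and the plain integer version numbers are assumptions for illustration, not how a real handshake encodes versions.

```python
def negotiate_version(client_versions, server_versions):
    """Return the highest version both sides support, or None if there is none."""
    common = set(client_versions) & set(server_versions)
    return max(common) if common else None


# The example from the talk: server supports 1, 2 and 3; client only 1 and 2.
chosen = negotiate_version(client_versions=[1, 2], server_versions=[1, 2, 3])
print(chosen)
```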

HTTPS uses these certificates. Essentially, the certificate authorities say: “This key that was provided by the server belongs to this website and the browser does trust it”.

Like I said, implementation details can vary, and they can definitely vary over versions. And so, the client authenticates the server based on the server certificate. Usually the server does not require the client to authenticate itself. There’s some other mechanism behind that, like there’s a login prompt on the page that the user authenticates through – that’s totally sufficient. There are versions of SSL and TLS that require mutual authentication, but they are not very commonly encountered by the average web user.

All of this is basically automated to not involve the user, unless there is a certificate of unknown origin signed by unknown certificate authority or chain or certificate authorities that can’t be traced to a set of certificate authorities that the browser trusts.

So let’s talk about this issue of trust (see right-hand image). Essentially, at some point, it was decided that trust is just too hard for the normal user to think about. So the browser vendors decide, basically, the trust for you. It’s pretty nice of them. Let’s look at the trust decided for you. This is just using the certificate information and my version of Chrome on my home machine, just a default setup of Chrome. Default setup of Chrome ships with 40 trusted root certificate authorities and 25 intermediate certificate authorities that are trusted to sign certificates. And then users can always add their own certificates, and sometimes the default option is just to click Yes.
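You can take a rough look at the trust decided for you on your own machine with Python's standard `ssl` module. This sketch inspects whatever store the local OpenSSL build uses, which is not Chrome's list, and on some platforms certificates load lazily, so the count may read as zero.

```python
import ssl

# Default client-side settings, including the platform's default CA certificates.
ctx = ssl.create_default_context()

# By default the server certificate must validate and match the hostname.
requires_valid_cert = ctx.verify_mode == ssl.CERT_REQUIRED
checks_hostname = ctx.check_hostname

# Counts of loaded certificates; "x509_ca" is the number flagged as CAs.
stats = ctx.cert_store_stats()
print("certificate required:", requires_valid_cert)
print("hostname checked:", checks_hostname)
print("CA certificates loaded so far:", stats["x509_ca"])
```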

Let’s think about this. Personally, I don’t even know 40 people that I would trust with that information. I certainly wouldn’t trust more than 3 people in my life with my banking information. And so, 40 organizations in the world are securing your banking data. Think about that for a second.

I should mention that there was a point where there was only one root certificate authority, VeriSign. Back in the day the Internet was small and there was one root CA. And the model worked, the model made sense: you have one guy that everyone trusts and he’s supposed to have, basically, top secret security, impossible to hack. And it all makes sense, it all works.

But now we have hundreds of certificate authorities, and that’s not necessarily a problem in itself, because we’re approaching a billion IP addresses that are used for websites. You can’t expect one single entity to police a billion different websites to make sure that: “Hey, they’ve kept the private key secure, they’re acting legitimately, they’re playing by the rules”. That’s actually infeasible, that’s impossible.

So, before we go further into that, we need to cover, basically, what certificates are. Certificates are basically built on a public and private key pair. This is, essentially, asymmetric key cryptography. The public key certificate, commonly referred to as just the certificate, is a digitally signed statement that binds the value of that public key to the identity of the person, device or service that holds the corresponding private key.

It’s usually supported through X.509 standard certificates. It’s important to note that these were designed back in the 80s. As a result, the X.509 standard is commonly perceived to be messy. They’re also designed to be very flexible and very general. Throughout the years since it was introduced, people have exploited its generality and its flexibility to make certificates behave in totally whacky ways that no one actually intended them to behave. Like I said, in the 1990s there was not a vast body of knowledge on how to properly secure protocols. So, take it 10 years earlier – they never foresaw any of these problems occurring. And yet, we still use it.

The art of, basically, binding the value of the public key to the identity of the person, service or device holding its private key comes down to, essentially, signing (see right-hand image). Certificates can be used to sign two different things. One is to provide secure communication that offers non-repudiation and authenticity. In other words, Bob sent this message, I can prove that Bob did send this message. Bob can’t claim: “Hey, I didn’t send that message”. So it’s non-repudiation, and the first part that I said is I can prove Bob sent that message, so that’s authenticity.

The private key that goes with a certificate can be used to sign a message, and the signature is verified with the public key. A private key can also be used to sign other people’s public keys, and this is how certificate chains are established in a chain of trust – essentially, trust relationships. So, in essence, VeriSign signs this certificate for Microsoft and your browser sees that: “Hey, VeriSign trusts Microsoft, I trust VeriSign, thus I trust Microsoft”. And this forms the foundation of the public key infrastructure.
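To make signing and verifying concrete, here is a toy sketch using textbook RSA with tiny primes. It is completely insecure and is not how real certificates are signed (no padding, trivially factorable modulus); the key values and the message are made up for illustration.

```python
import hashlib

# Textbook RSA with tiny primes, for illustration only.
p, q = 61, 53
n = p * q                            # public modulus
e = 17                               # public exponent
d = pow(e, -1, (p - 1) * (q - 1))    # private exponent (needs Python 3.8+)


def digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def sign(message: bytes) -> int:
    """Only the holder of the private exponent d can compute this."""
    return pow(digest(message), d, n)


def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public values (n, e) can check the signature."""
    return pow(signature, e, n) == digest(message)


# A hypothetical CA signing a statement about someone else's public key.
statement = b"public key 0xABCDEF belongs to www.example.com"
signature = sign(statement)

genuine_ok = verify(statement, signature)
forged_ok = verify(statement, (signature + 1) % n)   # a guessed signature
print(genuine_ok, forged_ok)
```

The browser's side of a chain of trust is the `verify` call: it holds the CA's public key and checks the CA's signature on the site's certificate.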

Let’s talk about the public key infrastructure (see right-hand image). It is, essentially, a set of hardware, software, people, policies and procedures needed to create, manage, distribute, use, store and revoke digital certificates. Now, I should note, before I get all doom and gloom for the rest of the hour, that certificate blacklists exist and they work. They only work if the blacklists are properly checked, though; some browsers may not be configured to do that as frequently as they should.

So this is the topology for general public key infrastructure (see right-hand image). The most important part of it is the certificate authority. It is responsible for binding the public key with the respective users’ identities. The user identities must be unique, in theory, whilst in practice we see that not being the case; unfortunately, more often than we desire. And the CA uses its own private key to sign the user’s public key to say: “Hey, I trust this user”.

The validation authority, shorthanded as VA, is supposed to be a third party that exists to provide and vouch for user information. It validates that user A is who he says he is. It is involved in the registration and issuance of these certificates.

The registration authority exists to ensure that the public key is bound to the individual and to no one else. This ensures non-repudiation.

Certificate authorities can sign the public key for other certificate authorities (see right-hand image), saying that: “I, VeriSign, or I, Mozilla, trust this organization to establish other trust relationships in the form of acting as a certificate authority itself”.

It shouldn’t work in reverse; the model should totally fail in reverse; you should not have trust cycles. Trust cycles, when you try to validate a chain of trust, can cause an infinite loop, depending on the implementation. Also they completely break the model; it just makes things not work. It logically falls apart. So this should never occur.
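A validator can protect itself against trust cycles by remembering which certificates it has already visited while walking up the chain. The sketch below uses a made-up issuer map of names instead of real certificates and signatures, so it only illustrates the loop-avoidance logic.

```python
# Hypothetical set of roots the "browser" trusts.
TRUSTED_ROOTS = frozenset({"Root CA"})


def chain_is_trusted(subject, issued_by, trusted_roots=TRUSTED_ROOTS):
    """Walk from subject up through its issuers until a trusted root is reached.

    issued_by maps each certificate name to the name of whoever signed it.
    """
    seen = set()
    current = subject
    while current not in trusted_roots:
        if current in seen:
            return False          # trust cycle: stop instead of looping forever
        seen.add(current)
        issuer = issued_by.get(current)
        if issuer is None or issuer == current:
            return False          # unknown issuer, or untrusted self-signed cert
        current = issuer
    return True


good_chain = {"www.example.com": "Intermediate CA", "Intermediate CA": "Root CA"}
cycle = {"site.example": "CA A", "CA A": "CA B", "CA B": "CA A"}

print(chain_is_trusted("www.example.com", good_chain))
print(chain_is_trusted("site.example", cycle))
```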

However, if you have enough money, it can, and if you find the right CA that is willing to do shady stuff. I won’t say it on the record here, but there are some CAs out there that will sell, basically, some form of their root certificate if you’re worth at least 5 million dollars, and some other stuff.

So, here’s the process, if you own a website, to get a certificate:

1. I ask the certificate authority to issue a certificate in my name.

2. The certificate authority validates my identity and then issues the certificate.

3. I then present the certificate validating my identity to the user who wants to access my website.

4. The user doesn’t know me, so he asks the certificate authority to verify my identity, because that certificate authority is named in the certificate I provided to the user.

5. The certificate authority checks that my certificate is valid; in other words, it hasn’t been altered since the website owner got it, it hasn’t expired, and it passes some other checks, such as not having been revoked.

6. After validating the certificate provided to the user, certificate authority tells the user that that certificate is valid.

7. And finally, the user now trusts the website.
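The checks in step 5 can be sketched as follows. Everything here is a simplified assumption: the `Certificate` class is hypothetical, and a plain SHA-256 fingerprint stands in for the CA's signature (a real certificate carries a signature that only the CA's private key can produce).

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


def fingerprint_of(subject: str, public_key: bytes, not_after: datetime) -> str:
    """Stand-in for a CA signature over the certificate contents."""
    data = subject.encode() + b"|" + public_key + b"|" + not_after.isoformat().encode()
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Certificate:
    subject: str
    public_key: bytes
    not_after: datetime
    fingerprint: str   # recorded at issuance


def issue(subject, public_key, not_after):
    """Step 2: the CA issues the certificate."""
    return Certificate(subject, public_key, not_after,
                       fingerprint_of(subject, public_key, not_after))


def problems(cert, revoked_subjects, now):
    """Step 5: return the list of reasons the certificate is not valid."""
    found = []
    if fingerprint_of(cert.subject, cert.public_key, cert.not_after) != cert.fingerprint:
        found.append("altered")
    if now > cert.not_after:
        found.append("expired")
    if cert.subject in revoked_subjects:
        found.append("revoked")
    return found


now = datetime(2012, 1, 1, tzinfo=timezone.utc)
good = issue("www.example.com", b"hypothetical-key", now + timedelta(days=365))
altered = Certificate(good.subject, b"attacker-key", good.not_after, good.fingerprint)
expired = issue("old.example.com", b"hypothetical-key", now - timedelta(days=1))

print(problems(good, set(), now))
print(problems(altered, set(), now))
```

An empty list corresponds to step 6: the certificate is reported as valid and the user goes on to trust the website.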

So, let’s talk about getting a certificate for your website. The average website owner isn’t as sophisticated as the people in this room. They aren’t as aware of the security issues that users and website owners typically face on the Internet. When shopping for a certificate, back in the day there was one certificate authority, VeriSign. They could charge whatever they wanted, and they made bank.

Competition was introduced, and so then the question was posed: “Who do I trust more to be my CA, party A or party B, and what is it worth to me? How do I put a value on that trust? Is there some sort of metric I can use to say that the level of trust I need should only cost this much money?” It’s a very difficult question. It’s an extremely vague question, because the definition of trust can really vary from person to person.

There are a lot of websites out there that allow you to compare the certification services provided from CA to CA. The details here can vary from 128-bit encryption keys to 256-bit encryption keys to 512 to 1024 and so on. However, in most of the columns it’s not really spelled out; there’s just some vague value of low or high that’s not really scientifically measurable and is used to describe the assurance level.

Now, to a normal website owner that doesn’t really mean that much. It doesn’t mean that much to us as scientists either. I might consider 2048-bit encryption keys to be low assurance for my needs. In essence, the website owner has to make a decision, and it’s usually driven primarily by how much money I’m willing to spend. Maybe next year I’ll be able to afford a better one, but for now I’m not willing to pay 570 dollars per month to secure my website.

In essence, getting a certificate means forking over money, providing identifying info about yourself to the CA, and promising to play nice. This entire transaction boils down to your buying their trust. You can manipulate it and interpret it any other way you want, but it always boils down to your buying their trust.

There are some instances where research is done on each person who registers for a certificate from a CA, to make sure the bad guys are not getting them. That usually does happen. But if they can’t find any bad stuff about you, it generally comes down to a decision that as long as you pay the money, you get your certificate. There may be some standards. For some enterprise certificates you may have to prove that you’re an actual company that exists in a physical location, with an office building, servers, staff and personnel, and that is worth at least a certain amount of money as a company, perhaps in publicly traded stocks and assets. So there are restrictions. But at the end of the day you’re buying their trust as long as you meet the standards.

So, how do website owners decide? Really, the user doesn’t notice any difference. When was the last time any of you clicked on the certificate information for a website you visited to see it was signed by the exact same certificate chain and root CA as the last time? After all, these guys own a business selling trust, they are the ones securing the Internet, so they must all be trustworthy. So, why not buy the cheapest? And this is, essentially, a common decision that has shaped the nature of the public key infrastructure market. The market can’t decide that’s the actual value. There really can’t be any hard assignment of this much trust is worth this much dollar. So, naturally, market forces drive it to: the people who offer trust for the cheapest get the most customers.

So, who can become a certificate authority? Any ideas? You, me, anyone really. What’s the problem here? The problem is when you visit a website and they are their own certificate authority and they sign their own certificate, they’re self-signed, you get a warning. The warning says: “This is a self-signed certificate.” Probably 90% of users proceed anyways.

So, the problem with being a CA is that you have to get someone to trust you. Now you already got 90% of all people who trust you anyways. But where are we going to get the last 10%?

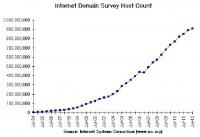

We need to take a step back and look at the big picture and look at the hurdle of securing the Internet. So, this is a recent graph (see right-hand image) taken from the Internet Systems Consortium, and we’re approaching 1 billion Internet domains. They’re definitely not all secured by SSL. If you took 1 billion and multiplied it by the price of the cheapest certificate authority that’s probably not free – say it costs 50 bucks. That’s a 50 billion dollar industry. It’s definitely not that big, and it’s a huge price to pay for securing the Internet. And then you get into the details that some people want to be more secure than others, they want more money, etc. So it’s a fuzzy matter.

Back in the day, when there was one certificate authority the task of securing the Internet was easier, definitely, than it is today. We can’t expect a handful of certificate authorities to secure 1 billion identities to make sure that each one of the one billion applicants is who they actually say they are, they don’t have a bad background, they actually exist, their company actually exists in a physical location, they have staff, and after they get a certificate, they don’t just dissolve everything because it was all fraud. So it requires constant monitoring. This is a hard problem.

When CAs started to sign other CAs, we saw an explosion of trust. Essentially, what happened is a network that looks like this (see right-hand illustration). You have a root CA, it signs for an intermediate CA, and in this case you can all be certificate authorities – join the party, it’s the cool thing to do. Meanwhile, that old root CA was like: “I remember being a CA before it was cool.”

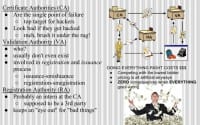

This is how it works in practice (see left-hand image). Certificate authorities, especially the root CAs, are the single point of failure in the system. They are a top target for hackers. And they look really bad if they get hacked, so they just brush it under the rug if that happens, sometimes. Validation authorities – who? In practice, given the number of certificate authorities out there, they probably don’t even exist most of the time, probably not even a task of an intern working at that CA. Now, the registration authority – probably sometimes that work is done by an intern working at CA. It’s supposed to be a 3rd party, the same as validation authority, and that intern probably just keeps an eye out for bad things when he’s not getting coffee.

The problem is, doing everything right costs money, and you’re always competing with the lowest bidder, because market forces are driving all the customers towards the lowest cost service. The price is completely artificial anyway. What is trust? How do you put a value on trust? How do you actually tie it to some monetary value? It’s as if trust were printed on paper, exchangeable for the trust I would have in someone protecting my family, the trust I would have in the police, the trust I would have in my bank to have proper physical security, guards and insurance to ensure that if they get robbed, all my money isn’t lost. How do you put a value on that? It’s all artificial. And as we will see, there are absolutely zero consequences most of the time when everything goes wrong. So this is like one of the best businesses to get into, at least if you’re a CA. There are some instances we’ll talk about where everything went wrong and they went completely bankrupt.

Let’s talk about the blues (see right-hand image). In the 1980s the X.509 certificate standard was designed. It was designed to be flexible and general. That was great at the time. Now it’s horrible. It was also back then, and still is now, ugly as hell. And there is a long history of implementation vulnerabilities because it’s a messy standard. Messy standards are hard to implement because they’re hard to understand. Developers are not all created equal: each has a different skill set and a different way of looking at things logically, and they usually come out with a slightly different understanding of the concept, problem or model. So this leads to implementation differences, which often lead to vulnerabilities.

Then in the 1990s SSL was conceived by Netscape, and I strongly urge you all to do the required reading, which is just to watch a 30-minute video, the Defcon talk given by Moxie Marlinspike on how broken SSL is. He actually tracked down the engineering team that put SSL together and found one of the guys, basically the core team leader, who hadn’t worked there for the past 15 years. So he found him in the phone book, called him and interviewed him.

I am paraphrasing at this point; he asked him about SSL and certificate authorities and how that all was thought up. And the guy said: “It was all actually a bit of a hand wave.” These are not the security vulnerabilities you’re looking for.

Fast forward to today: in 2009 there were three major vulnerabilities that affected the whole world just due to certificate authorities’ mistakes: “Whoops, I published my private key in my public_html directory…”

In 2010, due to efforts and research done by the Electronic Frontier Foundation, there was growing evidence of governments compelling certificate authorities to do their bidding. If a certificate authority exists in Russia, the Russian government can compel that certificate authority to do its bidding: “Hand over your private key.” In China – the same thing, the US – the same thing. Now it’s actually becoming common for governments to own and run their own certificate authorities. And these are making it into the list of authorities trusted by your browser.

Let’s have a little intermission and peruse this nice diagram provided by the SSL observatory, this is run by the EFF – it’s a charitable organization all about Internet security, rights and defending vulnerability researchers and hackers. They are really interesting.

This PDF (www.eff.org/files/colour_map_of_CAs.pdf) is a map of… remember that explosion of CAs? This is the resulting explosion of CAs from just two of those forty root certificates. This is CAs, they’ve resulted from a chain of trust stemming from either Mozilla or Microsoft, and it is massive, and arrows are going everywhere. And there are some interesting players in this list. I’m surely never going to find them here in the demo. There’s Ford Motor Company, there’s something just called Government CA – I don’t have to scroll far to get some really weird ones. Then there is TxC3Cx9 – what? Do you trust that person? Would you trust that person securing your bank account? How about C=AT, ST=Austria – right? How about x code? I mean, that looks legit. And somehow VeriSign is signed by Mozilla and Microsoft. I don’t understand how it works. Self sign?

It’s right here on the EFF front page. Right here they say: “And you can also peruse our second map of 650-odd organizations that function as certificate authorities that are trusted directly or indirectly by Mozilla or Microsoft.” Let’s follow this source and see. Hexagon is a root CA trusted by Microsoft only and Microsoft and Mozilla products; this is correct. So this is 650 people or entities that are out of the box with Mozilla and Microsoft products you are set up to trust.

Let’s see if we can find some more interesting ones. A lot of European ones; Buypass? As you can see, it’s kind of a mess. And to say to that average user that you are expected out of the box to trust these people, it can be seen as kind of preposterous. I certainly don’t even trust 40 people to act as root CAs.

However, the problem is with narrowing it down. The Internet has grown into a totally international thing. Back in the day VeriSign signed the certificates for the majority of sites, all in the US or all in North America. So there were very few international political problems. And so we see in this list that there are obviously foreign certificates that your browser is set up to trust. The customers of Microsoft and Mozilla are all over the world. People running websites in, say, Russia probably wouldn’t trust a certificate authority that’s stationed in the US and could potentially be subject to US law and coercion. And very similarly, some customers in Russia would not trust certificate authorities established even in Russia. The same goes for the US – many Americans wouldn’t trust anything signed by anything involving the US government or that could be involved with the US government. So, about that trust: it’s a bit of a mess.

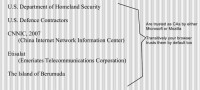

So, here (see right-hand image) are some interesting certificate authorities on this list: U.S. Department of Homeland Security, some US defense contractors, CNNIC, which stands for China Internet Network Information Center, Etisalat, which is a major telecommunications corporation run in the UAE – I think they are the fifth largest telecommunications corporation in the world; and also the Island of Bermuda. The Island of Bermuda can secure the Internet. That’s interesting.

That brings me to scoping issues (see right-hand image). Maybe DHS should just sign for the sites in the US, and likewise, state run certificate authorities in China should just sign for sites in China. However, like I mentioned, naturally there’s going to be citizens that don’t trust certificate authorities right in their own country. I mean, and you can’t fault them; everyone’s entitled to their own opinions, and things are different around the world.

So, about securing the Internet: it’s kind of messy. What are we to do, really? I mean, as a browser vendor, I would have to have it set up so customers around the world would be able to use the Internet in the same way as everyone else. So I’d have to have a diverse set of certificate authorities that the browser will trust, so things don’t get broken in Germany, so things don’t get broken in South America, and so on.

And on top of that there could be policies and laws in nations that require certificate authorities to be used in certain ways. Like, as a citizen of Germany you can’t use certificate authorities existing in these countries. Or you must use certificate authorities in this list. So you have to be mindful of those potential policies.

Once you start adding international laws to the mix, it becomes a mess. To give a perspective on how absolutely insane international cyber law is – this is off the top of my head because I don’t know any specifics – many North African countries, as well as Middle Eastern countries, came together and signed a treaty, or rather a pact, stating that if one of their citizens breaks the cyber law of another member country, that citizen can be extradited for prosecution there. What if you have a religious law based on profanity and decency, and it extends to cyberspace? Your daughter’s spring break pictures might land her in a Turkish prison, or some other prison. That’s off-topic, but that’s just to give you a perspective of how crazy this can get.

So, about securing the Internet. Let’s go over some important certificate authority attacks (see right-hand image). Now in this first slide I used attacks in finger quotes, because the following people that we know about so far were just able to attain major certificates without any hacking.

Mike Zusman obtained a certificate for login.live.com, which is run by Microsoft, because he just asked for it. He didn’t provide any fraudulent information; they were just like: “Ok, here”. There was no VA or RA in the mix; it was a certificate already provided to an existing user, and they gave it to that Mike Zusman guy. And all he was doing was security research. Apparently he didn’t have to do much research at all, really, to prove that: “Hey, this system doesn’t work”.

Eddy Nigg was able to do the same with Mozilla.com. No validation authority stopped him. He was simply investigating unethical CA practices and basically hit the jackpot on his first try. You can read about it there.

Then, at some other point, VeriSign unknowingly issued a code signing certificate in the name of Microsoft Corporation to unknown hackers, who still have not been identified today. This allowed them to sign kernel mode drivers, Windows updates, applications, etc.

Now let’s talk about actual attacks (see right-hand image). A really noteworthy one was in 2011: RSA got hacked. It’s not really SSL, but RSA sold this service called SecurID. In essence, it’s providing the same thing SSL does: a secure communications protocol that provides secrecy, integrity and authentication. And in this model there’s one root certificate authority, RSA.

Now, at the time this totally made sense. As a Fortune 500 company I don’t want to use the SSL system, and I can probably afford the best service for my corporation. Everyone in this room realizes that yes, the SSL system is broken, and when there is only one signer it’s easier to lock down and easier to trust. So this became a popular product. However, when RSA was hacked, SecurID was compromised, and, essentially, a massive hack hit 760 companies. It hit 20% of the Fortune 100 list, so 20 companies in the Fortune 100. It also hit many more of the Fortune 500. It hit Google, Facebook, Microsoft.

… Which brings me to Comodo (see right-hand image). The reason I’m going to talk about Comodo so much is solely because of the drama. When Comodo got hacked in March 2011, it was a big deal. They came out and news reports stated that Comodo suspects that it was hacked by Iran. It’s a pretty bold claim; attribution when getting hacked is hard. People can be behind proxies, IPs can be spoofed, people can move around, people can hack others and frame them. So they outright claimed that they believed that Iran hacked them. And they published the IP address for the hacker and the longitude and latitude, and this is in Iran. The attacker made off with some important certs that allowed them to sign things: mail.google.com, www.google.com, login.yahoo.com, login.skype.com, etc.

Immediately after the attack was discovered, the CEO issued the following statement: “This attack was extremely sophisticated and critically executed… it was a very well orchestrated, very clinical attack, and the attacker knew exactly what they needed to do and how fast they had to operate”. Again, I urge you to watch the video for today, because it covers this in depth. He also claimed that all the IP addresses involved in the attack were in Iran, and this sparked a debate on cyber war (see right-hand image above).

So, Comodo secures about 25% of the Internet. If one state was to compromise 25% of the Internet, imagine what they could do. They could do a lot. They can attack a lot of people. They can have a lot of power. And he went on to say in a later statement, after many statements, the following: “All of the above leads us to one conclusion only: that this was likely to be a state-driven attack”. So… drama, because you’re now talking about, essentially, cyber war.

After that first CEO statement the attacker posted something on Pastebin. It was basically a response to the CEO, and he posted many following responses to the CEO and rambled a lot. I believe the majority of his responses are impacted by knowing buzzwords and having bad English, because it’s my opinion that it’s a script kiddie who just can talk a really big game. He strung together technical concepts and attack concepts that don’t make sense. I urge you to check out the ramblings, because it’s actually pretty hilarious.

Surely there must be consequences to something like this. An amateur broke 25% of the Internet, and Comodo got attacked 3 more times later that year. Surely something happened to Comodo, right? I mean, we’re placing a lot of trust in them. They’re securing your bank account. If you had money in a bank downtown and 25% of your money was just stolen, and they were caught and they were investigated, wouldn’t you do business with someone else? Turned out nothing happened, no one cared, and the CEO of Comodo was named Entrepreneur of the Year at RSA 2011. No consequences; it’s like you’re too big to fail.

… Which brings us to DigiNotar (see right-hand image). DigiNotar ran into an issue when they noticed that they had signed a rogue *.google.com certificate. And this was presented to a number of users in Iran. When this came to the attention of DigiNotar, because it didn’t intentionally issue this certificate, they quickly revoked it and they claimed: “Hackers!”

If an attacker did this, which, it turns out, an attacker did, they were able to compromise the private key for DigiNotar and they were able to sign any certificate. And DigiNotar was a root CA that, at the time, your browser trusted; it was one of those 40.

This particular case is important, because the entire Dutch government runs off of DigiNotar certificates. So, the attackers were able to compromise the entire Dutch government! Problem? Even if they didn’t attack the great people of the Netherlands, it still poses a serious problem and is a big wake up call. What if this happened to the entire American government or the entire government of China and so on?

This time the attacker posted more stuff on Pastebin and he named himself as Comodo hacker, saying it’s the same guy: “I can 0day your mobo, fear me, and I have tons of SSL certs, I can sign for anything”.

What happened is the Dutch government seized the company and took it over. That same month the company was declared bankrupt. The lesson learned here is: if you’re a CA, you can often be too big to fail. However, when you fail, if you cause someone bigger than you to fail, then you’re screwed. It’s game over. Essentially, this was highlighted as a complete compromise of the CA system.

2011 was a bad year. In Israel StartCom was rumored to be breached – I don’t think it actually happened. GlobalSign was also rumored to be breached by the same hacker that got DigiNotar, but their reports concluded there was no evidence of any breach.

I’m bringing these rumors up because it’s important to know that in a network of trust, rumors can diminish the actual trust. And think about how that should affect your decisions if you were to make these decisions on your own. Say you’re working with a teammate on the material for your Master’s thesis or dissertation, and there is a rumor that their systems just got completely hacked and it all got stolen. You probably wouldn’t share any more information with them. However… we’ll talk about that later.

Another important thing that I want to talk about now, not because it happened but because of how it was handled, is that VeriSign was repeatedly hacked in 2010 (see right-hand image). When the attacks were discovered by the security and incident response team, the CEO and the management were not notified. So there was no public statement. In fact, there never was any public statement made by VeriSign saying: “Sorry customers, we were hacked”.

Now, it’s important to know that at the time, in the summer of 2010, VeriSign sold its SSL business to Symantec. But no one really knows when in 2010 the attacks began. When VeriSign had its SSL business, it secured over 50% of the Internet, which means .com, .net, .gov. Essentially, an attacker with a signed certificate for those top level domains could impersonate almost any company on the Internet.

These reports came out only because new SEC guidelines required reporting security breaches to investors. These new guidelines have subsequently resulted in an explosion of reports and filings about security breaches and breach risks. And you can read about that on that Reuters post.

VeriSign also runs other important services like DNS. And their DNS system processes 50 billion queries a day. So, instead of going to google.com, if I compromise that as a hacker, I can make you go to a website that I have malware on to own your box just by visiting it, perhaps.

At that scale, being able to own the Internet, people start throwing around the word “cyber”. And “cyber” to politicians means: “Oh my god, cyber war, I don’t understand anything”. Attacks of this scale would be extremely valuable to advanced persistent threats. Advanced persistent threats need not be state organized or state run. It can be a well-resourced and well-established underground group that really knows what it’s doing. It’s perhaps made of ex-government Special Forces and really ‘good’ people that just want to make a ton of money by robbing everyone blind.

Let’s get back to the problem of secure protocol. (Slide 38) The problem with SSL and the secrecy is that everyone is a CA nowadays. There’s really no accountability for CAs, unless they cause an entire government to fail. And authenticity – you could probably just ask for someone else’s certificate and you might get it. There’s no harm in even asking – they’re going to tell you yes or no.

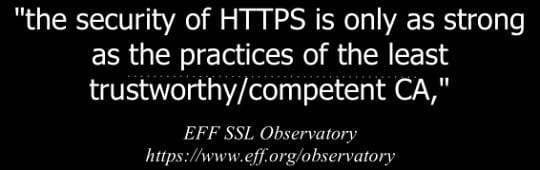

A good quote that I want to end this section of the lecture on is that “the security of HTTPS is only as strong as the practices of the least trustworthy and competent CA”.

The EFF’s SSL Observatory posted this map (see right-hand image) claiming that these are the countries that can intercept secure communications. Basically, these countries can strip SSL and decrypt SSL on any communications they want.

The flaw with this is that, thinking back to those rumors of CAs getting hacked, we can’t actually adapt to those problems. We can’t change anything. We’re locked into these trust relationships. When Comodo got hacked, the browsers could have dropped Comodo. However, they probably would have looked worse than Comodo, because they would have just broken 25% of the Internet.
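To make that lock-in concrete, here is a minimal Python sketch of the trust decision in this model. The `Cert` type, the CA names and the `browser_accepts` function are all made up for illustration; real validation also checks signatures, chains and expiry, which this toy skips.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cert:
    subject: str  # hostname the certificate claims to be for
    issuer: str   # name of the CA that signed it (hypothetical names below)

def browser_accepts(cert, hostname, trusted_roots):
    """Toy check: the name matches and ANY trusted root vouches for it."""
    return cert.subject == hostname and cert.issuer in trusted_roots

trusted_roots = {"CarefulCA", "SloppyCA"}

legit = Cert("bank.example", "SloppyCA")     # an honest customer of SloppyCA
rogue = Cert("mail.google.com", "SloppyCA")  # mis-issued after a breach

# The weakest root can vouch for any name, so the rogue cert is accepted.
rogue_ok = browser_accepts(rogue, "mail.google.com", trusted_roots)

# Dropping the root stops the rogue cert, but breaks every honest customer too.
after_drop = trusted_roots - {"SloppyCA"}
rogue_after = browser_accepts(rogue, "mail.google.com", after_drop)
legit_after = browser_accepts(legit, "bank.example", after_drop)
```

In this toy, the only lever the browser has is removing the root entirely, which is the all-or-nothing choice described above.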

Also, the problem with this is that market forces reward the cheapest trust vendors. And it’s only natural that we’re seeing an increase in CAs getting hacked. Now, just imagine the ones that don’t get detected and don’t get reported. The problem with the current model is also there’s no agility. We cannot adapt to disturbances in the force, and the trust becomes forever, basically.

There are some advisories on how to defend against the broken CA system, but in summary it’s really complicated. It means: “Just be prepared to use another CA”.

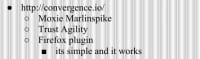

I have to make a quick mention of convergence.io (see right-hand image). It’s a Firefox plug-in that uses trust agility, which, basically, allows you to be free from the CA system.

So I will quickly end the lecture by covering attacks on SSL that don’t have to pick on the easy target of the CA system.

To recap: basically, you have this first handshake; then you establish the symmetric key using asymmetric key encryption, the public key crypto; and then you begin symmetric-key encrypted communication.
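As a rough illustration of that recap, the following Python sketch walks through the same three steps with toy primitives. Everything in it is an assumption made for illustration: it uses a small Diffie-Hellman key agreement as a stand-in for the public key step, a SHA-256 keystream as a stand-in for the symmetric cipher, and HMAC for message integrity. It is not how TLS is implemented and it is not secure.

```python
import hashlib
import hmac
import secrets

# Toy parameters: a small Mersenne prime and generator, far too weak for real use.
P = 2**127 - 1
G = 3

def keypair():
    """Return (private, public) for a toy Diffie-Hellman exchange."""
    priv = secrets.randbelow(P - 3) + 2
    return priv, pow(G, priv, P)

def session_key(my_priv, their_pub):
    """Hash the shared secret into a 32-byte symmetric session key."""
    shared = pow(their_pub, my_priv, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()

def keystream_xor(key, data):
    """Toy symmetric cipher: XOR the data with a SHA-256 counter keystream."""
    out = bytearray()
    for i in range(0, len(data), 32):
        block = hashlib.sha256(key + i.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)

def seal(key, plaintext):
    """Encrypt, then attach a MAC so tampering can be detected."""
    # Reusing one key for both encryption and the MAC keeps the toy short.
    ciphertext = keystream_xor(key, plaintext)
    tag = hmac.new(key, ciphertext, hashlib.sha256).digest()
    return ciphertext, tag

def open_sealed(key, ciphertext, tag):
    """Verify the MAC first, then decrypt."""
    expected = hmac.new(key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("MAC check failed")
    return keystream_xor(key, ciphertext)

# Steps 1 and 2: each side sends its public value and derives the same key.
client_priv, client_pub = keypair()
server_priv, server_pub = keypair()
client_key = session_key(client_priv, server_pub)
server_key = session_key(server_priv, client_pub)

# Step 3: the rest of the session uses the symmetric key.
ciphertext, tag = seal(client_key, b"user=alice&pass=example")
received = open_sealed(server_key, ciphertext, tag)
```

Note that nothing here authenticates who the public values came from. That is the job certificates are supposed to do, and it is exactly the part the CA problems above undermine.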

There are tools for breaking this (see right-hand image). sslstrip is what I had a demo for today, but the Wi-Fi here broke it, so I’ll release the demo on my own. It basically uses ARP poisoning to perform man-in-the-middle attacks on ignorant users. It reduces HTTPS to HTTP, so you no longer get that lock and green icon on the top of your URL bar saying: “This is trusted”. It just looks like normal HTTP, so there’s no warning.

With sslsniff, you get a CA cert; say, you’ve hacked Comodo or some other CA. You plug it into sslsniff and you can decrypt all SSL and TLS traffic, as long as you see the symmetric key being established.

BEAST and CRIME are worth mentioning. BEAST was presented by Juliano Rizzo and Thai Duong. It attacks SSL 3.0 and TLS 1.0; TLS 1.1 and later are not affected. CRIME came out in late 2012 and attacks all versions of TLS and SSL; the details are unknown, and they’re working with the browsers to fix it.

sslstrip is a simple tool. To attack a target you need 5 commands. You need to be on the same network as your victim. So, usually an attacker would be in a coffee shop, somewhere with open Wi-Fi. They need to be able to ARP spoof a victim, and what they’re going to do is they’re going to impersonate the gateway. Now, this can be a problem in Wi-Fi if the signal is stronger close to the victim, and it’s naturally messier in Wi-Fi. However, I’ll show you a demo without Wi-Fi.

Basically, what you do is, once you ARP spoof and you impersonate the gateway, the victim starts sending all this traffic to you, thinking you’re the gateway. What you do is you basically forward it to the legitimate gateway and you have proxy control over the traffic. So, once you’re able to intercept all the traffic, what sslstrip does is it looks for outgoing GET requests to a web server that has HTTPS in the packet and simply replaces every match of HTTPS with HTTP.
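The substitution step itself is tiny. As a hedged sketch in Python (the function name `strip_https` and the regular expression are my own, and the real tool does considerably more than this), it amounts to rewriting secure links in the content passing through the proxy and remembering which ones were changed:

```python
import re

# Matches an https:// URL up to the next quote, bracket or whitespace.
HTTPS_URL = re.compile(r"https://([^\s\"'<>]+)", re.IGNORECASE)

def strip_https(body, stripped):
    """Rewrite https:// links to http:// and record each downgraded URL."""
    def downgrade(match):
        plain = "http://" + match.group(1)
        stripped.add(plain)  # remembered so the proxy knows what it changed
        return plain
    return HTTPS_URL.sub(downgrade, body)

seen = set()
page = '<form action="https://example.com/login" method="post">'
rewritten = strip_https(page, seen)
```

The point of the sketch is how little is needed: the victim’s browser never sees a certificate error, because it never attempts HTTPS at all.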

Basically, the first thing you need to do is set up IP forwarding. When you’re not attacking the user, what happens is they go to a website that doesn’t have strict transport security enabled, and the login page is served over HTTPS, as normal. You have a username and password to log in, right? So, when you ARP spoof them, run sslstrip and degrade the communication from HTTPS to HTTP, and the user doesn’t notice that “Hey, this isn’t HTTPS in the URL”, then when that user sends out the username and password, you will get them in plain text, in either the GET or POST data sent from the client to the server.

So, what’s going on in this window (see right-hand image) is that he’s performing ARP spoof: basically sending ARP packets saying “Who is the gateway? I am the gateway” constantly, directly to the target. This is a targeted ARP spoof, and what it’s doing is overwriting the victim’s ARP cache. The victim keeps a cache of ARP responses so that it has an efficient snapshot of the network. When he floods it with bogus ARP packets, the victim replaces the value in the cache with whatever is most recent. Send in enough packets and this is guaranteed to happen.
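The reason this works can be shown with a toy model of the cache. The `ArpCache` class and the addresses below are invented for illustration and no packets are sent; the sketch only captures the idea that replies are not authenticated, so the most recent one wins, and that a defender could watch for the gateway’s entry changing.

```python
class ArpCache:
    """Toy model of a host's ARP cache: IP address -> MAC address."""

    def __init__(self):
        self.table = {}
        self.alerts = []

    def on_reply(self, ip, mac):
        # ARP replies are not authenticated, so the newest reply simply wins.
        old = self.table.get(ip)
        if old is not None and old != mac:
            # A defender could watch for exactly this kind of change.
            self.alerts.append((ip, old, mac))
        self.table[ip] = mac

cache = ArpCache()
cache.on_reply("192.168.1.1", "aa:aa:aa:aa:aa:aa")      # the real gateway answers
for _ in range(3):
    cache.on_reply("192.168.1.1", "ee:ee:ee:ee:ee:ee")  # repeated bogus replies
```

After the loop, the victim’s entry for the gateway points at the attacker’s MAC address, and the single alert records the moment the mapping changed.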

Now you see the victim is trying to log in, and he’s sending all this traffic to the attacker. When the user types in google.com/accounts, instead of returning HTTPS it returns HTTP. The user enters in his username and password if he falls for it, and this gets logged. The attacker is here, printing out the log, and you can see email and password being sent in, essentially, the GET requests. So, essentially, he can log in as the user.

The way to defend against this is to be a smart user. But for a website the defense is to have your website run strict transport security. This means SSL on all pages. Many websites do not do this, and there is no excuse for them not doing this. The only reason they don’t do this is because they’re completely incompetent. There’s been a lot of debate of “I can’t; I have a business, if I have SSL on all my pages it’s going to slow down all my traffic and everyone is going to leave me for my competitor, and they are not running SSL, and everyone knows that lag kills business”.

So, about a year or two ago Google considered this argument and implemented SSL on all of its services. It’s a less than 0.02 impact on all of its performance. If your engineer is telling you that you can’t do the SSL, it’s because everything’s been engineered, basically, wrong. You need to rethink things, and it shouldn’t impact your performance in any appreciable way.

With strict transport security, even if I’m running sslstrip, intercepting your traffic and rewriting it so that a request for HTTPS becomes a request for plain HTTP, the server will respond over HTTPS anyway, because it doesn’t serve up plaintext HTTP. And a browser that has already seen the site’s strict transport security policy refuses to talk plain HTTP to it in the first place.
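On the browser side, the policy arrives as a `Strict-Transport-Security` response header. The following Python sketch models, under simplifying assumptions, how a client could remember that header and upgrade later requests; the `HstsStore` class is hypothetical, it only honors `max-age`, and it expects the header name in exactly that capitalization.

```python
import time
from urllib.parse import urlsplit, urlunsplit

class HstsStore:
    """Toy model of a browser's memory of strict transport security policies."""

    def __init__(self):
        self.expiry = {}  # host -> unix time at which the policy lapses

    def note_response(self, url, headers, now=None):
        now = time.time() if now is None else now
        parts = urlsplit(url)
        value = headers.get("Strict-Transport-Security")
        if parts.scheme != "https" or value is None:
            return  # the policy only counts when it arrives over HTTPS
        for directive in value.split(";"):
            name, _, arg = directive.strip().partition("=")
            if name.lower() == "max-age":
                self.expiry[parts.hostname] = now + int(arg.strip('"'))

    def upgrade(self, url, now=None):
        """Rewrite http:// to https:// for hosts with an unexpired policy."""
        now = time.time() if now is None else now
        parts = urlsplit(url)
        if parts.scheme == "http" and self.expiry.get(parts.hostname, 0) > now:
            parts = parts._replace(scheme="https")
        return urlunsplit(parts)

store = HstsStore()
store.note_response(
    "https://example.com/",
    {"Strict-Transport-Security": "max-age=31536000; includeSubDomains"},
    now=0,
)
upgraded = store.upgrade("http://example.com/login", now=10)
```

The sketch also shows the limitation: the policy has to be seen once over a good HTTPS connection before it protects anything, so a first visit through an attacker’s proxy is still exposed.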

Another thing is sslsniff – like I said, if bad guys hack a certificate authority and get a certificate, basically, a private key, they can plug it into sslsniff and just decrypt SSL and TLS traffic on the fly and get the symmetric keys. It cannot be defended against. Basically, the defense is to not have a broken certificate authority system. We can all hope for a miracle.

BEAST was a crypto attack on SSL, or rather on TLS 1.0, back in 2011. It can recover HTTPS cookies from a session, and it takes less than 10 minutes on paypal.com. The defense is to use the latest versions.

CRIME – I don’t know anything about. It came out just last year. It’s the same team and it works against all versions. So, that is the end for today.