Jim Aldridge of cyber security firm Mandiant helps organizations investigate and respond to security incidents. His areas of expertise include security incident response, penetration testing, security strategy, and secure systems and network design. Jim has significant experience working with the defense industrial base, technology, and industrial products sectors. In the summer of 2012, Jim spoke at Black Hat USA. Privacy PC asked Jim several questions regarding his presentation: “Targeted Intrusion Remediation: Lessons from the Front Lines”.

– Jim, in Mandiant’s experience, what proportion of security budgets is allocated to preventing attacks, as opposed to detecting and remediating them?

– There are several factors that can determine how much an organization allocates to security, from the size of the company to the perceived value of its assets. What’s true for all is that allocating resources to detect and stop an attack before an intrusion is significantly less expensive than doing so after, when valuable IP has already been stolen.

– Are most organizations able to plan the remediation correctly and perform it quickly? Overall, how successful are organizations at remediation nowadays?

– Organizations are successful in remediation when they use an appropriate approach for their particular situation. Success doesn’t necessarily mean never having another intrusion but rather removing today’s attacker and implementing measures to respond more quickly and effectively the next time it happens.

– It seems that failing at a single step in your plan may undermine all other efforts. What happens if an organization is not able to perform one step, such as disconnecting systems from the Internet?

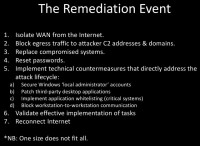

– Remediation approaches are not “one size fits all”. I have worked with many organizations that successfully remediated without disconnecting from the Internet. The key is tailoring the approach to the situation. Good visibility into the attacker’s activities and understanding the scope of compromise provide crucial inputs to effective decision making. For example, if the attacker is very interested in the environment, and is currently active, disconnecting from the Internet will likely be more important. If an organization cannot fully disconnect, it may be possible to isolate certain networks where compromised systems reside.

I also advise building some redundancy into the plan, such that it doesn’t fail catastrophically if one step is not executed correctly.

– To quickly detect indicators of compromise, organizations should know what is normal in their environment and traffic flows, and what is abnormal. How often do they actually know this?

– The size and complexity of modern IT operations make this area challenging. Some organizations have an understanding of “abnormal” for specific parts of their operations – e.g. abnormal traffic originating from specific servers that are highly monitored.

Is a user visiting a webpage that belongs to a local newspaper an indication of compromise? I’ve seen backdoors that obtain their instructions from the attacker by doing exactly that. Without the right intelligence, i.e. that a particular website is part of an attacker’s command and control infrastructure, how would you identify that?

Experience is crucial, as it enables one to spend time looking into truly suspicious conditions, ignoring red herrings.

– What are the best ways to quickly detect intrusions?

– The most effective incident response teams have knowledgeable, motivated people that are passionate about computer security hunting for intruders in the network. These people should be equipped with tools that provide them the ability to quickly ask questions about the environment. What systems out there have artifacts A, B and C? What systems resolved the domain name abc.123.com on January 8, 2013 between 05:00 and 08:00 UTC? The more quickly they get answers, and the more of the environment the queries cover, the better. Our Mandiant Intelligent Response (MIR) tool is something that we have developed over the years to help such teams solve exactly this problem.
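As a rough illustration of the second question above, the following Python sketch filters a set of DNS lookup records for a domain and a UTC time window. It is not how MIR works; the `DnsQuery` record layout, the host names, and the sample data are all assumptions made up for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DnsQuery:
    """One DNS lookup record (hypothetical schema, not a real tool's format)."""
    host: str
    domain: str
    when: datetime


def hosts_that_resolved(records, domain, start, end):
    """Return the sorted hosts that looked up `domain` between start and end (inclusive)."""
    wanted = domain.lower().rstrip(".")
    return sorted({
        r.host
        for r in records
        if r.domain.lower().rstrip(".") == wanted and start <= r.when <= end
    })


def utc(*parts):
    """Build a timezone-aware UTC datetime from year, month, day, hour, minute."""
    return datetime(*parts, tzinfo=timezone.utc)


# Made-up sample data for illustration only.
records = [
    DnsQuery("ws-014", "abc.123.com", utc(2013, 1, 8, 5, 42)),
    DnsQuery("ws-014", "example.org", utc(2013, 1, 8, 6, 0)),
    DnsQuery("srv-db2", "ABC.123.com.", utc(2013, 1, 8, 7, 59)),
    DnsQuery("ws-101", "abc.123.com", utc(2013, 1, 8, 9, 15)),  # outside the window
]

suspects = hosts_that_resolved(
    records, "abc.123.com", utc(2013, 1, 8, 5, 0), utc(2013, 1, 8, 8, 0)
)
print(suspects)  # ['srv-db2', 'ws-014']
```

The point of the sketch is the shape of the query: one indicator, one time window, every system in scope. In practice the hard part is collecting the records from the whole environment quickly enough for the answer to be useful.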

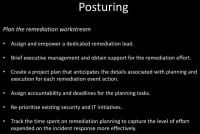

– Your method of remediation suggests typically 4 to 8 weeks of planning and 1-2 days of executing. But what do you observe in reality with organizations which do not use your method? How do they organize their time and how long does it take them to remediate?

– It depends on several factors. One is the size of the organization. A company with 135,000 systems on six continents will require more time to prepare than a small company with one office. Another is an organization’s willingness to support solving the problem.

An organization will ideally be ready to contain and eradicate the threat at the same time they understand the scope of the compromise. Organizations must determine what systems are infected with malware and how the attacker is accessing the network to develop an effective containment strategy. Proceeding with an incomplete understanding of the intrusion often means attackers retain access to an environment.

One to two days is typically the maximum amount of time organizations can feasibly be essentially disconnected from the Internet. With appropriate preparation, that is usually enough time to perform key tasks like replacing the infected systems and changing passwords, even in large, global companies.

– A lot of successful targeted intrusions have involved various red herring techniques. For example, some systems are hit with a DDoS attack and, while the whole security team works on it, a spear phishing email is used to get in from another direction. Do you see this as a growing issue?

– In my experience, attackers have no need to create a diversion. Most organizations fail to detect targeted intrusions.

– How difficult is it to find all of the attackers’ entry points?

– Academically speaking, we could build a list of all the potential ways you could get code to execute on a particular computer system and that would equal all the entry points. On a typical Windows system, there are dozens of executable files that may load hundreds of libraries. All of these are potential entry points. A global corporation with thousands of servers, dozens of business partners and hundreds of suppliers – each with entry points of their own – could potentially have hundreds of thousands of entry points.

All indications of attacker activity must be investigated thoroughly to find the actual entry points.

For example, if you’re an IT administrator, and have just noticed an antivirus alert for “pwdump” on your domain controller, you could hit the “clean” button and move on with your day. I’ve seen organizations take that approach and it doesn’t work.

Or, you could ask yourself how “pwdump” came to execute on that system. Perhaps two seconds prior to that file executing, a particular user account logged into the system. You would then find out from which system that connection originated, and take a look at that system. Perhaps there you would find a backdoor. As you investigate, you identify indicators of compromise: for example, attributes related to the “pwdump” file, the name of the account used to log on to the domain controller, and the registry key that the backdoor creates to maintain persistence.

If you want to understand all the entry points, you would continue that process iteratively until you had a complete story that explained how the attack happened. In the process, you would also have determined the information you need to successfully remediate.
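One step of that iterative process can be sketched in Python: start from the antivirus alert, find logons to the same host in the seconds before it, and record the account and source system as new indicators to investigate. The event dictionaries, field names, host names, and the five-second window are assumptions for illustration, not a real log format.

```python
from datetime import datetime, timedelta

# Hypothetical, simplified events; real logon and antivirus logs carry many more fields.
logons = [
    {"time": datetime(2013, 1, 8, 5, 41, 10), "account": "svc_backup",
     "target": "DC01", "source": "WS-014"},
    {"time": datetime(2013, 1, 8, 5, 41, 58), "account": "jsmith",
     "target": "DC01", "source": "WS-207"},
    {"time": datetime(2013, 1, 8, 5, 41, 59), "account": "jsmith",
     "target": "FILE02", "source": "WS-207"},
]
alert = {"time": datetime(2013, 1, 8, 5, 42, 0), "host": "DC01", "file": "pwdump"}


def logons_before(alert, logons, window=timedelta(seconds=5)):
    """Return logons to the alerting host that occurred within `window` before the alert."""
    return [
        e for e in logons
        if e["target"] == alert["host"]
        and timedelta(0) <= alert["time"] - e["time"] <= window
    ]


# Indicators collected so far; each pivot adds leads for the next round.
indicators = {"files": {alert["file"]}, "accounts": set(), "hosts": {alert["host"]}}
for event in logons_before(alert, logons):
    indicators["accounts"].add(event["account"])
    indicators["hosts"].add(event["source"])  # next system to examine

print(sorted(indicators["accounts"]), sorted(indicators["hosts"]))
```

Each newly added host or account becomes the starting point for the next pass, which is what makes the investigation iterative rather than a single lookup.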

– You mentioned that attackers follow a specific sequence of events. What types of dedicated attackers usually follow the steps described in your “attack lifecycle”, and can you describe an attacker who doesn’t?

– In my experience, most attacks follow at least some subset of this lifecycle. Targeted attacks will typically include multiple phases. Simpler attacks will follow fewer phases, for example just an initial intrusion and the theft of data.

– What should be done if an organization does not recognize a targeted intrusion and uses the standard whack-a-mole method? How difficult is it for them to quickly embrace the need to shift to your method?

– In my experience, it depends on management’s willingness to try a different approach. Often, an organization needs to experience the frustration of an ineffective approach first. A knock on the door from law enforcement, a frequent method by which organizations find out that they have been compromised by a targeted attacker, can also be a big motivator.

– Is poor visibility across systems a huge problem? To what extent do most organizations in your experience have visibility?

– More organizations have poor visibility than have good visibility. By poor visibility, I mean that they do not have the necessary processes and technology to know what traffic is exiting their network, or what indicators of compromise may be present on their systems. From a systems perspective, their primary means of visibility consists of antivirus tools, which provide limited capabilities.

– Why do organizations lack visibility? Is it expensive or does it require specific skills and experience?

– Most organizations “don’t know what they don’t know”. Implementing the right tools, and more importantly, having the right people to make use of the information, requires investment. It can be difficult to convince leadership of the need for such investments. After a targeted intrusion, it is typically easier to make the case for those investments.

– What is the weakest part of the attack lifecycle? Where do you usually start applying countermeasures, and is there a point where they are always successful?

– I don’t think that it’s possible to always be successful with a countermeasure. Take application whitelisting, which is one of my favorite countermeasures. Consider the example of an application whitelisting tool that has properly been implemented on a domain controller. Even if an attacker gains domain administrator privileges, he should not be able to run a password hash dumper on the domain controller. This is typically effective.

However, the Achilles’ heel in that equation is the trust placed in certificates, which are one of the ways the tool determines whether a program is allowed to run. When configuring the whitelisting tool, most users decide that rather than trusting thousands of Microsoft executables and libraries, they will just trust all files signed with a valid Microsoft digital certificate instead. This provides advantages from an operational perspective since it makes patches and updates easier; if you trusted the files instead of trusting Microsoft, you would have to trust all new files at every update.

A determined attacker that is able to compromise a certificate and sign malware, making it look legitimate, can defeat that countermeasure (I still strongly recommend application whitelisting: no countermeasure is perfect).
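The trade-off between trusting individual files and trusting a publisher can be sketched in Python. This is a simplified model rather than how any particular whitelisting product works: signature validation is reduced to a `signer` field, and the publisher name and file contents are invented for the example.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Executable:
    name: str
    content: bytes
    signer: Optional[str]  # publisher named on a valid signature, or None if unsigned


def sha256(exe):
    """Hex digest identifying this exact binary."""
    return hashlib.sha256(exe.content).hexdigest()


def allowed_by_hash(exe, trusted_hashes):
    """Per-file trust: only exact, previously approved binaries may run."""
    return sha256(exe) in trusted_hashes


def allowed_by_publisher(exe, trusted_publishers):
    """Publisher trust: anything validly signed by a trusted publisher may run."""
    return exe.signer in trusted_publishers


known_tool = Executable("tool.exe", b"vendor binary v1", "Example Vendor")
patched_tool = Executable("tool.exe", b"vendor binary v2", "Example Vendor")
hash_dumper = Executable("dump.exe", b"hash dumper", None)
# Same malware, but signed with a compromised certificate (assumed scenario).
signed_dumper = Executable("dump.exe", b"hash dumper", "Example Vendor")

trusted_hashes = {sha256(known_tool)}
trusted_publishers = {"Example Vendor"}

for exe in (known_tool, patched_tool, hash_dumper, signed_dumper):
    print(exe.name, exe.signer,
          allowed_by_hash(exe, trusted_hashes),
          allowed_by_publisher(exe, trusted_publishers))
```

In this model the patched binary is blocked under per-file trust until its new hash is approved, which is the operational cost described above, while publisher trust accepts the update automatically but also accepts the malware signed with the compromised certificate.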

I don’t see any parts of the lifecycle that are relatively more challenging than others from the attacker’s perspective. Escalating privileges may take some time and effort, particularly if the initially compromised system doesn’t immediately provide the attacker with privileged credentials. The key from a defensive perspective is to make as many of the pieces as difficult as possible, which provides more opportunities and time to detect and contain the intrusion.

– One of the most critical and difficult parts of remediation is a universal password change. Do you have any brief advice to help organizations with that?

– In short, it is important to understand the purpose of each account. With this understanding, it is typically straightforward to plan for how you would change the password for that account. This doesn’t make it easy necessarily, it merely provides you the information that you need to plan the change.

This presents significant difficulties in large organizations, with dozens to hundreds of service and application accounts, that have never considered the prospect of a massive password change. I advise my clients to plan for how they would execute an enterprise-wide password change as a routine incident response preparation activity.

While gathering the information necessary to do so, it is also beneficial to consider deploying a password vault tool. In addition to making it easier to quickly change passwords, such tools can make it more difficult for an attacker to obtain credentials.
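A minimal Python sketch of that preparation work might look like the following: an inventory of accounts with their purpose, owner, and the systems that use them, split into accounts that can be scheduled for a change and accounts that still need investigation. The inventory fields and account names are hypothetical.

```python
from collections import defaultdict

# Hypothetical inventory; field names and values are illustrative only.
accounts = [
    {"name": "svc_backup", "purpose": "nightly backup agent",
     "used_by": ["BKP01", "FILE02"], "owner": "infrastructure"},
    {"name": "svc_sql", "purpose": "database service",
     "used_by": ["DB01"], "owner": "dba"},
    {"name": "app_legacy", "purpose": None, "used_by": [], "owner": None},
]


def plan_password_change(accounts):
    """Group well-understood accounts by owner; list the rest as unknowns."""
    ready, unknown = defaultdict(list), []
    for acct in accounts:
        if acct["purpose"] and acct["owner"] and acct["used_by"]:
            ready[acct["owner"]].append(acct["name"])
        else:
            unknown.append(acct["name"])  # purpose must be understood first
    return dict(ready), unknown


ready, unknown = plan_password_change(accounts)
print(ready)    # {'infrastructure': ['svc_backup'], 'dba': ['svc_sql']}
print(unknown)  # ['app_legacy']
```

The list of unknowns is the useful output before an incident: those are the accounts whose purpose has to be understood before their passwords can be changed safely.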

– What are the next most difficult steps, maybe patching / updating software?

– Patching, particularly patching third-party desktop applications, is difficult. In my experience, the main reason for this is that some software requires legacy versions of these desktop applications to function. For example, if the company’s time and expense system requires an old version of Java, that makes it difficult to upgrade users’ systems without impacting important functionality.

Another difficult step, that is typically a longer-term activity, is removing privileges from end users. What I mean by this is that users will not be “local administrators” on their workstations. This has tremendous benefits across multiple phases of the attack lifecycle. The exploit that causes the initial compromise may work, but the attacker may be unable to establish a foothold because the backdoor won’t install. Not all backdoors require privileges, though, so perhaps the backdoor installs. But without local administrator privileges, the attacker can’t easily run the password hash dumping tool. So then the attacker would need to find a local privilege escalation vulnerability to exploit, or look around the network for vulnerabilities while operating in the context of an unprivileged user account.

– After a targeted intrusion, do most organizations start rethinking security and implementing strategic changes? Are there some organizations that stop just after eradicating the threat?

– I would say that most organizations do start rethinking their security posture, to various degrees. Some stop after eradication.

– How often do the same attackers come back? Do attackers follow the pattern after they have been eradicated and use the same tools, techniques, procedures; or do they start from scratch and try to act differently?

– That depends on the attackers’ motivations. Attackers that are motivated by espionage tend to return, using some combination of old and new tools. It’s not always possible for them to completely change things up; tools and command-and-control infrastructures take effort to maintain. The more sophisticated actors change more about their TTPs, the less sophisticated ones try what they know worked last time.

– How do you define new indicators of intrusion if the attacker acts differently?

– By looking for the activities that are common to the various phases of the lifecycle, plus applying threat-specific intelligence, then following a solid investigative process to develop an understanding of the new indicators.

– What do you think of honeypots as a protection mechanism which gives good return on investment? Do you use them?

– Honeypots can be interesting in some research scenarios, for example tracking certain automated threats. If you’re worried about targeted threats, the time that you spend building a honeypot infrastructure is time that you could have spent on something more fundamental. Unless you have already implemented extensive countermeasures, I view most honeypot deployment scenarios as diversions.

Diversions that distract organizations from addressing the fundamentals of detecting, inhibiting, and responding to the attack lifecycle are one reason why organizations are generally unprepared for this type of threat.

– Could you please give an example and describe a specific success story of quick remediation after a deep intrusion?

– Here’s a typical example: an organization contacted us because they had identified signs of targeted threat activity. Our investigation revealed that the attacker had initially gained access to the environment around two years prior to the organization’s discovering the attack. Over the course of around 6 weeks, we investigated and worked with them to develop a remediation plan. During this posturing phase, they implemented enhancements like better security log settings, some log centralization and searching capabilities, and determined how they would change passwords.

The attacker had already stolen sensitive information, and was operating in a maintenance mode – he would connect to the environment about once a month. He would then dump password hashes from a domain controller (the administrators reset their passwords monthly, so the attacker came back to get the new ones every month). Next he would double check that his backdoors were all installed and functioning. Finally he would check to make sure that he still had valid passwords for a couple of service accounts, which did not get new passwords monthly.

The organization initiated their “remediation event” plan on a Friday evening, disconnected certain networks from the Internet, and pulled the infected systems. Then they began to reset passwords. Thanks to their preparation, this went more quickly than they had hoped, and they had completed the critical containment and eradication activities by around noon on Saturday.