In a Defcon presentation, professional web bot developer Michael Schrenk tells an absorbing story of creating a specific botnet to gain competitive advantage.

I’ve had the opportunity to do a lot of really cool things in my career with bots, but the one thing that gave me more satisfaction than anything else I’ve ever done is the time I wrote a botnet that purchased millions of dollars’ worth of cars and defeated the Russian hackers.

So, let’s have some fun with this, alright? I’m going to tell you a story that involves hacking; that involves cars – I like cars; that involves Russian hackers, which is just pretty cool; and more than anything else it involves screwing with the system, or, as I like to tell my mother, creating “competitive advantages” for clients – that’s important.

I’ve been writing bots since about 1995. I started out doing remote medicine bots, if you can believe that. I’ve been involved with privacy, fraud detection, private investigations. I’ve done work for foreign governments. And I’ve got a fair amount of my business that is with automotive clients. What makes me a little bit different than a lot of other people that write bots is that I actually talk about it. Unfortunately, the only projects I get to talk about are things that are in-house projects that I’ve been doing. It’s really rare that I get the chance to talk about a specific project that I’ve done for a client.

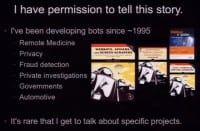

But I got permission to talk about this one, and it came about largely because when my last book was done, Linux Magazine approached No Starch and said: “Can Mike write an article for us?” And I really didn’t have anything ready to write for them, so I approached this old client and I said: “Enough time has passed; it’s been, like, six years. Let me write about this for a change.” And they agreed to let me do this. But that’s really key, because when you’ve got a piece of technology that provides a “competitive advantage”, that allows you to screw with the system strategically, you don’t want to tell people about it, right? That’s your trade secret, really. So, if you want to get a little bit different view of this project, if you can pick up one of the old copies of Linux Magazine (see upper right-hand image), I write about it in a little bit different way than the way I’m presenting it here.

Okay, what are you going to learn? You’re going to learn what makes a good bot project. I’m going to have to give you a little bit of insight into how retail automotive works in order for this whole thing to make sense. You’re going to get an awareness of commercial bots and botnets, and they actually do exist. And I’m also going to talk a little bit about, if I were to do this again today, how I would do this differently, because keep in mind this happened six or seven years ago.

So, what makes a good bot project? The very first thing you need to know is that you cannot be afraid to do something different. If your company has an Internet strategy, assuming it has an Internet strategy, that just involves browsers and things you can do with a browser – you’re really missing out, because you got the whole big wide Internet available to you, and everybody uses the same tool, browser, to access it. And if you expand your scope a little bit and do things outside of the way browsers work, or do things outside of the way websites are presented to you, you can create a lot of really cool things.

How many people here have written a screen scraper? How many people have written a spider? Well, if you’ve got a client, make sure they realize that just because you know how to scrape screens and you can write a spider, it doesn’t mean you can make a copy of the Internet. I get people approaching me all the time with ideas for projects; a lot of them basically want to create a copy of the Internet.

So, if your project requires both batch processing and real-time results, you’ve got a problem; or if you’ve got a project that requires just ridiculous scaling, you’ve got a problem – because unless you’ve got one of these (see right-hand image) your project is going to fail. You’re not going to replicate Google unless you’ve got one of these. And after I say to clients: “You really can’t do this,” they go: “Why not?” And I say: “Well, because Google spends about a million dollars a day on electricity – that’s why.” That’s why your project is going to fail.

I refer to the subject server as the ‘target’, and you shouldn’t assume you own that server. For example, I had a potential client approach me a few years ago, and he wanted to monitor prices on Amazon for about 100,000 items. I thought that sounded like a really useful thing to do (this guy is a big time Amazon seller) until I found out that he wanted to do this every five seconds (see right-hand image). That’s not going to work for lots of reasons. If you did something like this, Amazon would actually have to build additional infrastructure to support your project, and you’d end up in court with what they call a “trespass to chattels” suit. You want to avoid that, it’s very illegal.

Okay, number four, and this is maybe the most important thing: you have to have a realistic profit model. You notice I’m saying “profit model”, not “business model”. Why do I say that? This is why (see left-hand image).

And if I’m showing my age here a little bit, you can look at these (see right-hand image). Myspace actually made the list twice; I think that’s pretty impressive. So, why is it important that you have a realistic profit model? Why does it matter when people approach me and they want to do something that could just as easily be done on eBay, for example? This is important because the developer has to get paid. That’s very important.

About automotive retailing: just a little bit here; without this the project doesn’t make sense. New car sales are not as profitable as people think they are, even if you combine service with that, because it’s incredibly capital intensive and it’s super-super competitive. But you need to have new car sales so that you’ve got credibility if you want to sell used cars. This is particularly true if you want to sell high-end used cars; nobody wants to go to the corner of your lot for that kind of stuff.

The thing that I learned – I didn’t realize it, I just assumed that the used cars on a car lot were all trade-ins – well, that’s not the case. It can’t be the case because you can’t grow a business if you’re going to do that, and it’s really limiting. Car dealerships spend tons of money acquiring good used cars to put on the car lot.

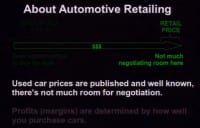

And it’s kind of bizarre, the way it works, because you walk into a car lot and you know what the price should be for a particular car because it’s very well documented – you can go to Kelley Blue Book or any place. So dealers don’t have a lot of space to work on the final retail price, but down on the wholesale side is where the margins are made. If you’re good at buying things for a great price, that’s how you make money with used cars, and that’s what this project is about.

So, a car dealer came to me; he had a great opportunity, found a wonderful website that was part of the national franchise. They were getting in used rental cars, two years old, 12-16 thousand miles, well maintained – perfect cars that you’d want to have on your lot. Unfortunately, there’s a lot of competition for these cars because all the people in that dealership chain wanted the same cars, and the website was horrible and made it almost impossible to buy the cars. So there was a lot of frustration.

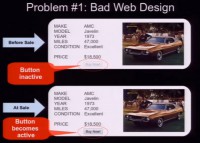

This is kind of the way it worked. There would be maybe 200-300 cars presented every day, and the cars would have little display ads like this (see left-hand image) that gave a little bit of a description. And there was an inactive “Buy Now!” button. At exactly sale time, the button would appear. But the problem with this was it wasn’t using AJAX or anything, so you had to physically sit and refresh the browser constantly to get that button to appear.

Well, this led to another problem: there was an incredible server lag (see right-hand image). My client – and I think he was probably pretty typical of all of them in this chain – he would grab every person he could find, people out of parts, off the sales floor, administrative assistants, and sit them all in front of computers. Each one of them was assigned maybe about six cars, so they’d have six browser windows open, and they’re all sitting there frantically hitting the Refresh button constantly.

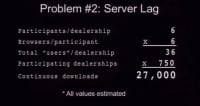

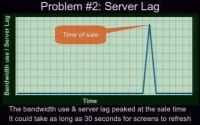

So, if you think about this, this would be roughly the equivalent of 36 users for this one dealership. I don’t know, maybe there were 750 dealers that were doing this. So, that was almost 30 thousand simultaneous downloads that were happening at sale time (see left-hand image). Servers should be able to handle it, right?
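As a rough check on that arithmetic, the figures can be multiplied out as below. The count of six people per dealership is an assumption (it is what "36 users" implies at six browser windows each), and the 750 dealerships is the speaker's own guess.

```python
# Back-of-the-envelope load estimate using the talk's rough figures.
PEOPLE_PER_DEALERSHIP = 6  # assumption: implied by "36 users" at six windows each
WINDOWS_PER_PERSON = 6     # "six browser windows open"
DEALERSHIPS = 750          # the speaker's guess, not a measured number

users_per_dealership = PEOPLE_PER_DEALERSHIP * WINDOWS_PER_PERSON
simultaneous_sessions = users_per_dealership * DEALERSHIPS

print(users_per_dealership)   # 36
print(simultaneous_sessions)  # 27000
```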

But I think there was some inefficiency with the database possibly, some bad queries were being made, and this caused a ridiculous peak in server lag time right at the point where you don’t want to have it (see right-hand image). It wouldn’t be unusual for it to take 15 or 30 seconds for the screen to refresh at sale time. Sometimes it would just time out. So, this was a real problem.

The other problem is that out of these, say, 200 cars that were up for sale every day, there were maybe five that every single dealership in the country wanted, either because they were the right color, probably because they were a really great price, or for whatever reason, I don’t know. But every dealership would want these five cars, so you’d have a lot of competition for the same cars. Plus server lag, bad web design, you had to involve a lot of people to do this.

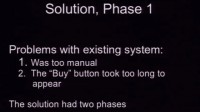

So, this particular client – I had written a number of bots for him in the past – gave me a call and said: “Can you help me out Mike?” I said: “Let’s take a look.” The system was way too manual, to begin with. So, the way this would work was he would have to manually go and select the cars that he wanted to buy; he’d have to distribute the VIN numbers to the various people; he’d have to call people in off of their normal duties that they would be doing; they’d be dedicating probably a good 15-20 minutes hitting the Refresh button every day. So, that wasn’t good, plus the Buy button took way too long to appear because of the server lag. We ended up with two solutions: one of them because it worked; the second one because we had competition.

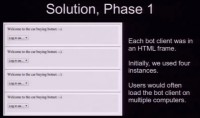

So, let’s look at phase one first here (see left-hand image). And, again, this is not classic bot design. And keep in mind this was done some six years ago, I don’t develop like this anymore. Okay, so here’s what I did. I came up with a web interface for my client, and if you look here this is basically just four HTML frames that were independent from each other. By the way, I say “botnet” but this was all done on computers that we owned, so there’s a difference. In fact, all of the bots that I write are all commercial bots, we own all the hardware; I just wanted to let you guys know that. So, instead of hauling in all these people that hit the Refresh button constantly while they should be doing something else, my client was able to pull up something like this. And quite frequently he would have two or three computers set up with this in the browser, and he would select what cars he wanted.

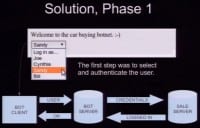

The first step was to log on (see right-hand image). It was a closed sale basically, and they had several accounts they could use, so the first thing they would do is they would pick which account they wanted to use for this particular bot.

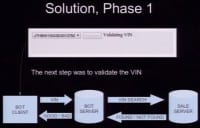

And the next step was you would pick the VIN number of the car you wanted (see left-hand image), and it would go ahead and validate that that was an actual car for sale. That’s important because anytime you’re writing a bot you don’t want to do something that could not possibly be done by a human. And if there’s a car that is not available for sale you don’t want to try to buy that, because some system admin somewhere is going to say: “How do they do that?! What is that IP address? They’re generating a lot of traffic!” So it’s important to validate stuff like that.
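A minimal sketch of that kind of validation, assuming the day's sale list has already been collected into a set: it checks that the input looks like a VIN (17 characters, never the letters I, O, or Q) and that the car is actually listed. The function name and the sample VINs are made up for illustration; the talk does not describe how the original check was implemented.

```python
import re

# Modern VINs are 17 characters long and never use the letters I, O, or Q.
VIN_PATTERN = re.compile(r"^[A-HJ-NPR-Z0-9]{17}$")


def is_purchasable(vin, listed_vins):
    """Return True only if the VIN is well formed and is in today's sale list."""
    vin = vin.strip().upper()
    return bool(VIN_PATTERN.match(vin)) and vin in listed_vins


# Made-up sample data standing in for the day's listing.
todays_listing = {"1HGCM82633A004352", "2T1BURHE5FC123456"}

print(is_purchasable("1hgcm82633a004352", todays_listing))  # True
print(is_purchasable("1HGCM82633A004353", todays_listing))  # False (not listed)
```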

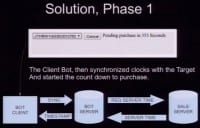

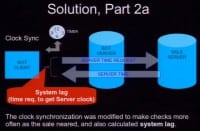

So, as soon as the VIN was validated, a little Start button would appear. Instead of having to be there right at sale time, you could do this hours in advance, hit the Start button, and then it would start to count down. The way it would do this is it was basically synchronizing its clock with the server clock of the sale server, and this is really simple stuff (see right-hand image). Using an HTML meta refresh, it would just start refreshing every so often. As the sale got closer and closer, it would refresh more often until right at the end it was in lockstep with the server clock. And as soon as it timed out, it would go ahead and it would attempt to purchase the car. Now, this shows just one bot client. Basically, the bot clients acted as triggers for the server that actually made the purchase. There may have been 16 to 30 of these running, triggering the server.
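The countdown idea can be sketched as a function that picks the next refresh interval from the time remaining. The specific rule here (a tenth of the remaining time, clamped between one second and ten minutes) is an assumption chosen for illustration, not the schedule the original bot used. A page could write the returned value into the `content` attribute of a `<meta http-equiv="refresh">` tag.

```python
def next_refresh_delay(seconds_until_sale, floor=1.0, ceiling=600.0):
    """Return how many seconds to wait before refreshing again.

    Hypothetical rule: wait a tenth of the remaining time, but never less
    than `floor` or more than `ceiling`. Returns 0.0 once sale time arrives.
    """
    if seconds_until_sale <= 0:
        return 0.0
    return max(floor, min(ceiling, seconds_until_sale / 10))


def refresh_schedule(seconds_until_sale):
    """Simulate the whole countdown and return the list of waits."""
    remaining = float(seconds_until_sale)
    delays = []
    while remaining > 0:
        # Never overshoot the sale time on the final wait.
        delay = min(next_refresh_delay(remaining), remaining)
        delays.append(delay)
        remaining -= delay
    return delays


print(next_refresh_delay(7200))  # 600.0
print(next_refresh_delay(300))   # 30.0
print(next_refresh_delay(5))     # 1.0
```

The waits shrink as the sale approaches, so most of the traffic is concentrated in the last minute, which is where accuracy matters.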

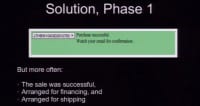

Sometimes we’d miss one. But more often the sale was successful (see left-hand image), and we would send an email confirmation to my client saying: “You bought this car!” And we would also arrange for financing for him. While we were at it, we’d make sure that the car actually was shipped correctly back to his dealership. So the bot provided a lot of utility in that regard.

How successful were we? Well, before, he wasn’t getting anything, and this was really frustrating for him because these were cars he really wanted and he knew he could make a profit out of them, given the price they were selling for. After, we were getting probably 95%-97% of the cars he was trying to buy. So, the difference was phenomenal. It was so much fun, because even after I was done developing this I would get a call every day from my client 15-20 minutes after the sale, and he would say: “Mike, we bought five out of six today!”, “We got seven out of seven!”, “We got nine out of twelve!” And I was like: “Settle down, don’t get greedy here. Don’t kill the golden goose.”

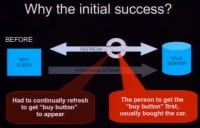

So, why were we successful at this? The main problem with the old one is that people had to wait for that stupid “Refresh” button or that “Buy Now!” button to happen. And there was so much server lag that that was a problem. And usually, whoever got the Buy button first was the person that bought the car (see left-hand image).

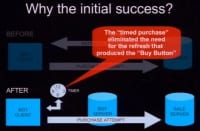

So, basically, what we did is we got rid of the Buy button. We just got rid of it, and we replaced it with a timer that was automated so you didn’t need that person hitting Refresh all the time (see right-hand image). And it would just know what time to buy the car and it would go ahead and buy it. This type of a bot is typically called a “sniper”. I remember back in the day when I was doing this, we were testing and I was going to write in an email that said something to the effect of “I’ve got six “snipers” waiting to hit cars at noon; hopefully we’ll make some hits today”, or “I’ll have some kills” or something like that. And I was just about ready to send that email and I started thinking about Carnivore and some of the stuff that was happening back then, and I thought: “Nah, I’ll just give him a call…” Today I would never send an email like that; I’m not even sure I’d make a phone call. So, yeah, watch your language.

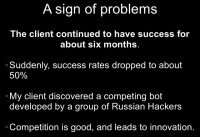

Everything worked great for about six months, and then all of a sudden things weren’t as rosy anymore. The client would call and he would say: “We only got two out of seven today; something’s wrong.” He did some research and he discovered through his connections – he’s got lots of connections – that there was a group of Russian hackers that were hired to write a competing bot, and the dealership was someplace out in New Jersey. Competition is good, right? It leads to innovation, and I was kind of thinking: “Yeah, this is going to be fun now, we’ve got an arms race going on here!”

So, here’s part two of the solution (see left-hand image). What I did differently is while I was synchronizing clocks with the sale server I started looking at lag time. And I got to the point where I got really good at estimating how much lag time there would be at the sale time. In other words, what I was essentially doing is I was estimating how many users were on the system.

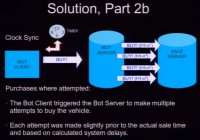

And with that information, I would not send just one attempt to buy the car, but for each bot I would launch maybe between five and seven attempts to buy the car. And based on the amount of lag time that I was going to anticipate at the sale time, I would launch them incrementally just a little bit before the sale time (see right-hand image). This was really successful. Now there would be a number of bots and each one of those basically had a warhead that launched multiple attempts to buy the cars.
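One way to picture the staggered launch is as a set of lead times spread around the estimated lag. Everything in this sketch is an assumption made for illustration: the lag estimate is a plain average of recent round-trip times, and the attempts are spaced evenly between 50% and 150% of that estimate. The talk does not give the actual formula.

```python
from statistics import mean


def estimate_lag(recent_round_trips):
    """Estimate lag at sale time as the mean of recent round-trip times (seconds)."""
    return mean(recent_round_trips)


def launch_offsets(estimated_lag, attempts=5, spread=0.5):
    """Return how many seconds before sale time each attempt should fire.

    Offsets are evenly spaced from (1 + spread) * lag down to (1 - spread) * lag,
    so the attempts bracket the moment a request would arrive exactly on time.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    if attempts == 1:
        return [estimated_lag]
    start = estimated_lag * (1 + spread)
    step = 2 * spread * estimated_lag / (attempts - 1)
    return [start - i * step for i in range(attempts)]


lag = estimate_lag([1.5, 2.0, 2.5])
print(lag)                  # 2.0
print(launch_offsets(lag))  # [3.0, 2.5, 2.0, 1.5, 1.0]
```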

Our success rate prior to making this fix, during the competition, was about 50%. After, we were back right on the money, we were getting every car we wanted (see left-hand image). It stayed that way through the duration of this program.

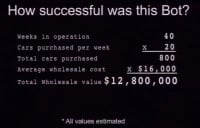

So, how successful was the bot? These are all guesses, because I don’t have any hard facts here. But I know it was in operation for about 40 weeks, and they were buying roughly five cars a day. That’s about 800 cars purchased with this. If you figure the average wholesale cost of the cars they were purchasing, it was probably around $16,000. So, in a 40-week period this bot purchased almost $13 million worth of cars (see image above). That has a huge impact on a small dealer like this one. So, this is a great example of not accepting the web as it is, not using browsers where everybody else would, but doing something different and not being afraid to step outside the box a little bit.
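For reference, the estimate multiplies out as follows. The inputs are the speaker's rough guesses from the paragraph above, not recorded figures.

```python
# Rough totals from the speaker's own estimates.
CARS_PURCHASED = 800          # "about 800 cars"
AVG_WHOLESALE_PRICE = 16_000  # "probably around $16,000"
WEEKS_IN_OPERATION = 40       # "about 40 weeks"

total_spend = CARS_PURCHASED * AVG_WHOLESALE_PRICE
cars_per_week = CARS_PURCHASED / WEEKS_IN_OPERATION

print(f"${total_spend:,}")  # $12,800,000
print(cars_per_week)        # 20.0
```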

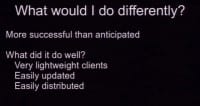

What would I do differently today if I was going to do this? First, there were things that were done pretty well back then, and things that I still do today. I really like having very lightweight clients: the lighter the better. Everything was easily updated because it was all online; and it was easily distributed, I could make changes on the server, it would get distributed everywhere (see left-hand image). These bot clients were, essentially, just web pages with some JavaScript and stuff going on.

One of the things I really definitely would do if I were to do this over is I would build in some analytics and collect metrics (see right-hand image). I would really want to know exactly what our success rate was. I would want to know exactly how much these cars were purchased for. It would be really great to also know how much they were sold for so it could actually show value. That’s something I really wish I had done.

The other thing, I think, that would have been nice if I were to do this over again is build in some process that actually assists in the selection of which vehicles you want to purchase (see left-hand image). In other words, maybe what I would have done is I would have my bot look at Kelley Blue Book and figure out what the good wholesale prices are for cars, and look for discrepancies, look at the ones that are underpriced. That would have been a really good thing to do.
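A selection helper along those lines might look like the sketch below. It assumes the guide wholesale value for each car has already been obtained somehow; no real pricing API is used or implied, and the `Listing` fields, the 5% threshold, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    vin: str
    asking_price: float
    guide_wholesale: float  # hypothetical reference value from a pricing guide


def discount(listing):
    """Fraction by which the asking price undercuts the guide value."""
    return (listing.guide_wholesale - listing.asking_price) / listing.guide_wholesale


def underpriced(listings, min_discount=0.05):
    """Return listings at least `min_discount` below guide value, best deal first."""
    picks = [l for l in listings if l.guide_wholesale > 0 and discount(l) >= min_discount]
    return sorted(picks, key=discount, reverse=True)


# Made-up sample data.
cars = [
    Listing("CAR-A", 15_000, 16_000),  # 6.25% under guide
    Listing("CAR-B", 16_000, 16_000),  # priced at guide
    Listing("CAR-C", 13_000, 16_000),  # 18.75% under guide
]
print([c.vin for c in underpriced(cars)])  # ['CAR-C', 'CAR-A']
```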

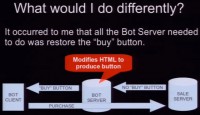

The other thing that occurred to me actually within the last week is probably the only thing I really needed to do here was make that “Buy Now!” button happen (see right-hand image). And I could have done that simply by making the server act kind of like a proxy so that as HTML is coming in with the greyed-out button, I could have just replaced it with a real button and sent it off to the browser. That probably would have worked. The problem there is that, conceivably, you could have bought cars before the purchase time, and that may have been allowed, but that’s something you don’t want to do for the same reason you don’t want to buy cars that don’t exist. You don’t want to show your hand.

The website, the target, was a very traditional website. It used HTML forms which are really easy for me to emulate or submit using just PHP and cURL. Today you don’t find that so often. You find a lot of JavaScript, you find a lot of AJAX. There’s a lot of JavaScript validation of form data before it’s submitted (see left-hand image). It makes it a lot harder to do this kind of thing today.
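To show why plain HTML forms are easy to emulate, here is a small sketch that builds the same kind of URL-encoded POST a browser would send. The original work used PHP and cURL; this uses Python's standard library instead, and the URL and field names are invented placeholders. The request is only constructed here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_form_post(action_url, fields):
    """Build (but do not send) the POST request a simple HTML form would submit."""
    body = urlencode(fields).encode("ascii")
    return Request(
        action_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Placeholder URL and field names, for illustration only.
req = build_form_post(
    "https://sale.example.com/buy",
    {"vin": "1HGCM82633A004352", "account": "dealer-01"},
)
print(req.get_method(), req.full_url)  # POST https://sale.example.com/buy
print(req.data.decode())               # vin=1HGCM82633A004352&account=dealer-01
```

A site that builds or validates its requests in client-side JavaScript gives you no static form to copy like this, which is the difficulty described above.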

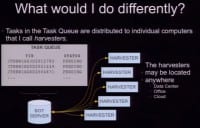

The approach that I take now is to end up with a Task Queue, which is basically a table in a database that keeps track of what needs to be done (see right-hand image). And there’s a web interface into that. In this particular case, my client would, essentially, be loading a Task Queue. That Task Queue would be fed to individual computers, which I refer to as “harvesters”, and they can exist anywhere. They can be in the cloud, they can be in a closet, they can be in your office – they can be anywhere.
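A task queue of that shape can be sketched with a single database table. This is a minimal single-process illustration using SQLite; the table layout, status values, and function names are assumptions rather than the speaker's actual schema, and a real deployment with many harvesters would need the claim step to be atomic.

```python
import sqlite3


def open_queue(path=":memory:"):
    """Open the queue database and create the tasks table if needed."""
    db = sqlite3.connect(path)
    db.execute(
        """CREATE TABLE IF NOT EXISTS tasks (
               id INTEGER PRIMARY KEY,
               vin TEXT NOT NULL,
               status TEXT NOT NULL DEFAULT 'pending',
               harvester TEXT,
               result TEXT)"""
    )
    return db


def add_task(db, vin):
    """Called by the web interface: queue one car to buy."""
    cur = db.execute("INSERT INTO tasks (vin) VALUES (?)", (vin,))
    db.commit()
    return cur.lastrowid


def claim_task(db, harvester):
    """Called by a harvester: take the oldest pending task, or None if empty."""
    row = db.execute(
        "SELECT id, vin FROM tasks WHERE status = 'pending' ORDER BY id LIMIT 1"
    ).fetchone()
    if row is None:
        return None
    db.execute(
        "UPDATE tasks SET status = 'claimed', harvester = ? WHERE id = ?",
        (harvester, row[0]),
    )
    db.commit()
    return row


def report_result(db, task_id, result):
    """Called by a harvester when it is done, so the queue shows the outcome."""
    db.execute(
        "UPDATE tasks SET status = 'done', result = ? WHERE id = ?",
        (result, task_id),
    )
    db.commit()


db = open_queue()
add_task(db, "VIN-A")
add_task(db, "VIN-B")
task = claim_task(db, "harvester-1")
report_result(db, task[0], "purchased")
print(task)  # (1, 'VIN-A')
```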

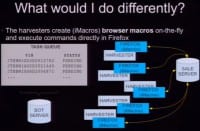

What I have them do now, since there’s so much more complexity in websites and so much more use of client-side scripting, is I do a lot of stuff in iMacros (see left-hand image). That’s the most amazing tool! It’s just an add-on for your browser that, essentially, lets you create a macro for your browser that you can replay over and over again. And what I do now is the “harvesters” will dynamically create the macro so you can get them to do some very specific things. Once I learned how to do that, there was not a single website on the planet I could not manipulate. So, that’s what I do now. I actually communicate through Firefox, so it’s very easy for me to emulate human activity now with bots. I would have them hit the sale server. The difficulty there would be to get the timing done correctly, but I think that could have been done. And then the “harvesters”, after they do their thing with the sale server, the target server, report back to the bot server, and the queue is updated – that’s how you can tell what the results were of what you did.

If you’re interested in how that kind of stuff works, go on YouTube and look up my Defcon 17 talk (see right-hand image), because that’s all about manipulating iMacros and using it to build screen scrapers for sites that are very difficult to scrape or difficult to automate.

So, that’s basically it. Thank you to the CFP Goons. Thank you!