Defcon presentation by computer security researcher Moxie Marlinspike on the past, present and future of the SSL encryption protocol and of authenticity as such.

Okay, let’s talk about SSL and the future of authenticity. Really, this talk is about trust, and I wanna start this talk out with a story – it’s kind of a downer, but I feel like it’s illustrative of the situation that we’re in. And the story is about a company called Comodo. They are a certificate authority and, according to Netcraft, they certify somewhere between a quarter and a fifth of the certificates on the Internet today, so it’s the second largest certificate authority in the world.

In March 2011, Comodo was hacked. The attacker was able to make off with a number of certificates – you know, mail.google.com, login.yahoo.com, Skype – basically, everything that the attacker would need to intercept login credentials to all of the popular webmail providers and a few other services. And so immediately after the attack the founder and CEO of Comodo issued a statement, where he said “This [attack] was extremely sophisticated and critically executed… it was a very well orchestrated, very clinical attack, and the attacker knew exactly what they needed to do and how fast they had to operate”. He went on to add that all of the IP addresses involved in the attack were from Iran; you know what this means – cyber. He actually spelled it out, he said “All of the above leads us to one conclusion only: that this was likely to be a state-driven attack”. So he’s painting a pretty complete picture for us here, right? This isn’t just a hack, this is war. Who could blame Comodo for falling to the full assault of a state-sponsored invasion, you know, from a cyber army?

And so, ironically, it was these statements that really catapulted this story out of the trade press and into the mainstream media. And so a number of reporters called me, and they had the same question: “What does this mean? What can this attacker do?” And I said “Well, you know, it means they can intercept communication to these websites”. The reporters would say “Well, how? How would they use these certificates to do that?” I would say “Well, you know, I think there are commercial solutions, you know, Blue Coat and a few other kind of scary interception devices out there”. And one of the reporters said “Now, what is the easiest way? What is the most straightforward way that the attacker would leverage these certificates?” And I thought about it and said “Well, the attacker could just use ‘sslsniff’, which is a tool that I wrote to perform man-in-the-middle attacks against SSL connections”.

Now, interestingly enough, when Comodo published their incident report, they also published the IP address of the attacker, which is somewhat unusual, but I think they were doing this to sort of underscore the Iran-Iran-Iran thing, because this is the IP address registered to a block in Iran (see image).

And so, I was thinking about the reporter’s question – the ‘sslsniff’ and all that stuff, and so I thought, well, I wonder. So I went and I looked at my web logs for my web server where I host ‘sslsniff’ (see image). And sure enough, the morning after the attack the same IP address that Comodo had published downloaded ‘sslsniff’ from my website. Now, there are some other interesting things in here: first of all, the attacker is running Windows; and also interestingly, the attacker’s web browser is localized to US English.

But the most interesting thing was the referrer. So I went back to my web logs and I found the point that the attacker initially made a connection with my website so that I could see the website that they had visited before. And so, the referrer was the Hak5 video on using SSLstrip. For those of you who don’t know, Hak5 is sort of like a set of video tutorials that are pretty introductory material for the people who are just getting interested in this kind of thing.

So just to break this down for you: on the one hand, we have the CEO of Comodo saying it was a “clinical attack”, and on the other hand you see that the attacker is literally following video tutorials on the Internet. I mean, maybe that was a great video, I don’t know. I haven’t watched it yet. They could have turned it into a clinical attack, or I’m not sure.

And then, there were a number of other sort of embarrassing searches that led them to my same website again and again throughout the day, so I saw a couple of Google search referrers which were things like “SSL protocol mitm howto iptables prerouting”. Apparently, he was having some trouble setting up his iptables rules.

So I was kind of chuckling about this to myself. And then, the attacker posted a communiqué, and it could not have been more embarrassing. I mean, he alternated between making these grandiose impossible claims about how he’s hacked RSA and all that stuff, while simultaneously very proudly declaring that he’s capable of doing extremely trivial things like, you know, he could export functions from .dlls and stuff like that. So this could not have been more embarrassing for really anybody involved – you know, the attacker, Comodo… What is worse, he just wouldn’t shut up! He just kept posting communiqués, each one more embarrassing than the last, and I think he posted six interviews with the press – that stuff was ridiculous.

And so the Comodo founder and CEO responded to these events by making a statement where he said “If there were a Secure and Trusted DNS, this issue would be a moot point! We need a Secure and Trusted DNS!” So this guy has just very enthusiastically declared that he does not understand the business that he’s in. On the one hand, he seems to be suggesting that DNS tampering is the only way to perform a man-in-the-middle attack, which is just not true; and on the other hand, even if that were true, the reason that we have SSL certificates is to stop man-in-the-middle attacks. If man-in-the-middle attacks weren’t possible, we wouldn’t need the certificates that he’s selling us.

Later that month, they got hacked two more times, and the next month they got hacked again. Now, normally I wouldn’t take this much time to be so critical of a company like Comodo, but I think it’s an interesting story because I think there’s an interesting question here, which is “What happened to Comodo?” After all of this, it couldn’t have been more embarrassing, could not have been worse, really. You know what happened to them? Nothing. The business didn’t suffer, they didn’t lose customers, they didn’t get sued. Really, the only thing that happened to Comodo was that their CEO was named entrepreneur of the year.

A Secure Protocol

– Secrecy

– Integrity

– Authenticity

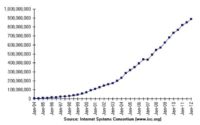

If you wanted to use RSA (the algorithm), you had to license the patent from RSA (the company), you had to pay money in order to just even perform this type of cryptography. E-commerce didn’t exist: the idea of transmitting your credit card number over the Internet was totally foreign. There were no such things as web applications really – you know, people weren’t really transmitting their login and password credentials through websites. And the Internet itself was tiny. You know, in 1994 – according to ISC – there were fewer than 5 million hosts on the entire Internet. Compare that to today where there’s over 4 billion. At the time, there were probably fewer than ten ‘secure’ sites that you could think of – fewer than ten sites where for some reason you wanted the traffic to be encrypted, whereas today there are more than 2 million certificates on the Internet, more than 2 million sites that are using SSL.

At the same time, you know, it’s worth remembering that SSL was developed at Netscape, and this was an environment of really intense pressure. The race was really on then and this is the same place where the series of 4am decisions gave us JavaScript, and we’re still dealing with that today.

So, you know, actually when you look at it, the designers of SSL were actually pretty heroic. They didn’t have a lot to work with, and they were working in circumstances that were totally different from the circumstances today, and yet it served us pretty well. You know, when it comes to these first two things – secrecy and integrity – they did okay, there’ve been some problems and there’re still some problems, but the piece that has always cost a real fortune and is now causing real problems is the authenticity piece.

Authenticity is important of course, because normally, if you establish a secure session with a website, the problem is that if you don’t have authenticity, someone could have intercepted your connection to that website to establish a secure session with you – they make their own secure session with the website and just shuttle data back and forth, logging it in between (see image). But what’s easy to forget is that a man-in-the-middle attack was entirely theoretical in 1994 or 1995. The network tools didn’t exist, this wasn’t the kind of thing that was actively happening, this was thought of as an academic thing. You know, it’s like – oh well, there’s this other thing called the man-in-the-middle attack, and we need to design something theoretically to prevent against that.

And so the designers came up with a solution that was certificates and certificate authorities, where every site has a certificate and it’s known to be authentic because it’s signed by a certificate authority which is just some organization that we’ve decided to trust. I have this hypothesis that we’ve outrun the circumstances in which SSL was originally imagined, and that it’s a different world today.

And then I thought – well, I wonder if that’s true, I wonder what they were actually thinking. And so I thought – well, I should talk to the people who designed SSL. I did some research and I figured out that SSL was originally designed by this guy Kipp Hickman who was a Netscape employee back in the day, and the last thing that Kipp Hickman posted to the Internet was in 1995. It was difficult to find him, you know, I talked to some people at Netscape who would point me in the right direction, and eventually I tracked him down, I basically just cold-called him. You know, I talked to him on the phone, and he’s a great guy. He was like “Oh, SSL! Yeah, I haven’t thought about that in a long time!” Yeah, okay, you know… I was like “So, certificate authorities, what was the deal?”, and he said “Oh, that whole authenticity thing… We just threw that in at the end. We were designing SSL to prevent passive attacks for the most part, you know. We heard about this thing – the man-in-the-middle attack – and so we just threw that in at the end”. He’s like “Really, that whole thing with certificates, it was a bit of a hand wave. We didn’t think it was gonna work, we didn’t know”.

The idea back then – you could say it made sense. If you look at the number of domain names on the Internet back in 1994, when that number is approaching zero (see graph), you know, it made sense that, okay, maybe you have 10 sites that you could identify as secure sites, so you have one organization that just looks at those 10 sites really carefully and makes a decision and signs the certificates. But, you know, if you try and scale that up over time to today when there’s almost a billion domain names on the Internet – and ideally, we’d like all of them to be secure – it seems a little bit unrealistic to think we’re gonna have an organization or even a set of organizations that’s gonna look appropriately, closely at all of these domain names.

So I asked Kipp about how they saw the scaling over time. He’s like “Oh, the scaling – we didn’t really think about that, because you got to remember that at the time this was designed, Yahoo! was a web page with 30 links on it – that’s what Yahoo! was.” Yeah, that’s different.

And history has really borne this out. I’ve been analyzing all the possible problems with SSL that have cropped up in the past. There have been some issues with secrecy and integrity, but these managed to sort of squeak by over time. There have also been some problems with user interaction – these are things like ‘SSLstrip’. But in terms of the protocol itself, the authenticity piece has been where all the real problems are. And I think, you know, looking back at the Comodo thing, your lesson from these events shouldn’t be that this was cyber war, because I think, pretty clearly, it wasn’t.

But this is happening every day – that’s the real story. You know, one of these domains the attacker got – login.live.com – I mean, we should remember that Mike Zusman got this just by asking for it. He didn’t have to export functions from .dlls or whatever – he just sent in a request. Eddy Nigg got mozilla.com with no validation at all, he just asked for it. VeriSign issued a code signing certificate from Microsoft Corporation to attackers that are still unidentified, they were never discovered. I mean, this kinda thing happens all the time. Just recently, I needed to get an SSL certificate, so I went to this website SSL-In-A-Box.com – you know, straight to the bottom of the barrel. It’s one of the things where you have to create an account in order to submit anything. So I go to create an account, and when I click ‘Create’, it just logs me into someone else’s account. I didn’t even try to hack this, I just want a certificate. So, you know, I logged out and tried to create an account again, and it logged me into someone else’s account, and every time I did it, I just got a different account. And the thing is I didn’t even bother emailing them about it because I’m sure that they don’t even care.

One of the certificate authorities published the key to their certificate in the public directory of their web server. And the thing is you might be able to understand how it’s possible that someone could have made this mistake, but it’s still there! It’s not like “Oh, crap!” – it’s since 2009 that the key to the certificate has been available to the public.

You don’t even have to hack anybody. If you’ve got the money, you can just buy a certificate authority. You can get a CA cert from GeoTrust – I think it’s 50 grand (see image). Anybody have 50 grand to spend? You’ve got a CA cert, intercepting all the communication on the Internet. I really like their iconography in the top-right corner, because it really is just like “We’re giving you the key to the world”. They’re not hiding anything.

And what if this were a state-sponsored hack – this whole Comodo thing? I think it’s worth realizing that the only reason that Iran would have to hack a certificate authority in order to issue certificates is because they don’t have a certificate authority of their own. But many other countries do. The EFF put together an excellent project called the ‘SSL Observatory’, where they scan the Internet, and they put together a map of all the countries in the world that are currently capable of issuing certificates and thus intercepting secure communication – and it looks like this (see image). I mean, I don’t know if you can see, but way out in the middle of the Atlantic, there’s a little red speck – that’s Bermuda. Bermuda can issue certificates. The good news is that the vibe around this sort of thing seems to be shifting: from the old vibe of total ripoff, which I think was the general perception of certificate authorities, to the new vibe of total ripoff and mostly worthless. There’s been a lot of talk about moving forward and replacing certificate authorities with something else, but I think that if we’re gonna do that it makes sense to really accurately identify the problem and figure out what it is that we’re trying to solve so that we don’t end up in the same situation again.

Now, there’ve been a few sort of general perceptions of what the problem might be. The first is people look at the EFF ‘SSL Observatory’ data, so the EFF scan the Internet and they put together a graph of all of the organizations in the world that are currently capable of signing certificates, and it’s a lot of organizations – in fact, it’s 650 different organizations that are currently capable of intercepting communication. And so, I think one simplistic reaction to this is just to say, well, the problem is there’s too many certificate authorities, there’s just too many of them, what we need is fewer certificate authorities. But I feel like this might be a little simplistic. Remember when there was only one (VeriSign), and they could charge as much and do really whatever they wanted? And part of the problem here is really a scaling issue where we’ve gone from maybe 20 secure sites to 2 million secure sites, and ideally we’d like a billion secure sites. You know, it seems like less is not really the answer.

General perceptions of SSL problems:

– Too many CA’s

– A few ‘bad apples’

– Scoping issue

Another idea is that it’s a scoping issue, that the problem is that the authorities are all in the same scope. For instance, two of the authorities who can sign certificates and thus intercept secure communication on the Internet today are the Department of Homeland Security and the state of China. Well, the problem is that the DHS can sign Chinese sites and China can sign U.S. sites, and if you just separated the scope so that China could only sign sites in China and the Department of Homeland Security could only sign sites in the United States, everything would be cool. I feel like that’s kind of a low bar. I think there’re plenty of people in China that probably don’t trust the state of China to certify sites even within their country, and likewise I feel there’re plenty of people in the United States who don’t trust the Department of Homeland Security to be certifying their communication either.

So what is the answer to this question? What is the problem?

I think it’s a good idea to look back at what happened to Comodo. Well… nothing happened to Comodo. But why? Why did nothing happen? What could we have done? If I decide that I don’t trust Comodo – and I don’t – the very best thing that I can do is remove them from the trust database (trustdb) in my web browser; I could say, okay, they are no longer a trusted authority. The problem is that if I do that, somewhere between a quarter and a fifth of the Internet just disappears, totally breaks, I can’t visit those sites anymore. And sure I could take an ideological stance to never visit those sites again because they are mixed up in the Comodo cabal or whatever, but really that’s not an appropriate response. And the thing to remember is that this is as true for browser vendors as it is for you or me: you know, a browser vendor cannot remove Comodo from their trust database, because they’re just gonna be breaking somewhere between a quarter and a fifth of the Internet for all of their users. They are in the exact same situation that you and I are. The truth is that somewhere along the line we made a decision to trust Comodo, and now we are locked into trusting them forever.

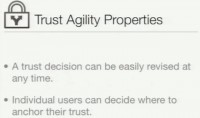

And I think that this is the essence of what we’re looking at today, that we can boil down all the problems that we’ve had with certificate authorities to a single missing property, and I call this property trust agility. The idea is that trust agility provides two things. The first is that a trust decision can be easily revised at any time. You know, there’re many people that say “Oh, Moxie doesn’t trust anybody”. That’s not true. I mean there’re plenty of organizations that I could identify today that I trust to secure my communication – for me, you know: Tor, Riseup, EFF, Carnegie Mellon. But what seems insane is to think that I could identify an organization or a set of organizations that I would be willing to trust not just now but forever, regardless of whether they continue to warrant my trust and without any incentive to continue behaving in a trustworthy way. The second property of trust agility is that individual users can decide where to anchor their trust. This could be the same thing as saying individual browsers can decide where to anchor their trust. And I think this is important.

Right now, there’s this idea that it’s a scoping problem, that VeriSign and Comodo are in the same scope and that if we just separated this scope, then if VeriSign did something particularly egregious, a site like Facebook could switch to a different certificate authority, and this would actually have some significance because VeriSign would be unable to continue signing certificates for Facebook, which is currently not the case. But, you know, I think if it’s been a struggle to get websites to deploy HTTPS or SSL to begin with, it seems a little bit farfetched to think they’re going to continue making really active decisions in our best interests. And what’s worse, in this increasingly globalized world, it doesn’t seem like it’s really possible to make one trust decision for everybody; you know, different people live in different contexts with different threats, have different needs and probably trust different individuals. And what’s more, it’s our data that’s at risk – not the site administrator’s, not that of the company that’s operating this web service. It’s the users’ data, and I feel like it should be the users or the browsers who decide who to trust.

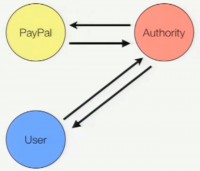

This property, that individual users can decide where to anchor their trust, is really just a simple but powerful inversion of the way things already work. Currently there are three entities involved in one of these transactions: there’s the client, the server and the authority. And this trust relationship is initiated by the server. The server talks to an authority and says “Hey, please certify me”. The authority responds and the certificate is eventually given back to the user through the site. And what we are talking about here is just doing a simple inversion where it’s the user – or the client – that initiates this trust transaction and talks to the authority saying “Please certify this site for me”, the authority certifies that site and responds back to the user (see image). The reason this is so powerful is because now this means the users can decide what authority they need to talk to, which means this issue of scoping is not such a big deal, right? The fact that the Department of Homeland Security can sign sites in China is not an issue because users in China will just ignore it and talk to some Chinese authority, or they might decide they don’t trust China either and they talk to some NGO or something else instead. I think that these two components of trust agility are really powerful, and I think that they are exactly what’s missing from the CA system today, and that is where all the problems have come from.

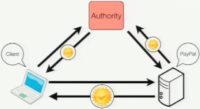

So I want to take a few minutes to talk about DNSSEC because there’s been a little bit of talk recently about using DNSSEC to replace the authenticity piece of SSL. And the basic idea is this: you take your SSL certificate on your site and you shove it into your DNS record. So you have a cert, you put it in your DNS record, and when a client goes to contact a site, it does a DNS lookup, it gets back a DNS response with not only the IP address, but also the server’s certificate embedded in the DNS response (see image). That way, when they connect to the server, the certificate they see is the same thing they got in the DNS response. And this thing is going to be authentic because it’s signed, because we’re using DNSSEC.
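The comparison step in this scheme can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the DNS response is assumed to have been fetched and DNSSEC-validated elsewhere, the record is assumed to carry a SHA-256 fingerprint of the certificate, and the function names and byte strings are made up for the example.

```python
import hashlib
import hmac


def fingerprint(cert_der: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate."""
    return hashlib.sha256(cert_der).hexdigest()


def matches_dns_record(served_cert_der: bytes, dns_fingerprint: str) -> bool:
    """Compare the certificate the server presented with the one from DNS.

    Assumes dns_fingerprint came from a DNS response whose DNSSEC
    signature chain has already been validated elsewhere.
    """
    return hmac.compare_digest(fingerprint(served_cert_der), dns_fingerprint.lower())


# Hypothetical byte strings standing in for real DER certificates.
site_cert = b"certificate published by the site"
attacker_cert = b"certificate injected by a man in the middle"
record = fingerprint(site_cert)  # what the site placed in its DNS record

print(matches_dns_record(site_cert, record))      # genuine connection
print(matches_dns_record(attacker_cert, record))  # intercepted connection
```

Note that the check is only as trustworthy as whoever signed the DNS record, which is the point the talk turns to next.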

Now, this scheme has a really immediate appeal, and I think it’s because people tend to mentally associate DNS with the word ‘distributed’, and ‘distributed’ sounds really good right now, it sounds like exactly what we need. After suffering under the centralized yoke of certificate authorities for all these years, it would feel good to just wipe them off the page or replace them with a distributed system instead.

But when you start to look closely at the way that DNS works and DNSSEC works, it’s information that is distributed, the information in the DNS records is distributed across the various zones on the Internet. But the trust is incredibly centralized and hierarchical, and this is actually exactly how the CA system works today, right? The information, the certificates are distributed across the web servers of the sites that are serving them on the Internet, and the trust is highly centralized in this hierarchy of certificate authorities.

The first class of people that we have to trust here is the registrars. If you feel like the CA’s are sketchy, these people are taking it up a notch. Firstly, I think it should be laughable that the current first step in deploying DNSSEC is to create an account with GoDaddy – I think that should be laughable.

Trust Requirements:

– The Registrars

– The TLDs

– The root

The second class of people that we have to trust here are the TLDs – these are the companies that manage the top-level domains. So, in the case of .com and .net – the largest TLDs on the Internet – the company that manages those is VeriSign: same player, same game. If you look at other TLDs like .org and .edu, the companies that manage them are probably not companies that you’ve ever heard of. I would at least suggest that if you were to think who’s a really trustworthy company, who really has a strong sense of integrity, these companies are probably not the first that would come to mind. Take a minute to look at the organizations that manage the other TLDs and look at the executive boards, look at the people managing operations and ask yourself – are these the people that I want to trust with all of my secure communication in the future? There’s also the country code top-level domains, so does everyone that’s using TLDs like .io, .cc, .ly trust the corresponding governments for these countries to secure all of their communication? What about TLDs like .ir and .cn? Should the citizens of these countries have to trust their governments with all of their secure communication to local sites? You know, we’ve seen the current picture of what countries around the world are capable of intercepting secure communication based on the EFF ‘SSL Observatory’ data. That picture would encompass pretty much all the countries in the world under DNSSEC. And if the recent domain seizures are an indication of the future, it seems like these TLDs could be dangerous.

And the third class of the people that we have to trust here is the root, and that’s ICANN. While ICANN has made a great effort to be a sort of global organization, as far as I know – and I could be wrong – fundamentally, they’re just a California 501(c)(3) non-profit, which, as far as I know, means that they have to abide by laws in the United States. And, you know, this legislation that’s been coming up recently, like COICA, PROTECT IP and this kinda thing – to me a real lesson here isn’t whether this passes or not, because there’s been some kind of heroic efforts to prevent this legislation from going through, but I think the thing to take away from this is that they’re trying to pass legislation that messes with this stuff, and maybe one day they’ll succeed, and I think ICANN would be subject to regulation in that case.

The worst part about all of these organizations is that this system actually means reduced trust agility; that today – even as unrealistic as it might be – I could still choose to remove VeriSign from my list of trusted certificate authorities, but there’s nothing that I can do to stop VeriSign from being the company that manages the .com and .net TLDs. So if we sign up to trust these people, we’re signing up not to trust them just now, but forever, regardless of whether they should continue to warrant our trust, with no ability to change our mind about whether we should continue trusting them, without any incentives to continue behaving appropriately.

‘Perspectives’ model

So, let’s talk about things that I’m a little bit more inspired by. There’s a project called ‘Perspectives’ which came out of Carnegie Mellon University, and it was done by Dan Wendlandt, David G. Andersen and Adrian Perrig. It was originally a paper that was published on using multi-path probing in order to provide authenticity for SSH and SSL. And the concept is fundamentally about Perspective.

The basic idea is this: you connect to a secure site, you get back a certificate, and you think “Well, I wonder if the certificate is good or not, how do I validate it?” Well, what you do is you contact an authority, then you say “Hey, what certificate do you see for PayPal.com (in this case)?” The authority makes its own connection to the website, gets its own certificate back, just like a normal web browser would, and then sends that certificate back to you as the client (see image). Now, you compare the thing you got from the authority with the thing you got from this site, and you make sure they’re the same. And so, what you’re essentially doing is you’re using some network Perspective to get a different view on the same site – you know, you have a different network path from wherever the authority is communicating from. We call these authorities ‘Notaries’, and you don’t have to talk to just one Notary, you can talk to any number of Notaries, and they can be distributed around the world so that each has their own unique network path to the same destination. We’re essentially building a constellation of trust.
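The Notary comparison described above can be sketched roughly as follows. This is not the Perspectives implementation: Notaries are modeled as plain Python functions rather than network services, and the `required` threshold and all names here are assumptions made for the illustration.

```python
import hashlib
from typing import Callable, Dict, List

# A notary is modeled as a function: hostname -> fingerprint it observes.
Notary = Callable[[str], str]


def fingerprint(cert: bytes) -> str:
    """Return the SHA-256 fingerprint of a certificate's bytes."""
    return hashlib.sha256(cert).hexdigest()


def make_notary(view: Dict[str, bytes]) -> Notary:
    """Build a fake notary that 'sees' the certificates in `view`."""
    def observe(host: str) -> str:
        return fingerprint(view[host])
    return observe


def notaries_agree(host: str, seen_cert: bytes,
                   notaries: List[Notary], required: int) -> bool:
    """Return True if at least `required` notaries see the certificate we saw."""
    ours = fingerprint(seen_cert)
    agreeing = sum(1 for notary in notaries if notary(host) == ours)
    return agreeing >= required


# Hypothetical certificates: three notaries on clean network paths see the
# real one, while a client on an intercepted path is handed a forged one.
real_cert = b"real paypal certificate"
forged_cert = b"forged paypal certificate"
notaries = [make_notary({"paypal.com": real_cert}) for _ in range(3)]

print(notaries_agree("paypal.com", real_cert, notaries, required=3))
print(notaries_agree("paypal.com", forged_cert, notaries, required=3))
```

An attacker sitting on the client's local network path can forge what the client sees, but not what Notaries elsewhere in the world see, so the forged certificate fails the comparison.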

This idea of using Perspective is actually not new, it’s how SSL works right now. You know, right now, if a site administrator wants to get a certificate for a site, what does the administrator do? They contact an authority and say “Hey, could you please issue a certificate for my site?” And what does the authority do? They send an email to the site with a verification code in it. And if the administrator can receive the verification code and send it back to the authority, the authority issues the certificate (see image). So it’s just using another form of network Perspective to do the same thing, we’re just trying to invert this relationship, so that instead of being site initiated, it’s user initiated.

Now, Perspectives, when it was released, came with an implementation, but the implementation was kind of limited. It was initially designed for self-signed certificates, and so it has had some challenges.

Perspectives’ challenges:

– Completeness

– Privacy

– Responsiveness

The second problem is privacy. If every time I make a secure connection to a website I have to make another connection to a Notary, I’m now leaking my entire connection history to the Notary, and that seems a little bit unfortunate.

And the last problem is responsiveness. Perspectives suffers from this idea of ‘Notary lag’. What would happen is you get a certificate, you contact a Notary and you say “Hey, what do you see for PayPal.com?” And the Notary would make a connection to PayPal.com and see the certificate. The problem is that the Notary would cache the response so that it wasn’t constantly making connections to all of these sites, and then just periodically at some interval poll the sites – you know, like once a day or something like that – all the sites that it had certificates for, in order to see whether a site had switched to a different certificate. The problem is that if a site did switch to a different certificate, your responses from the Notary would be invalid until the Notary polled that site again.
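A toy simulation makes the lag visible. The `PollingNotary` class below is a hypothetical stand-in, not code from Perspectives: the site is a dictionary, fingerprints are plain strings, and the periodic poll is triggered by hand instead of by a timer.

```python
from typing import Callable, Dict


class PollingNotary:
    """Toy notary that caches what it saw and refreshes only when polled."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch              # how the notary contacts a site
        self._cache: Dict[str, str] = {}

    def query(self, host: str) -> str:
        """Answer from the cache, contacting the site only on first sight."""
        if host not in self._cache:
            self._cache[host] = self._fetch(host)
        return self._cache[host]

    def poll(self) -> None:
        """Periodic refresh of every cached host (say, once a day)."""
        for host in list(self._cache):
            self._cache[host] = self._fetch(host)


# Hypothetical site state: host -> fingerprint currently being served.
site_certs = {"paypal.com": "fingerprint-v1"}
notary = PollingNotary(lambda host: site_certs[host])

before = notary.query("paypal.com")
site_certs["paypal.com"] = "fingerprint-v2"   # the site rotates its certificate
during_lag = notary.query("paypal.com")       # stale answer: this is the lag
notary.poll()
after_poll = notary.query("paypal.com")

print(before, during_lag, after_poll)
```

Between the rotation and the next poll, the Notary keeps vouching for the old certificate, so a client that saw the new, legitimate one gets a mismatch.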

‘Convergence’ model

So, what I’ve done is I’ve taken this concept of using Perspective and I’ve built on it to create a system that I call ‘Convergence’. Convergence is a new protocol, a new client implementation and a new server implementation of this concept. The first thing that we do is try to address Perspectives’ challenges. We eliminate Notary lag – basically, when you contact a Notary you also send what you saw. So now, the Notary doesn’t have to do any polling, it just has to contact the server in the case of a cache miss or cache mismatch – so there’s no more Notary lag.
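Here is a rough sketch of that Notary-side logic, assuming fingerprints are plain strings and the site is a dictionary lookup. The class and its `fetch_count` counter are invented for this illustration and are not taken from the Convergence code.

```python
from typing import Callable, Dict


class ConvergenceStyleNotary:
    """Toy notary that re-checks the site only on a cache miss or mismatch."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch              # how the notary contacts a site
        self._cache: Dict[str, str] = {}
        self.fetch_count = 0             # how often the site was contacted

    def verify(self, host: str, client_saw: str) -> bool:
        """Check the fingerprint the client reports having seen."""
        if self._cache.get(host) == client_saw:
            return True                  # cache hit: no network traffic
        # Cache miss or mismatch: look at the site right now.
        self.fetch_count += 1
        current = self._fetch(host)
        self._cache[host] = current
        return current == client_saw


# Hypothetical site state: host -> fingerprint currently being served.
site_certs = {"paypal.com": "fingerprint-v1"}
notary = ConvergenceStyleNotary(lambda host: site_certs[host])

first = notary.verify("paypal.com", "fingerprint-v1")    # miss, then match
second = notary.verify("paypal.com", "fingerprint-v1")   # served from cache
site_certs["paypal.com"] = "fingerprint-v2"              # site rotates cert
rotated = notary.verify("paypal.com", "fingerprint-v2")  # mismatch, re-fetch
forged = notary.verify("paypal.com", "fingerprint-bad")  # mismatch, rejected

print(first, second, rotated, forged, notary.fetch_count)
```

Because the client's report drives the refresh, a legitimate certificate rotation is picked up on the very first query after it happens, with no polling interval to wait out.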

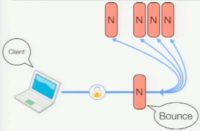

The next thing that we did was add privacy. This is two parts. The first part is local caching, so now whenever you contact a Notary and you say “Hey, what do you think of the certificate?”, if it says “Hey, this is okay” – you go ahead and cache that certificate locally. That way, the next time you connect to the site, you get the same certificate back and all you have to do is check the local cache and see if this thing is good, and you don’t even have to talk to the Notary. So now, you’re only leaking your connection history the first time you visit a secure site, or whenever the secure site’s certificate changes.

That still doesn’t seem that great, so the next thing we do is implement Notary bouncing. The idea is that you have a set of Notaries that you have configured as the Notaries that you trust, and you want to talk to all of them. And the first thing that you do is randomly select one of the Notaries and assign it as a bounce. You connect to that Notary, and then you tunnel SSL through the Notary to all the Notaries that you want to talk to (see image). So the bounce Notary is just a dumb proxy shuttling bytes around, and it doesn’t have any visibility into what you’re querying about; the Notaries that you’re talking to know what you’re asking about but they don’t know who you are, and the bounce Notary knows who you are but it doesn’t know what you’re asking about. These SSL connections to the destination Notaries are done using static keys that are configured whenever you add a Notary to begin with, just like a certificate authority in your browser now.
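The two privacy steps can be sketched as a small client-side planning function. Everything here is an assumption made for illustration: `plan_query` and `record_approval` are hypothetical helpers, Notaries are represented by plain strings, and the sketch leaves the bounce out of the list of queried Notaries so that no single Notary sees both who is asking and what is asked, a detail the talk does not spell out.

```python
import random


def plan_query(host, fingerprint, local_cache, notaries, rng=random):
    """Decide whether to contact Notaries and, if so, through which bounce.

    Returns None when the local cache already vouches for this certificate,
    otherwise a (bounce, targets) pair.
    """
    if local_cache.get(host) == fingerprint:
        return None  # same certificate as last time: nothing leaks to any Notary
    bounce = rng.choice(notaries)  # random Notary used as a byte-shuttling proxy
    # Assumption of this sketch: the bounce itself is not asked about the site.
    targets = [n for n in notaries if n != bounce]
    return bounce, targets


def record_approval(host, fingerprint, local_cache):
    """Cache a certificate locally once the Notaries have approved it."""
    local_cache[host] = fingerprint


cache = {}
notaries = ["notary-a", "notary-b", "notary-c"]

first_visit = plan_query("example.com", "fp1", cache, notaries, random.Random(0))
record_approval("example.com", "fp1", cache)
second_visit = plan_query("example.com", "fp1", cache, notaries)
after_change = plan_query("example.com", "fp2", cache, notaries, random.Random(1))
```

On the second visit the function returns `None`, so nothing is sent to any Notary; a changed certificate forces a fresh round through a newly chosen bounce.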

Convergence is a Firefox add-on, and it looks exactly like the normal Firefox experience; the only visible addition is that in the upper right-hand corner you get this little Convergence button (see image). If you click this button and enable Convergence, you are completely divorced from the CA system. For everything – foreground content, background content – the certificate authority certificates in your web browser are completely ignored. Everything looks exactly the same, except that normally when you visit a secure site and you put your mouse over the favicon, you’ll see a little tooltip about who has certified this communication. With Convergence we are taking the certificate authorities completely out of the picture, and everything else works the same.

The Notary implementation is available as open source, anybody can run their own Notary, it requires very few resources, and it’s designed to be extensible. The protocol is a REST protocol, and the idea is to design a protocol that would support a number of different back-ends. So the default back-end for the Notary is to use network perspective, but you could write any number of other back-ends for the Notary. For instance, if you like DNSSEC, the Notary could do DNSSEC to validate the certificate on the back-end, and you wouldn’t have to use network perspective. If you’re crazy, you could use CA signatures to validate certificates. You could even use Notaries as front ends to other services, like a Notary front end to the EFF’s ‘SSL Observatory’, which the EFF has volunteered to run.

And you can configure Notaries that do different things: you can have a set of trusted Notaries where each one does a different thing. The Convergence implementation also has a threshold that you can set on what percentage of the Notaries have to agree in order to reach consensus. What this means is that with the current CA system you have a certain number of certificate authorities, and if one of them is a bad actor – you’re completely out of luck. And we’re inverting that here: the more Notaries that you configure, the better off you are, because it means that all of them have to be in cahoots to misbehave or intercept your SSL communication. We have full trust agility: if we don’t like one of these Notaries, we can just remove it, and there are no complications, everything continues to work exactly as it normally would, nothing breaks. And if you like, you could replace it with a different one that does the same thing because you think they’re more trustworthy.
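The agreement threshold can be pictured with a few lines of code. This is a minimal sketch, not the add-on's actual logic: `meets_threshold`, the Notary names, and the treatment of the threshold as a fraction between 0 and 1 are all assumptions made for the example.

```python
def meets_threshold(votes, threshold):
    """Return True if enough Notaries vouch for the certificate.

    votes:     dict mapping a Notary name to True (agrees) or False (disagrees)
    threshold: required fraction of agreeing Notaries, e.g. 1.0 for unanimity
    """
    if not votes:
        return False  # with no Notaries configured, nothing can be vouched for
    agreeing = sum(1 for ok in votes.values() if ok)
    return agreeing / len(votes) >= threshold


votes = {"notary-a": True, "notary-b": True, "notary-c": False}

majority_ok = meets_threshold(votes, 0.5)   # 2 of 3 agree, above one half
unanimous_ok = meets_threshold(votes, 1.0)  # one Notary dissents

# Trust agility: dropping a Notary is just removing its entry.
del votes["notary-c"]
after_removal = meets_threshold(votes, 1.0)
```

Removing or swapping a Notary only changes the set of votes being counted; nothing else about the connection has to change, which is the trust agility property described above.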

Other nice things here are that the servers do nothing. You know, people don’t have to make any changes, which means we don’t have to migrate the Internet to anything else. All we have to do is implement Convergence in the four major browsers – and be done. That would be it, that would be the end of the CA system right there. We don’t have to make any changes across the Internet anywhere else. Other nice things are you don’t get any more self-signed certificate warnings; the concept of the self-signed certificate does not exist in the Convergence system. Certificates are certificates – that’s it.

There are a few problems. The first is what’s known as the ‘Citibank problem’. Right now, if you’re running Convergence and you visit Citibank.com, you will get a certificate warning – you know, an ‘untrusted certificate’ warning (see image). And the problem is that Citibank apparently has, like, a couple of hundred different SSL certificates, and each one is on a different SSL accelerator, so every time you connect you get a different certificate, which means that all the Notaries see different certificates, your browser sees a different certificate, and it looks identical to the case of being attacked. The good news is that there aren’t many sites like this on the Internet. In fact, Citibank was the only one that I could find. I’m sure that there are others, but they’re pretty rare, so while we might not need to migrate the Internet, we might have to ask a few of these sites to use sane practices – like not having hundreds of different SSL certificates.

The other problem right now is captive portals. If you’re running Convergence and you’re in an airport or in a hotel, you want to connect to the Internet and you get redirected to this captive portal where you have to type in your credit card number before you can actually access the Internet. Now, you want to secure this connection with the captive portal, but the captive portal’s not letting Internet traffic out, so you can’t contact your Notaries. So right now, you have to actually just unclick Convergence in order to deal with this thing. The good news is that almost always in these captive portal situations DNS traffic is still let through, so we only have to build a Convergence protocol over DNS, and it will work in a captive portal situation.

You can download the software from convergence.io – try it out, it’s a Beta. Look at the server stuff, and if you want to run a Notary, set one up; talk to people who might trust you and ask them to configure you as a Notary. Even if you’re not going to try Convergence, if you’re not into it, the one question that I want to leave you with here today is this: whenever someone is proposing another authenticity system, I think the question that we should all ask is “Who do we have to trust, and for how long?”. If the answer is “A prescribed set of people, forever” – proceed with caution. In the meantime, try Convergence. Thank you.
