Lin-Shung Huang from Carnegie Mellon presents a study at USENIX Security about clickjacking attack vectors and the defenses that can be deployed against them.

Hello, I am David Lin-Shung Huang from Carnegie Mellon. Today I will be talking about clickjacking attacks and defenses. I will introduce three new attack variants to show how all existing defenses are insufficient, and present a new defense that addresses the root causes. This is joint work with Alex, Helen, and Stuart from Microsoft Research, and Collin from Carnegie Mellon. This work was done while I was an intern at Microsoft Research.

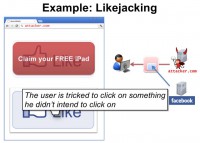

So, to begin, let me give an example of clickjacking on the web. Likejacking is a type of clickjacking attack that targets Facebook’s ‘Like’ button. So, suppose the user visits the attacker’s website. The attacker can embed Facebook’s ‘Like’ button on his page, and he wants to trick the user into clicking on it. So, how can he do that? First, he can create a decoy button that lures the user to click on it to claim a free iPad.

Then, he can reposition the ‘Like’ button exactly on top of the decoy button and, finally, he can make the ‘Like’ button completely transparent using CSS. So, when the user tries to click on the decoy button, he ends up being tricked into clicking on something he didn’t intend to click on (see right-hand image).

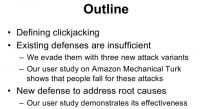

So, in this talk, first I’ll define clickjacking and characterize the existing attacks, then I’ll explain why existing defenses are insufficient and demonstrate three new attack variants that evade them. Finally, I’ll present a fundamental defense that addresses the root cause. We evaluated both attacks and defenses using Amazon Mechanical Turk, so we’ll tell you how many people really fall for the attacks and how much the defenses can help.

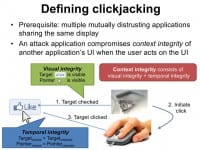

So, you’ve seen an example of clickjacking but let’s define clickjacking in a more general way. Clickjacking may occur when multiple distrusting applications are sharing the same graphical display. So, an attack application compromises the context integrity of another application’s user interface when the user acts on the UI.

Let me explain what context integrity means with an example of a user clicking on the ‘Like’ button. First, the user is checking the target, and if he intends to click, he initiates the click. After a couple of hundred milliseconds the target is actually clicked. So, what is the context integrity in this case?

When the user is checking the target, he recognizes that the target object, which is the ‘Like’ button, is what he intends to click. Furthermore, he checks that the cursor feedback is where he intends to click. These two things are the visual integrity.

In addition, the target and pointer shouldn’t change from when he initiated the click to when the target is actually clicked. This is the temporal integrity. And we know that this type of problem is similar to the time of check to time of use problem except that now it’s the user performing the check. So, context integrity consists of both the visual integrity and the temporal integrity combined.

So, we surveyed a bunch of existing attacks, and now let me show you some examples of how they compromise context integrity. I’m using a PayPal checkout dialog, just as an example (see left-hand image). One simple strategy to compromise the visual integrity is to hide the target. Browsers allow web applications to make objects completely transparent using the CSS opacity property. In some cases, the attacker may not need or want to hide the entire target: the attacker’s application can partially overlay important bits on the target to trick the user. We provide more examples, such as cropping, in our paper.

Another way to compromise the visual integrity is by manipulating the cursor feedback (see right-hand image). Browsers allow web applications to set custom cursor icons or even completely hide them, so, the attacker can set a cursor icon that displays the pointing hand away from the actual location of the pointer to mislead the user. So, when the user clicks on the decoy button the real pointer is actually clicking on the ‘Like’ button.

And as mentioned, context integrity consists of visual integrity and temporal integrity, so even if visual integrity isn’t compromised it’s still not enough to prevent all clickjacking attacks. The attacker can compromise temporal integrity using a technique we call Bait-and-Switch. The attacker baits the user to click on the decoy button which is the ‘Claim your FREE iPad’ button. When the user’s pointer hovers over the button the target is instantly repositioned under the pointer, and note that the display of the target object is fully visible and the pointer wasn’t altered but the user doesn’t have enough time to comprehend the visual change.

Lin-Shung Huang now describes the current clickjacking defenses and outlines the new attack variants that were evaluated using Amazon Mechanical Turk.

Existing Defenses

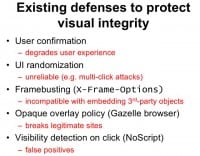

So, I talked about the existing attacks. Now, what are the current defenses to protect visual integrity? One method is user confirmation. The idea is simple: just ask the user again. But, of course, it’s really annoying. Another idea is to randomize the position of the sensitive UI, so that it is more difficult for the attacker to correctly position the decoy button under the target. However, it’s not reliable, since attackers may trick users into performing multiple clicks, and eventually the attacker will succeed.

Framebusting is currently the standard defense against clickjacking. The idea is to disallow embedding or framing of the object and cause the browser to render an error frame instead. Unfortunately, this is incompatible with third-party objects such as Facebook’s ‘Like’ button. Browsers can also adopt an opaque overlay policy that disallows transparency. However, it breaks existing sites.

Finally, there is a type of defense that allows embedded or framed objects and detects the visibility of the target object at the time of the user’s click. This is implemented in a Firefox extension called NoScript. However, it often triggers false positives due to its on-by-default nature.

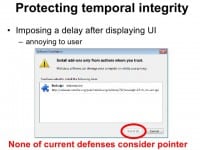

So, what are the existing defenses to protect temporal integrity? Well, one common approach is to impose a delay after displaying the UI, such that users have enough time to make an informed choice before performing the click. For example, when users install an extension on Firefox, they are required to wait a few seconds before they are allowed to click. We think this is actually a good technique, although sometimes it may be annoying to users. And at the time of our study, none of the current defenses considered the integrity of pointers.

New Attack Variants

In the next section I’ll introduce our three new attack variants that can evade the existing defenses and cause severe damage, such as accessing the user’s webcam, stealing the user’s email and revealing the user’s identity.

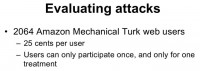

For each of the attacks I also want to show you how effective they are. To evaluate their effectiveness, we recruited roughly 2000 web users on Amazon Mechanical Turk. We offered 25 cents per user, allowing each user to participate only once, and only in one treatment.

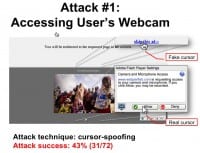

So, in our study we actually observed that 51% of users tried to skip the ad. So, suppose the user moves the cursor over to the ‘Skip Ad’ link and clicks. How many of you noticed that the real cursor was hidden all the time, and that now the cursor is on the ‘Allow’ webcam access button?

Our study shows that 43% of the users fell for the attack, and if you think about it, this is quite serious: getting webcam access to over 40% of users is actually really bad. And if we consider that 51% of the users tried to skip the ad, that’s over an 84% success rate on those users. And note that in this attack temporal integrity is preserved, since no Bait-and-Switch techniques were used. However, the visual integrity of the pointer isn’t preserved.
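The 84% figure can be reproduced from the two percentages quoted above. The short calculation below is only a worked check, and it assumes that everyone who granted webcam access was among those trying to skip the ad; the variable names are illustrative.

```python
# Figures quoted in the talk, as fractions of all participants.
fell_for_attack = 0.43  # clicked the 'Allow' webcam access button
tried_to_skip = 0.51    # tried to click the 'Skip Ad' link

# Success rate among the participants who tried to skip the ad
# (assumption: every victim was trying to skip the ad).
conditional_rate = fell_for_attack / tried_to_skip

print(f"{conditional_rate:.1%}")  # 84.3%
```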

As a result, the attacker was granted access to the user’s Google account. We found that 47% of the users fell for this attack and, again, this is extremely serious, as almost half of the users’ emails would have been accessed. Note that this attack works even though Google deploys a framebusting defense.

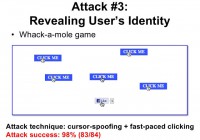

The attack combines cursor spoofing and fast-paced clicking techniques, and was the most effective attack that we have: we found that 98% of users fell for it. Once the user clicks on the ‘Like’ button, the attacker can instantly reveal the user’s identity. We describe how this is done in our paper.

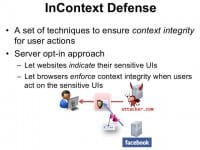

Now, we know that current defenses are insufficient in one way or another. The question is: can we design a better defense? We set a few design goals: the defense should support embedding third-party objects such as Facebook’s ‘Like’ button; the defense should not prompt users for each of their actions and force users to make bad security decisions; it should also not break existing sites and should be resilient to new attack vectors.

So, InContext is a set of techniques to ensure context integrity for user actions (see left-hand image). Since we don’t want to break existing sites, we use a server opt-in approach. We let the websites indicate their sensitive UIs, for example, Facebook can mark their ‘Like’ buttons as sensitive UI. Then we let browsers enforce context integrity when users act on those sensitive UIs.

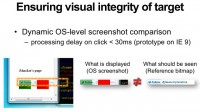

So, how can we ensure visual integrity of the target UIs? The basic idea is to ensure that what the user sees is what the sensitive UI intends to display (see right-hand image). We render these sensitive objects off screen in isolation of other websites and applications and we make sure that the screen image displayed to the user matches the reference rendering pixel by pixel.
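The pixel-by-pixel comparison described here can be sketched in a few lines. This is only a minimal illustration, not the IE 9 prototype: it assumes bitmaps are plain nested lists of RGB tuples, and the names `matches_reference`, `screen` and `reference` are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B)
Bitmap = List[List[Pixel]]     # rows of pixels

def matches_reference(screen: Bitmap, reference: Bitmap,
                      left: int, top: int) -> bool:
    """Return True only if the screen region whose top-left corner is
    (left, top) equals the isolated reference rendering pixel by pixel."""
    for y, ref_row in enumerate(reference):
        for x, ref_pixel in enumerate(ref_row):
            sy, sx = top + y, left + x
            # A target that is partly off screen counts as a mismatch.
            if not (0 <= sy < len(screen) and 0 <= sx < len(screen[sy])):
                return False
            if screen[sy][sx] != ref_pixel:
                return False
    return True

WHITE: Pixel = (255, 255, 255)
BLUE: Pixel = (0, 0, 255)

# Hypothetical 2x2 sensitive button, rendered off screen in isolation.
reference = [[BLUE, BLUE], [BLUE, BLUE]]

# Hypothetical 4x4 screen with the button drawn at (left=1, top=1).
screen = [[WHITE] * 4 for _ in range(4)]
for row in (1, 2):
    for col in (1, 2):
        screen[row][col] = BLUE

intact = matches_reference(screen, reference, left=1, top=1)

# An attacker overlays a single pixel on top of the button.
screen[1][2] = WHITE
tampered = matches_reference(screen, reference, left=1, top=1)

print(intact, tampered)  # True False
```

In this sketch, any difference at all (including partial transparency that blends the colors) causes the check to fail, so the click would not be delivered.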

We implemented a prototype of this on top of IE 9 and measured that the processing delay during each click is less than 30 milliseconds in the worst test case. So, without any code optimization, the performance cost is pretty reasonable, and it is only incurred when the user clicks.

Now, how can we ensure visual integrity of the pointer? This is trickier (see right-hand image). One strategy is just to remove cursor customization. So even if the attacker renders a fake cursor – the real cursor is always displayed and as you can see, again, there are two cursors moving on the screen.

We found that this reduced the attack’s success rate from 43% down to 16%, but some users may still be confused. Another disadvantage of this approach is that legitimate sites won’t be able to hide the cursor. For example, when playing a movie in full screen, showing a cursor would be annoying.

So, are there better defenses that can still allow cursor customization and provide visual integrity for the pointer? One method is to freeze the screen outside of the target display area when the real pointer enters the target (see left-hand image). So, for example, when the pointer enters the red area which is the sensitive UI, the screen freezes, including the video ad and the fake cursor, so you see the fake cursor is hanging outside of the ‘Skip this ad’ link.

The attack’s success rate dropped to 15%, which is similar to removing cursor customization. We tested this defense again, this time enlarging the target area with a margin of 10px, and it reduced the attack’s success rate to 12%. We then tested it with a margin of 20px, which reduced the attack’s success rate to 4%, lower than the baseline. The baseline is that around 5% of the users would click on the webcam access button even when not under attack.

So, this suggests that having a sufficient margin around the sensitive UI is very important in addition to screen freezing and it allows the users to notice when their pointer enters the sensitive UI.
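The effect of the margin can be pictured as a hit test against an enlarged rectangle: the screen freezes as soon as the real pointer enters the sensitive UI plus its padding. The sketch below makes that assumption explicit; `Rect`, `should_freeze` and the coordinates are hypothetical and are not taken from the prototype.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rect:
    left: int
    top: int
    width: int
    height: int

    def expanded(self, margin: int) -> "Rect":
        """Return this rectangle grown by `margin` pixels on every side."""
        return Rect(self.left - margin, self.top - margin,
                    self.width + 2 * margin, self.height + 2 * margin)

    def contains(self, x: int, y: int) -> bool:
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)

def should_freeze(target: Rect, pointer_x: int, pointer_y: int,
                  margin_px: int) -> bool:
    """Freeze the rest of the screen once the real pointer is inside
    the sensitive UI or its surrounding margin."""
    return target.expanded(margin_px).contains(pointer_x, pointer_y)

# Hypothetical sensitive button, with the real pointer 15px to its left.
button = Rect(left=100, top=100, width=80, height=30)
pointer = (85, 110)

for margin in (0, 10, 20):
    print(margin, should_freeze(button, *pointer, margin_px=margin))
# 0 False
# 10 False
# 20 True
```

With a larger margin the freeze triggers earlier, while the pointer is still approaching the target, which is one plausible reading of why the 20px margin gave users more chance to notice.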

We also tested a more obtrusive defense by rendering lightbox effects when the pointer enters the target (see right-hand image). Notice that the pixels of the grey mask are randomized; otherwise an attacker may be able to adjust its intensity to cancel the lightbox effect.

And to our surprise, we didn’t observe significant improvement using the lightbox effect, perhaps it’s because the screen freezing itself was already very effective. So, since screen freezing is less obtrusive, we leave the lightbox effect as an optional defense.

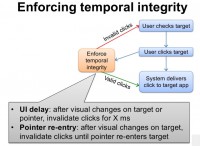

Now, how do we enforce temporal integrity? (see left-hand image) The normal flow of a user click is like this: the user checks the target, the user clicks the target, and then the system delivers the click to the target application.

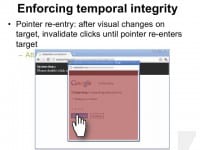

We enforce temporal integrity before the system delivers the click to the target application. We can use two techniques. One is the UI delay, which is, after visual changes on target or pointer – we invalidate clicks for a number of milliseconds. Another technique is pointer re-entry. So, after visual changes on target we invalidate clicks until the pointer re-enters the target.

So, first let’s see what UI delay does (see right-hand image). When the target UI or pointer changes, we invalidate clicks for X amount of milliseconds. In the case of double-click attacks, the second click would be invalidated because the target or dialog just appeared. We found that UI delays of 250 milliseconds and 500 milliseconds were both very effective against this specific attack: fewer than 2% of the users would still fall for it. This suggests that most users double-click faster than 500 milliseconds, and are therefore protected by this defense.
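As a rough illustration of the UI delay idea, the sketch below drops any click that arrives within a fixed window after the last visual change. It is a simplified model with explicit timestamps, not the prototype’s code; `UIDelayGuard` and its methods are hypothetical names.

```python
from typing import Optional

class UIDelayGuard:
    """Invalidate clicks for `delay_ms` after the target or pointer changes."""

    def __init__(self, delay_ms: int) -> None:
        self.delay_ms = delay_ms
        self.last_change_ms: Optional[int] = None

    def on_visual_change(self, now_ms: int) -> None:
        # Called whenever the sensitive UI appears, moves, or the
        # pointer's appearance changes.
        self.last_change_ms = now_ms

    def allow_click(self, now_ms: int) -> bool:
        # Assumption: with no recorded change, the UI is treated as stable.
        if self.last_change_ms is None:
            return True
        return now_ms - self.last_change_ms >= self.delay_ms

# Double-click scenario: the first click lands on a decoy at t=0, the
# sensitive dialog pops up under the pointer at t=50, and the second
# click of the double-click arrives at t=200.
guard = UIDelayGuard(delay_ms=500)
guard.on_visual_change(now_ms=50)

second_click = guard.allow_click(now_ms=200)  # still inside the window
later_click = guard.allow_click(now_ms=800)   # deliberate, later click

print(second_click, later_click)  # False True
```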

In some cases setting the right amount of UI delay is difficult, and so we have an alternative solution to enforce temporal integrity. Whenever the UI changes, we invalidate clicks until the pointer re-enters the target (see left-hand image). So, as you can see in this example, after the double-click, clicks are not delivered if the pointer did not explicitly enter the target. The dialog is enabled only after the pointer re-enters the target.

We found that pointer re-entry was extremely effective: it prevented all of our users from falling for the double-click attack. This approach does not require applications to set a certain amount of UI delay. Another advantage of pointer re-entry is that it cannot be gamed by an attacker who can anticipate the time of user clicks. For example, the attacker might try to display the target 500 milliseconds before the user clicks, which would probably defeat a 500 millisecond UI delay. However, this technique doesn’t apply to touch screens, because touch screens don’t have cursor feedback.
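Pointer re-entry can be modeled as a small state machine: a visual change disarms the target, and only a fresh entry of the pointer arms it again. The sketch below is an assumption-laden illustration of that idea, with hypothetical names (`PointerReentryGuard`, `on_pointer_move`), and is not the authors’ implementation.

```python
class PointerReentryGuard:
    """After a visual change, drop clicks until the pointer leaves the
    target and explicitly enters it again."""

    def __init__(self) -> None:
        self.pointer_inside = False
        self.armed = False  # clicks are delivered only while armed

    def on_visual_change(self, pointer_inside_target: bool) -> None:
        # The target appeared or changed, possibly right under the pointer.
        self.armed = False
        self.pointer_inside = pointer_inside_target

    def on_pointer_move(self, inside_target: bool) -> None:
        # Only a transition from outside to inside counts as an entry.
        if inside_target and not self.pointer_inside:
            self.armed = True
        self.pointer_inside = inside_target

    def allow_click(self) -> bool:
        return self.armed and self.pointer_inside

guard = PointerReentryGuard()

# The dialog pops up directly under a pointer that is already there.
guard.on_visual_change(pointer_inside_target=True)
guard.on_pointer_move(inside_target=True)   # small movement, still inside
blocked = guard.allow_click()

# The user moves out of the dialog and back in, then clicks.
guard.on_pointer_move(inside_target=False)
guard.on_pointer_move(inside_target=True)
delivered = guard.allow_click()

print(blocked, delivered)  # False True
```

Nothing in this model depends on elapsed time, which is why predicting when the user will click gives the attacker no advantage here.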

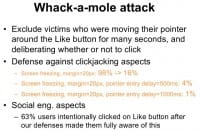

So, we’ve shown that our defenses are effective against cursor spoofing and double-click attacks individually. We wanted to see whether our defenses would still hold against a combined attack. As I mentioned, the Whack-a-mole attack (see right-hand image) combines cursor spoofing and fast-paced object clicking techniques.

We excluded victims who were moving their pointers in and out of the ‘Like’ button for many seconds and deliberating whether or not to click. This is because clickjacking defenses have no chance of stopping scenarios where users intentionally decide to click on the target.

So, we found that our defenses combined were able to reduce the attack’s success rate down to 1%. The pointer entry delay presented here is a UI delay that invalidates clicks for X amount of seconds after the pointer enters the target. We observed that this pointer entry delay is crucial to reducing the attack’s success rate, and a larger amount of delay is definitely better.

To our surprise, we observed that the social engineering aspects of this attack are still very effective although they are out of scope.

So, to sum up, we demonstrated new clickjacking variants that can evade current defenses. Our user studies show that our attacks are highly effective and that our InContext defense can be very effective against clickjacking. We are currently working with NoScript and PayPal to propose a new UI safety browser API to the W3C, using some ideas from this work. So, thank you for listening, and I welcome any questions.

Questions:

Question: Hi, I like the work and I am just curious about the annotation that you rely on from the server and I am curious how likely it is for an application developer to be able to decide a priori which elements are sensitive and which aren’t? Because it seems to me that like in Facebook’s case, any link inside my site is valuable therefore it would need this type of protection, but as for ads, well, I’m a little bit less concerned about that 3rd party content, there is no way for me to completely control that, right? But ad fraud is another big class of clickjacking, right? And so, I’m just curious whether we will be able to achieve this sort of annotation throughout the web in anticipation of any kind of future clickjacking attack?

Answer: So, is your question: “How do the servers determine which UIs are sensitive?”

Question: Right, it seems to me that it relies on the knowledge of the threat model, and as the threat evolves it’s possible that servers will not be able to annotate correctly. So clickjacking will continue to take place because this defense mechanism isn’t in place, right?

Answer: I can give some obvious examples of what should be sensitive like Google auth dialog, Facebook ‘Like’ button because it reveals the user’s identity, the webcam access dialog is certainly bad, so, these types of UIs, once the users click on them, they are revealing a lot of information to the site. I probably don’t have a very good answer to how servers decide which elements are sensitive or which are not.

Question: Thank you for the great talk! That was wonderful! I guess I was also wondering about a similar question about marking which elements are sensitive, and I was wondering if you considered other alternatives like maybe trying to automatically infer which elements are sensitive, I don’t know, maybe treating any cross-domain element as sensitive, or did you consider other options like that?

Answer: Not in this work but we can start from checking user click events and especially the clicks that are initiated by users and not initiated by scripts, so those are possibly more likely to be vulnerable.

Question: I was a little curious on the technique of that defense where you said you had to remove the pointer outside of the object and then back in – pointer re-entry. It seems like that’s something that might be confusing to a user because it’s not a requirement they might be familiar with, a necessity to actually move their pointer out of and then back into an interface element. Did you look at the ways that you could make that requirement clear to the users and whether they are confused by the necessity of actually moving their pointer out of the object and back in before it would be active?

Answer: I’m not an expert on that. Some ideas would be just graying it out or showing the countdown timer or something like that to hint the user, maybe there are better solutions to that.

Question: Did you measure explicitly if the users realized right after their click that they might have done something that wasn’t what they wanted to? Was there something after the actual activity where you asked the users, you know, did you really do what you intended or something like that?

Answer: So, in our paper we actually mention that we did an after-task survey, and we found that the answers were not consistent with what the users did. For example, when we asked users “Did you click on the Like button?”, they had clicked on it but answered that they hadn’t. Or for something like “Did you intend to click on it?”, they had clicked on it but said they didn’t intend to. We found it very confusing, and so we based our results on what they actually did, because we captured how their mouse was moving and we checked that data.