At the DerbyCon event, Metasploit core developer David Maloney aka “Thelightcosine” presents the ins and outs of making payloads undetected by antivirus software.

David: Good morning DerbyCon! That’s a lot of people for 10:00 in the morning, so I am just going to throw this out here. I can do this talk or we can have group nap time. What do you think?

Audience: Nap time!

David: Alright, alright; really happy to be here. I go to DerbyCon every year. This is my first year speaking here. I am really proud to be here. This talk is “Antivirus Evasion: Lessons Learned”, or my alternate title “1000 Ways not to Make a Lightbulb”, off the famous Thomas Edison quote of course.

So let’s get this out of the way real quick: who am I and why do you care what I have to say (see right-hand image)? I can at least answer the first one; you will have to answer the second one yourself. I am David Maloney aka “Thelightcosine” on the Internet. You can find me on Twitter and IRC: @TheLightCosine, and I am on freenode on the Metasploit channel.

I am a Metasploit core developer. I work on both Metasploit Framework and the commercial products. I am a member of the Corelan Team, which is my distinct privilege and honour. I am a sometimes member of the FALE Association of Locksport Enthusiasts. Any of my FALE guys in here? No? Oh, that’s sad… I am one of the founders of Hackerspace Charlotte and a former pen-tester; yes, former pen-tester. I took an arrow to the knee.

We don’t have enough time for everyone to introduce themselves, but how many people in this room are noobs? Alright, everyone who didn’t put their hand up – you are deluding yourselves. So, I like to start my talk these days with a quote from HD Moore. This is one of the first things HD ever said to me when I started hanging out in the Metasploit channel. I wanted to be upfront and said I was really interested in doing this stuff but I was a total noob, and HD said: “If you don’t think you are a newb, you’re not trying hard enough.”

I think that is a really important thing for the InfoSec industry and community. If you are not pushing yourself to do new things, things that make you a little scared that you are going to fail, then you are doing it wrong. You’ve got to keep challenging yourself to do new things.

The process for learning new things, in my mind, is first you learn it. So you get somebody to help you learn, you read up on the subject, you find all the information you can, you internalize it. Then you do it, you practice it over and over and over again till it really starts to sink in, but you still don’t actually know it yet. When you finally know it is when you start teaching it to other people. And that is really important, you have to do all three of these steps to really truly know something, and if you haven’t done all three of these things you’re probably still a noob.

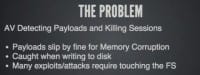

Let’s move on. Why are we talking about AV Evasion? If you are a pen-tester, this has probably happened to you at some point. You have gotten a shell, your exploit worked and then 20 seconds, 30 seconds, a minute later something happens and your shell dies. Chances are it was the antivirus that caught your payload on the system and nuked it.

Now, where this tends to be a problem is when we have to touch the file system. When we have a straight up memory corruption exploit, our payloads tend not to get detected. You can upload that payload to any AV vendor you want and you can see the detections, but when we are just inserting it right into memory AV is terrible at finding it. We haven’t put a lot of effort into AV evasion in the past, partially because Metasploit and exploitation in general were very focussed on memory corruption exploits, but the exploitation landscape is changing a lot lately.

Where we are getting caught most of the time is when we are writing to disk. This is things like using PsExec, I hope most people have at least a familiarity with using that module. Any command injection, exploits, anything that is going to write that payload to disk and then use some sort of method to execute it from disk – that’s where we are really running into our problems. A lot of the exploits and attacks that we are seeing now require that. Memory corruption is not dying completely but it is becoming less and less common to run into.

How do we get around this problem? Well, Metasploit has a series of its own unique challenges when talking about payloads. Number one is that we are a high profile target. One of the reasons Metasploit gets flagged all the time right now is because all of our executables have been downloaded by all of the vendors and they write a signature for it. You can take the default executable template for Metasploit payloads and check it on VirusTotal. There is maybe one vendor that isn’t flagging it even without a payload in it.

The next challenge is it must be redistributable. There can’t be any licensing requirements, it has to fit with our licensing scheme. It has to be something that works within our code base and within our Framework, and it has to make sense to deploy on a large scale.

I’ve had a lot of people over the past year come up to me and say: “Oh, well, I am using this crazy technique where I generate the payload and then I do all this stuff, I do this thing and this thing and then the payload doesn’t get caught.” That’s great and that works for an individual pen-tester. Where it doesn’t work is in the automated process of the Framework. We can’t easily shell out and do all these other things and then come back, it creates too many problems. So, what works for an individual on an engagement-by-engagement basis, and there are plenty of things that do, doesn’t necessarily translate into something that we can work into the Framework itself. So I was going to try and find the clip from Monty Python “How Not To Be Seen” here but unfortunately I couldn’t find a non-YouTube clip for it, so hopefully you are all familiar with it but…

Lesson 1 is don’t stand up, right? We just said: “If you don’t touch the file system you are not going to get caught,” so avoid touching the file system if you can, and I am just getting this out of the way. Memory corruption exploits are always a better way to go if AV is a concern, and if it’s not there are a lot of other tricks out there. The most prominent and successful one lately is the use of PowerShell, and I am not going to go too in-depth in that because there are a lot of smarter people doing a lot of cooler stuff with PowerShell than me. You can check out the PowerSploit guys, Carlos Perez did a training class at the beginning of DerbyCon, but PowerShell gives us a lot of opportunity to execute code without ever writing anything to disk.

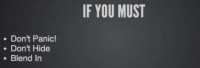

If you absolutely must touch the file system – don’t panic, don’t hide, blend in. That is going to be kind of the theme for this whole talk (see left-hand image). A lot of people, when they do antivirus evasion, are trying to hide, and what they really need to be doing is blending in.

A lot of people use obfuscators, encoders, encrypters, packers. It gets them good results, at least at first, and this is what they think they look like (see leftmost image to the right). Here is what they really look like (right-hand part). Yes, it is going to work for a little while but pretty soon you are going to get spotted and then you are right back to where you were.

So, real quick we are just going to define some terms (see right-hand image), hopefully everyone is familiar with this. In the antivirus world we are going to be talking about signatures, which is basically just pattern recognition on recognizable pieces of code. Heuristics is an AV detection mode where it is basically going to emulate the execution of the program in some manner and try and come up with a match for the behavior of the program. And then there is sandboxing, and sandboxing is where basically it just creates an isolated environment where the program can execute but doesn’t really have full permission to do all the things that it might.

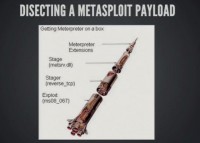

So, some Metasploit terms (see left-hand image). A payload is the code that an exploit actually delivers and is executed by the exploit. When we are talking about a payload it is often broken down into a stager and a stage. So a stager is the initial shellcode that we send, that is small enough to fit in whatever buffer, like a buffer overflow or any kind of memory corruption exploit, and it connects back to Metasploit and pulls down the rest of the payload, which is significantly larger, and that is what we refer to as the stage.

So this distinction between stager and stage is very important when we are talking about AV evasion, because what initially gets sent down is the stager, and that is one of the key areas where we are getting flagged.

Here is a nice little analogy for how you can think about a Metasploit payload (see right-hand image). You have got your delivery system, and in this case I am throwing ms08_067 up as an example because it is everybody’s favorite exploit. Then the exploit is going to put the stager, in our case reverse_tcp, into memory. The reverse_tcp stager creates a reverse connection back to our Metasploit handler and pulls down metsrv.dll, which is the stage for Meterpreter.

Metsrv.dll then has a bunch of other extensions that it comes and pulls down and loads into memory on that box. The stager creates executable memory and then connects back, pulls the stage down, puts it in that memory and executes it.

Now, in an EXE this works slightly differently (see right-hand image). The exploit method is just creating that RWX memory segment from the executable and then pulling our payload down and shoving it in. One of the big problems we have currently is that whenever we drop in the EXE that executable is just storing our shellcode in a variable somewhere. We send that executable and the payload is right there recognizable inside a variable somewhere stored in memory.

So the goal of my research and this talk was to discover specifically what detections are triggering on. Everyone has been talking about different techniques: packers, encrypters, or some clever little things they are doing. What I wanted to know is why some things work and why others don’t. So I took all the ways that people have talked about doing antivirus evasion, broke them down piece by piece, and said: “OK, how many detections do we get when we do this thing? How many do we get when we do that?” so that we have a real understanding of what’s going on when we get detected.

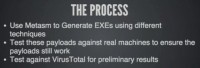

The process that I went through (see right-hand image) is that I used what’s called Metasm. Is everyone here familiar with Metasm, anyone? Metasm is a wonderful Ruby library that is an assembler, a disassembler, a C compiler, a debugger – all in one. It’s an amazing piece of software and we can use it for wonderful metaprogramming and rubying, we use this in Metasploit.

What I did is I took each technique and generated C code with Metasm, compiled it into an EXE and then automatically uploaded it to VirusTotal and pulled those results down and sorted them based on what the detection was. Nobody is screaming yet, that’s surprising.

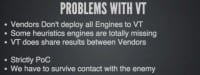

I said VirusTotal. There are some problems with VirusTotal (see right-hand image). The vendors don’t deploy all of their engines to VirusTotal, so your results on that site actually are not 100% accurate. Some of them don’t put heuristics up at all, you are only going up against signatures, and the thing that everybody loves to scream about is that VirusTotal shares those results with the vendors. So if it gets flagged by one vendor but not some of the others, VirusTotal will redistribute that information to all the vendors so that they can sig the same thing.

Why this doesn’t actually matter for our process is that this is all strictly proof of concept, and if we can’t survive this then our technique is broken anyways because we are doing the same thing everybody has been doing.

Antivirus evasion, malware – all of this is sort of an arms race. We come up with something new to get past antivirus, and antivirus finds a way to detect it. We find something new and we just keep going in this pattern back and forth and nothing really breaks the cycle. So what I am looking for is some way that we can break that cycle and do something that really just gets us beyond doing this same routine, the same song and dance over and over again.

So the first question you have to ask is: “Where are these detections coming from?” Well, the one we have been seeing for years and telling people is the biggest problem is the executable template. When we build an EXE in Metasploit Framework or Pro today, we have pre-built executables that are used by default. It shoves our payload in there, takes that executable, sends it to the file system and it just runs.

Well, like I said, you can upload those templates with no payload whatsoever and it still detects it as malicious on 42 vendors, which is really hilarious because our default template in Metasploit is the ApacheBench software which is a completely legitimate tool. The Apache guys probably love us for that one.

The next place it is getting caught is the stager. Reverse_tcp is an incredibly recognizable piece of shellcode at this point and it contains a common piece of shellcode called BlockAPI that was written by Stephen Fewer, and most shellcoders use BlockAPI.

What BlockAPI is for: when you are doing a memory corruption exploit you don’t know what the addresses of any of the Windows API functions are. You need a way to call those functions reliably and get the addresses for them. So BlockAPI goes through and actually tries to find a way to call those functions without already knowing what the addresses are. It is a great piece of shellcode, it is highly optimized, it is very small, but the problem is everybody uses it, so every AV vendor knows to look for it.

One of the things that I thought might be a problem is creating RWX memory. Creating executable memory at runtime sounds a little suspicious, right? I mean it is not that easy to think of a scenario where a legitimate program at runtime is going to create a whole new section of memory and mark it as executable. Why would you do that?

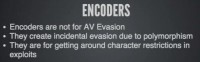

The last possible place that we are getting flagged is the encoder, and this is going to be a little important later but I am going to say this upfront – encoders do not evade antivirus, once again – encoders do not evade antivirus. If you come into the Metasploit channel and say: “My payload is getting flagged, I don’t understand, I ran it through an encoder,” – I’m going to hurt you.

Alright, so how do we get around the problem of the EXE Template? Well, like I said, the default template with no payload is 42 detections. We have the ability for users to supply a different EXE Template, and any of the exploits that do executable drops actually have an advanced option on there called EXE Templates. So if you do Show Advanced in Metasploit Framework you will see that option. You can point it to a totally different template. The problem is this still only cuts the detection rate about in half. Part of that is because of other things that we are doing to the executable template, and some of it is because of the stager and so forth.

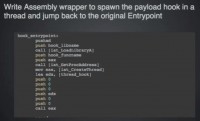

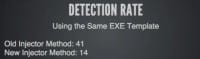

So the first thing I set to do was fix our injector method (see left-hand image). The way our injector works is it creates a new segment in the executable, which then calls VirtualAlloc to create RWX memory. We have a couple of different ways of building EXEs, one of which is just to inject, which preserves the original functionality of the executable. And what we did before was we would have this new segment and the segment would call VirtualAlloc to create RWX memory and stick our payload in it and execute it.

Well, that was getting flagged pretty quickly. So this is actually now in Metasploit Framework, this is the only piece of this talk that is already implemented in Metasploit Framework. A lot of this stuff is not going to be directly implemented but that’s why I am sharing the concepts with you guys.

What we do is we use Metasm to create a new RWX stub inside our executable. So executables are broken down into sections, one of which is the text section that contains the actual code that is executed, so we just basically create a new text section in that executable (see right-hand image). Then we take our existing payload and just basically decode it back into assembly instructions and shove it into that section (see left-hand image). We have the ability to set a buffer register which is part of the encoders for polymorphic encoding. This basically tells our encoder where the payload is in memory.

And then we just write a little assembly stub around it, and all it does is create a thread, execute our payload in it and then jump back to the original entry point of the executable (see right-hand image).

What does it look like if you run this executable? Let’s take calc.exe, it is actually executing a totally different section of code that we have created but all it does is spawn off a little thread in the background that you are probably not going to notice, our payload runs in it, and then it jumps right back to the original part of the code the developer wrote. So your calculator pops up and everything works as normal, but as long as calculator is up you’ve got a Meterpreter shell running in the back. Pretty fun stuff.

There are some more technical details, but this cuts the detection rate from 41 detections for the injector method down to 14 (see left-hand image), pretty nice, pretty nice, if you are not dealing with one of those 14 AV vendors. It gets us a little bit closer but doesn’t really solve the problem.

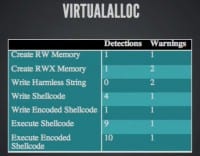

So, what I set about doing was to cut through and just do some basic stuff. What’s getting flagged? Is it the RWX stub creation, is it executing the memory, what specifically is getting detected here?

I ran through and created a bunch of different executables. The first one just creates read-writable memory at runtime and that actually got flagged, which seems a little odd. Then we created read-writable executable memory, still did nothing with it, we are not writing anything in there, we are not executing anything; still gets flagged. What if I write non-executable data into that memory but don’t try and execute it, don’t do anything with it? Well, all the serious detections drop off. We still get a couple of warnings, these are, like, suspicious programs, potentially unwanted programs, that kind of thing, but the actual detections start to drop off.

What if we write some actual shellcode in there? Well, we get detections but not that many, right? Let’s try an encoder – that dropped the detections down but we are still getting detected a little bit. When we actually start executing it though – then we see those detections spike back up into the areas that we were expecting to see around nine, ten, plus some warnings.

So, from this we can conclude (see left-hand image) that creating RWX memory is not the primary thing that it’s flagging on. And there are safe ways to use VirtualAlloc and there are unsafe ways to use VirtualAlloc in terms of getting detected. It seems to be very contextual, which says that we are not actually getting flagged by pattern matching, we are actually getting flagged by heuristic analysis, so actually running or emulating the run of that executable and detecting behaviour.

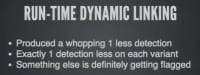

I was going to have a whole section on this but it turned out to not be all that useful. For anyone who doesn’t know run-time dynamic linking (see right-hand image), Windows provides the ability to, at runtime, load a DLL, grab a function address and call it without having to have that function in the import address table.
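As a rough illustration of the idea only: on Windows, run-time dynamic linking is done with `LoadLibrary` and `GetProcAddress`. The sketch below is a Python analogue rather than the Windows API itself. It resolves a module and a function from plain strings at runtime, so nothing in the code statically imports the function being called. The helper name `resolve` is hypothetical.

```python
import importlib


def resolve(module_name, function_name):
    """Load a module and look up one of its functions by name at runtime."""
    # Plays the role of LoadLibrary: the module is chosen by a string.
    module = importlib.import_module(module_name)
    # Plays the role of GetProcAddress: the function is chosen by a string.
    return getattr(module, function_name)


# Nothing above mentions math.sqrt; it is only named here, as data.
sqrt = resolve("math", "sqrt")
print(sqrt(16.0))
```

The point of the analogy is that the callee only appears as data, which is why a purely static look at the imports does not reveal it.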

This is a way of obfuscating, against static analysis, which Windows API calls we are actually making. Unfortunately this only dropped one detection across the board. I applied run-time dynamic linking to all those previous methods and it uniformly dropped one detection. So it turned out to not be all that useful. But it does clue us in that the old signature-based pattern matching that we used to worry about, where you say: “If you just make the code look a little different, you will get past it,” obviously doesn’t hold true anymore.

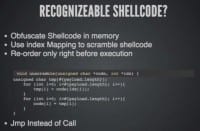

So my next thought was: “Maybe it’s just recognizing the shellcode in memory,” because we are just shoving all the shellcode in straight order into memory somewhere, whether it’s in a new code segment, whether it’s in a variable (see left-hand image).

What if I scramble that shellcode up? So go back to putting in a variable for a minute, and instead of writing the bytes in order, we write them all out of order, and then we create an index map that just tells us where the bytes actually belong, and then we just unscramble it right before we execute it (see right-hand image).
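The index map idea can be sketched on ordinary data. This is a minimal sketch, not the code used in the research: the function names `scramble` and `unscramble`, the use of Python's `random` module, and the placeholder bytes are all assumptions made for illustration.

```python
import random


def scramble(data, seed=None):
    """Return the bytes in shuffled order plus an index map.

    index_map[i] is the original position of scrambled[i].
    """
    rng = random.Random(seed)
    index_map = list(range(len(data)))
    rng.shuffle(index_map)
    scrambled = bytes(data[i] for i in index_map)
    return scrambled, index_map


def unscramble(scrambled, index_map):
    """Put every byte back where the index map says it belongs."""
    restored = bytearray(len(scrambled))
    for position, original_index in enumerate(index_map):
        restored[original_index] = scrambled[position]
    return bytes(restored)


# Placeholder bytes stand in for the payload; this is not real shellcode.
sample = b"placeholder bytes, not real shellcode"
scrambled, index_map = scramble(sample, seed=1234)
assert unscramble(scrambled, index_map) == sample
```

The stored bytes no longer appear in their original order, but the map needed to restore them has to be stored right next to them.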

It’s basically an obfuscation technique. The shellcode can’t be pattern matched because it’s just a random series of bytes at the moment. I also experimented with: “Well, maybe if we use a Jmp instead of a Call,” so what we typically do is we write our shellcode and we dereference it as a function and then we call that function. What if we instead use an assembly Jmp instruction to just jump into it as if it weren’t a function? Does anyone think that made any difference? No. It didn’t make any difference.

Not starting to feel too smart at this point, really. Some of these ideas worked a lot better about a year ago when I first started doing this and have since pretty much dried up. So the index map made no difference, the Jmp versus the Call made no difference, again it’s not pattern recognition that is working here, that’s pretty much a given.

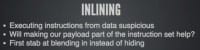

Alright, what about inlining it? Like I said, typically we have been shoving stuff into a variable, then doing some dereferencing tricks in C, and then executing it as a function. Executing instructions from data is inherently suspicious, it’s not a normal thing to do, it’s not a normal thing that executables do. What if we instead replace the actual instructions of the executable so that our payload is the instruction set, it is the text section of that executable? This is sort of my first stab at this idea of blending in instead of hiding.

So the analogy that I think most aptly describes this concept is, imagine you’ve got a guy who wants to rob a jewellery store. There are two different scenarios. In one, the guy is waiting for everybody to clear out and he hides in an alleyway somewhere, a cop walks by and sees this guy standing around in an alleyway not doing anything. He is not doing anything that is immediately recognizable as bad. But do you think that cop is going to come check him out? Yeah, he is.

Now imagine if instead our thief, in the second scenario, dresses up as a meter maid or somebody fixing the street lights, somebody that cop expects to see, something that is completely normal. The cop is going to walk right by because he is already preconditioned to see that guy and say: “No, it’s cool.” That’s the same problem with antivirus evasion: everybody is trying to hide in the alleyway when what they need to be doing is looking like something the antivirus already expects to be there.

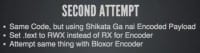

Basically, what I did is I took Metasm, took the payload and just wrote it in as raw assembly instructions and recompiled the EXE. This (see left-hand image) is what our first attempt looked like.

The second attempt (see right-hand image) is to basically do the same thing but we tried encoding it a couple of different ways. Because we control the generation and the executable, it’s really easy to set everything as read-writable executable so our polymorphic shellcode can write over itself, and encoded shellcode works really well.

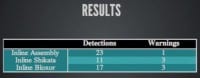

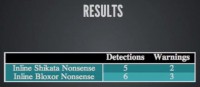

So we tried this with Shikata Ga Nai, which is the most common one that everybody uses and knows and loves, and also Stephen Fewer’s new Bloxor Encoder. Results weren’t really good (see left-hand image). Doing inlining actually increased our detection rate by about double where we were right before this. Shikata dropped it but only dropped it back to the same level that we had a problem with in the first place. And Bloxor was almost as bad as not encoding it. That was somewhat disheartening but gets back to – encoders do not evade antivirus. I am going to keep driving this home because I keep seeing it.

That got me thinking as I was looking at those results, every time we encoded the shellcode there were a certain set of detections that were always there when we used an encoder, that’s a little weird. Encoders have always been incidental in their AV evasion usage; they are actually there to remove bad characters for an exploit. Because it makes the code look different, we have gotten past signature detection but, as we said, signatures really aren’t our problem.

So my next thought was: “I wonder if the encoder itself is generating additional detections beyond the payload itself.” What I did was I took a series of meaningless assembly instructions, and it was just: push eax, push ecx, pop eax, pop ecx; basically, swapping two registers around, four or five bytes of shellcode, run that through our two encoders and push that up to VirusTotal – still get a whole bunch of detections.

This should be the final nail in the coffin, if any of you do not believe me that encoders do not evade antivirus: encoders cause antivirus detections, they are suspicious. They look like packers, people have been using packers for, I don’t know, twenty years now, they do not work, do not use them. They are great for exploits if you are doing a memory corruption exploit because you need to get away from bad characters, but if you are doing PsExec and you tell me: “I don’t understand why my payload got caught, I ran it through Shikata Ga Nai five times,” – I will kill you!

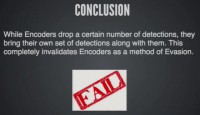

Conclusion about encoders: they do drop the number of detections but they come with their own whole set of detections that they bring all on their own, so it’s a complete fail.

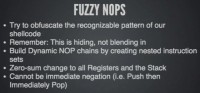

What can we do if we are not using encoders? Well, we still want to break up the way that code looks, right? So I came up with this idea of generating fuzzy NOPs (see left-hand image). These are NOPs that are not your standard NOPs, they are not your 0x90. It’s actually a series of instructions that at the end have a zero sum. So the registers and the stack all must be in the exact same state at the end of the instruction set as they were at the beginning. And you can just nest these as deep of a level as you want as long as you always back everything out at the end.

Immediate negation doesn’t work because that’s really obvious, and heuristics can easily just, basically, ignore it. So if you inc eax and then dec eax, AV is going to be smart enough to realize that: “Hey, that is a no operation really.” So we nest about four levels deep: inc eax, push ecx, do something else, pop ecx, dec eax.
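The zero-sum property can be checked with a toy model. The sketch below is an assumption-laden illustration, not the generator from the talk: it simulates only a handful of instructions (`inc`, `dec`, `push`, `pop`, `mov` with an immediate) on a dictionary of registers and a list used as the stack, and it ignores CPU flags, which `inc` and `dec` do change on real x86. The `mov ecx, 0x1234` line is a hypothetical stand-in for the "do something else" step.

```python
MASK = 0xFFFFFFFF  # keep register values within 32 bits


def run(program, registers, stack):
    """Execute a toy instruction list and return the new (registers, stack)."""
    regs = dict(registers)
    stk = list(stack)
    for instruction in program:
        op, reg = instruction[0], instruction[1]
        if op == "inc":
            regs[reg] = (regs[reg] + 1) & MASK
        elif op == "dec":
            regs[reg] = (regs[reg] - 1) & MASK
        elif op == "push":
            stk.append(regs[reg])
        elif op == "pop":
            regs[reg] = stk.pop()
        elif op == "mov":
            regs[reg] = instruction[2] & MASK
        else:
            raise ValueError("unknown op: %s" % op)
    return regs, stk


def is_zero_sum(program, registers, stack):
    """True if registers and stack end up exactly as they started."""
    return run(program, registers, stack) == (dict(registers), list(stack))


nested = [
    ("inc", "eax"),
    ("push", "ecx"),
    ("mov", "ecx", 0x1234),  # hypothetical "do something else" step
    ("pop", "ecx"),
    ("dec", "eax"),
]
print(is_zero_sum(nested, {"eax": 7, "ecx": 9}, [0xDEADBEEF]))  # True
```

A generator would only emit sequences that pass a check like this, which is what lets them be nested to any depth.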

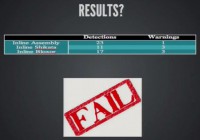

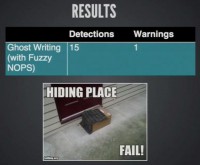

It looks like legitimate assembly instructions. It’s a series of operations but in the end we have done absolutely nothing. Then you take these and you insert them between all the recognizable sets of instructions in our payload, and hopefully that’s going to break up detection. Nope, it didn’t do a darn thing, we ended up with the exact same results that we got without doing this (see right-hand image). So, unfortunately that’s a big fail.

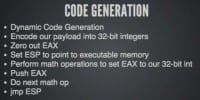

One of my co-workers said to me: “What about doing code generation, what if you never put your payload in the executable at all; instead, you have your executable generate the code for your payload?” Basically, the way this works (see right-hand image) is you take a register, any register, in this case I use EAX; zero it out; you encode your payload as a series of 32-bit integers, for which you have to align the payload first because a lot of them aren’t aligned properly for that. But you zero out a register; you set ESP which is your stack pointer, it tells you where the top of the stack is. You take ESP and you point it into executable memory to where your code is, to some placeholder function that you have that has instructions you don’t care about. And then you start performing math operations.

You take your first 32-bit integer, which will be the last set of bytes in your payload, and you add that number to EAX. Then you push it to the stack, only now your stack is in executable memory. Then you look at your next 32-bit integer and you take the difference between that and the value in EAX, and you do a math operation to get EAX to equal that next integer. You keep doing that over and over, pushing each integer to the stack.
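The arithmetic behind this can be sketched in isolation. The following is only a model of the bookkeeping, with several assumptions: the helper names are hypothetical, padding uses zero bytes, the words are little-endian, and every "math operation" is modelled as an addition modulo 2^32. Nothing here touches real registers or memory.

```python
import struct

MASK = 0xFFFFFFFF


def to_words(payload):
    """Pad to a multiple of 4 bytes and split into little-endian 32-bit words."""
    padded = payload + b"\x00" * (-len(payload) % 4)  # zero padding is an assumption
    count = len(padded) // 4
    return list(struct.unpack("<%dI" % count, padded)), padded


def plan_adds(words):
    """For each word, last one first, the amount to add so EAX equals that word."""
    eax = 0
    deltas = []
    for word in reversed(words):
        deltas.append((word - eax) & MASK)
        eax = word
    return deltas


def simulate(deltas):
    """Replay 'add eax, delta; push eax' and return the bytes left in memory."""
    eax = 0
    pushed = []
    for delta in deltas:
        eax = (eax + delta) & MASK
        pushed.append(eax)
    # The stack grows downward, so the last value pushed sits at the lowest address.
    return b"".join(struct.pack("<I", value) for value in reversed(pushed))


words, padded = to_words(b"placeholder data")
assert simulate(plan_adds(words)) == padded
```

Only the deltas would appear in the generated program; the original bytes show up in memory once the pushes have run.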

So our payload is never actually a part of the executable, it’s never in memory until the end of execution, and it just builds that code on the fly. That seems like it should work, right, your payloads are not there to be seen. I see people shaking their head… And then we ‘jmp esp’ at the end of course.

Nope! Totally didn’t work (see left-hand image). This kind of blew my mind because I’ve still got in my head this idea that signatures are still a big part of antivirus evasion. That’s obviously not true.

We are still doing this, still hiding, because what we are doing still looks suspicious. We are moving the stack into executable memory and then we are writing stuff there. What program does that? I mean, what legitimate developer uses that as a programming technique? You show me the developer that does that and I will show you a shitty developer.

Ok, let’s talk about Ghost-Writing, oh sorry, not that Ghost-Writing (see left-hand image), 80’s reference, hopefully some people get it.

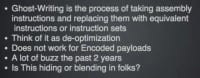

Ghost-Writing (see right-hand image) has been a big thing that people have been talking about, especially the past two years. Some guys did a really good talk on it last year, I believe. And there have been a bunch of blog posts on it this year. Ghost-Writing is basically this idea of – you take your payload or whatever assembly that you’ve got running, and you go through it and you say: “What can I replace this instruction with? Ok, I have increment EAX. Well, what if I do something else that has the same effect?”

In our case it may be multiple instructions to replace a single instruction. One of the big problems with Metasploit payloads when we are talking about dropping to the file system is that they are written for memory corruption, which means they have a bunch of broken assumptions when you talk about dropping to the file system. One of which is that the size of the payload is really important. So we optimize everything to be the fewest bytes possible.

We don’t have that concern when we are dropping in an executable. We have as much space as we want. So let’s replace all these instructions with multiple instructions that end up doing the same thing but don’t look like the code that you’re expecting to see.
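Instruction substitution of this kind can be illustrated on a toy instruction set. The rewrite rules and the tiny interpreter below are hypothetical and model only register values (no flags, no memory). They are meant to show what "same effect, different instructions" means, not how any particular tool implements it.

```python
MASK = 0xFFFFFFFF

# Hypothetical rewrite rules: one toy instruction -> a longer equivalent sequence.
REWRITES = {
    ("inc", "eax"): [("add", "eax", 2), ("sub", "eax", 1)],
    ("mov", "ecx", 0): [("xor", "ecx", "ecx")],
}


def run(program, registers):
    """Execute toy instructions and return the resulting register values."""
    regs = dict(registers)
    for instruction in program:
        op, dst = instruction[0], instruction[1]
        if op == "inc":
            regs[dst] = (regs[dst] + 1) & MASK
        elif op == "add":
            regs[dst] = (regs[dst] + instruction[2]) & MASK
        elif op == "sub":
            regs[dst] = (regs[dst] - instruction[2]) & MASK
        elif op == "mov":
            regs[dst] = instruction[2] & MASK
        elif op == "xor":
            regs[dst] ^= regs[instruction[2]]
        else:
            raise ValueError("unknown op: %s" % op)
    return regs


def ghost_write(program):
    """Replace every instruction that has a rule with its equivalent sequence."""
    rewritten = []
    for instruction in program:
        rewritten.extend(REWRITES.get(instruction, [instruction]))
    return rewritten


original = [("mov", "ecx", 0), ("inc", "eax"), ("inc", "eax")]
rewritten = ghost_write(original)
start = {"eax": 40, "ecx": 99}
assert run(original, start) == run(rewritten, start)
```

The rewritten program is longer and looks different, yet leaves the registers in the same state, so its behaviour is unchanged.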

So, what do you think, is this blending in or is this still hiding? It’s still hiding (see left-hand image). And appropriately, Ghost-Writing with fuzzy NOPs, because why not, still gets us nowhere. And this is really funny to me because I have seen a lot of people saying: “This is the solution. This is it right here, you do assembly Ghost-Writing and you will never get caught by antivirus!” That’s obviously not true.

Again, we are hiding the bad, we are trying to hide the obviously heinous thing that we are doing in memory rather than trying to make it look like a legitimate program. So, pretty much all these techniques have been hiding: obfuscation, encoding, doing code generation tricks – they are all things that are the guy standing in the alleyway out of sight. Yes, he doesn’t get seen from the street but as soon as somebody walks past the right way, they are going to be a little weirded out by that guy in a hoodie just standing in an alleyway somewhere.

What we need to do is we need to camouflage ourselves (see right-hand image). We need to look so close to a legitimate executable that even if antivirus does figure out what we are – they can’t flag us because flagging us would mean that they would risk flagging legitimate programs. Because if they start doing that they are broken, nobody will buy their stuff if the legitimate programs start getting killed by antivirus. So we want to get right up next to the tools that everybody uses every day and make ourselves indistinguishable from them. Then we can just look at AV and say: “Come at me bro!”

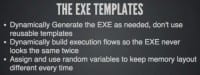

These are the problems we have talked about (see left-hand image). Our templates get flagged, the stager gets flagged, encoders get flagged, and our very behaviour with these techniques is just completely suspicious. No legitimate program would do any of these things.

So, how do we get around the executable template problem now? Well, in Metasploit Pro what we have done, and anyone can do this on their own if they want to take the time for it, is dynamically generate those templates now (see right-hand image). We use Metasm to write EXEs on the fly. We generate code paths that are completely random and dynamic, and we create random variables and use them to do math operations or whatever, so that the memory layout of the executable and its execution path are different every single time we create it. There is no way to create a signature for something like that. You sig one of them, you are not going to catch the next one or the next one and so forth. So, that one is really easy to get around.

The encoders – just don’t use them, that’s all this one breaks down to, don’t use encoders when you are dropping to the file system, it’s not worth it, it gets you nothing (see left-hand image).

The stager (see right-hand image). Like I said, all of our shellcode was originally designed for memory corruption exploits, doing a buffer overflow. The payloads are highly optimized, the fewest bytes possible. Everything uses BlockAPI because we don’t know where our API functions are, right?

Well, if we are generating an executable, we are a developer at that point. We can use the same techniques that developers use to do linking, and we can get those functions. The Metasploit stager payloads, as they exist, are really unnecessary, and even dangerous, for dropping to the file system; we are using them in a way they were never intended to be used. So, what’s the solution for that?

We are not going to use stagers anymore, that is to say, we are not going to use the ones that come as payloads in Metasploit Framework. We are going to implement our own real quick, so why not? We are generating an executable from scratch, we have full developer powers – we can do anything we want.

This idea originally came to me because some of my friends who I now see back there from FALE had this idea of, basically, implementing a stager for the stager, where their code would actually go and get the stager from another source and put it into memory, and then the stager would go back in multiple levels.

I said: “Well, cut out the middleman and just implement in your executable the stager itself.” I wish I could take all the credit for this idea, but then it turns out that Egypt and Raphael Mudge had been talking about this, like, two years ago.

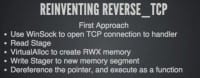

So I decided to go take another swing back through it and implement our Reverse_TCP stager strictly in C code (see left-hand image), which was a couple of days of me wanting to cut my wrist, because if anyone has ever tried to program against the Windows Sockets API, it is the most painful thing ever.

But all we do is we create a typical C program that creates a socket back to whatever address and port we specify, pulls down a big buffer, VirtualAllocs some RWX memory, shoves the payload in it, dereferences the pointer to that memory, and calls it as a function – pretty straightforward actually, doesn’t take too much effort beyond wanting to kill yourself for Windows API programming.

The second approach did the same thing, except it’s using that RunTime Dynamic Linking I talked about earlier, and this becomes a little bit more important here because all of our Windows API function calls are then removed from the import address table and they are just called directly from memory (see right-hand image).

So, what do you think guys: is this hiding or is this blending in? This is blending in, this is what a legitimate program does. It’s opening a socket to something, pulling down data, and putting it in memory. There is nothing particularly suspicious about that, the only suspicious part is where it executes it.

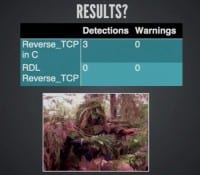

So we are getting really close to that Promised Land. Here is what our results are (see left-hand image). Reverse_TCP without the RunTime Dynamic Linking generated a whopping three detections. I can’t remember what they are but they are all vendors that you are almost never going to see in a pen-test; they are, like, obscure Who-the-hell-are-these-guys on VirusTotal.

Implement RunTime Dynamic Linking, get those things out of the import address table – goes to zero detections, zero warnings. All we had to do was implement that same functionality in C and you can spin that a bunch of different ways; we talked about dynamic executable generation. You apply that same principle then to generating the stager executable, you break up the way that code looks, you do a bunch of other things that are harmless, you kill time so that you get out of a sandbox.

How are they going to catch up to this? The only thing left that is at all possible for them to distinguish from a legitimate executable is the fact that we create RWX memory at runtime and then execute that memory we allocated.

So, this is success at this point. But why stop here? We’ve got a little more fun to have, and this guy right here in the second row came up with this idea (see right-hand image) about a year and a half ago. Mubix says to me: “What if, instead of sticking our payload into RWX memory, we have the executable and have a buffer overflow in it, and then we just have it exploit itself?”

So, Egypt came up with the name for this, he decided that it should be called “Auto-Erotic Exploitation”. I am going to roll with it, I think it’s fitting, it’s unnecessary at this juncture as we saw because we got zero detections, but this is a fun little trick we can do and this, again, goes to blending in, right? We are not creating RWX memory and we are not executing it, we just have a buffer overflow. From an outsider perspective, that doesn’t make you malicious, that just makes you a shitty programmer. There is no end to those; you are going to see that all over the place.

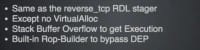

So we did the same thing as the Reverse_TCP RunTime Dynamic Linking stager, but with no VirtualAlloc; we just do a buffer overflow. We copy our entire stage, all eight hundred thousand bytes of it, to the stack, and then we do a simple string copy, a buffer overflow to control EIP. What’s great is you don’t even have to take Peter’s class to be able to do this one, because we are writing the program ourselves (see image above).

All you do is in your buffer you figure out what that offset is, and then you ‘printf’ the actual pointer to your buffer into that section of your string that you are overflowing, and you already know that you have a precise location for where your payload starts, it will execute every single time.

Now, the part I haven’t gotten to yet, because I ran out of time, is getting around DEP with a built-in ROP builder. We build dead code paths: they test a condition that never evaluates to true, so that code never executes, but it emits assembly instructions that are ROP gadgets at various places. So we set our ROP gadgets in locations that we know and control already, and then we have a ROP builder that just goes to those labels, builds our ROP chain for us, and adds it into the overflow. Done! What do you think the results of that are?

That’s right, that’s bad ass! Fortunately, I seem to be right on time here. Special thanks to a lot of people, in no particular order: Egypt, HDM, Mubix, corelanc0d3r; a bunch of the guys I work with, FALE, a lot of people at AHA, DerbyCon for having us here again, it’s been amazing, and everyone else whose name I don’t remember who has talked to me while I was drunk at a conference and given me ideas. There are a lot of you, thank you! And now for something completely different.

Thank you!