This is a study conducted by computer scientists and well-known cryptographers Moti Yung and Adam Young on the two-way relation between cryptography and malicious software. The research was presented by Moti Yung at the 26th Chaos Communication Congress (26C3) in Berlin.

Yes, we can’t! Yes, we can, or yes, we can’t? That was a nice introduction. This is joint work with Adam Young; we started it about 15 years ago.

It’s a little bit prospective and a little bit retrospective. When I was being introduced, the guy said: “I don’t know what that is; I can’t even say these words.” Admittedly, it does sound like nonsense. All right, so what is it?

Cryptovirology is the study of applications of cryptography (and I’m a cryptographer) to malicious software: it investigates how modern cryptographic paradigms and tools can be used to strengthen and improve new malware. We started publishing on it in 1996. Kleptography is, in some way, the other way around: it’s applying malicious software to cryptography. To give you a perspective on when this work started: it’s the mid-90s, the time of Schneier’s big book; cryptography is going to save the world, it’s the big equalizer, and everybody can write cryptography and have secure systems. Its applications are to defend computers against all evil, and so on. The adversaries are the bad guys, and we use the crypto against them.

If everybody thinks this way, you might as well think the other way. This is what we did. How to use cryptography on the attack side of computers? Not that we wanted to attack, but it’s interesting to see the scope of what can be done with technology like cryptography.

So, we started in 1996. And now, shamefully, a little commercial break: in 2004 we wrote a book called “Malicious Cryptography: Exposing Cryptovirology” (see left-hand image). That was about 9-10 years into the investigation, and I’ll say a little more about it later.

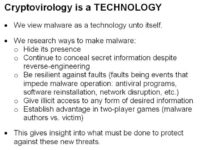

We decided to look at it as a technology: we view malware, software like viruses that people consider bad, simply as a technology, from a neutral point of view. The idea was then to look at malware that tries to hide its presence, conceals secret information despite attempts to reverse-engineer it, and can withstand certain efforts against it, like people trying to trace what it does or where it comes from, and so on.

The idea was that this would give insight into what must be done to protect against these threats, because that is up to security professionals and hackers. And I love hackers, because they always teach me new things. You always have to look for threats, and if you have any sense of responsibility, you also have to look for countermeasures to those threats; that was the spirit of this line of work.

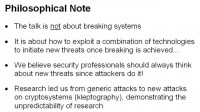

What we did is not about breaking into systems themselves; a virus, you know, needs to penetrate a system in some sense. It’s about exploiting this combination of technologies once the break-in has been achieved.

We started simply by studying the application of cryptography to viruses and other malware, and somehow, during this investigation, we also came to investigate the opposite: how cryptographic Trojans can be inserted into cryptosystems and what attacks they can mount. That’s an interesting demonstration of how research can lead you somewhere you didn’t set out to go.

So, a little bit about the history of malware. I’ll be very brief and omit a lot of the history, giving just a few important lines of development, because, as I said, we treat malware simply as technology; we don’t say it’s bad or good, just what it is.

In fact, we can trace malware all the way back to John von Neumann and his idea of self-reproducing automata, where an automaton produces output, and the output is the description of the same automaton. This was the first design of a replicating program, already in the 1940s, when almost nobody here was alive. These viruses were alive, but we were not.

In the 1950s, especially in the laboratories, it was the hobby of various geeks. Core Wars is an example of viral software that was used for games.

And then, in the 1960s, malware was recognized as a threat to the integrity and availability of classified information: see the documents from the U.S. Department of Defense, and the notions of access control, mandatory access control and so on. If you read the documents from the 60s, you’ll see that they were very much motivated by these perceived threats.

In the 1970s, advanced malware design begins, and so does the realization, due to Simmons, that cryptosystems can carry information they were not intended to carry; and maybe the information carried inside the cryptosystem can be a Trojan.

In the 1980s, viruses started to appear in the wild, together with the PC revolution and home computing. Cohen started investigating viruses from an academic point of view, and a famous event was the Morris worm, which spread across the Internet and took out parts of it. With it, the global threat became real: something that starts as an experiment in one location can spread worldwide.

In the 1990s came major viruses (and when I say virus, I also mean worms and so on) with major impact on commercial systems, and people started measuring in money how much they lose to a virus just because of the disruption.

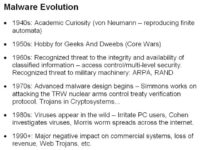

I want to point out one interesting design, actually an implementation, from the 1980s: the survivable Trojan horse of Ken Thompson from 1984, which he described in his Turing Award lecture.

What he designed is, effectively, a survivable password-snatching Trojan horse. A password snatcher is a program that infects the Unix password program, and the design goal was to show how complex systems are getting and how serious the threats are. He designed a Trojan horse that survives recompilation and reinstallation of the password program on the host machine.

He demonstrated that it’s not just the application itself that needs to be protected. I have the description here, but I will skip it and get to the bottom line. The Trojan used the C compiler to increase its survivability: if you recompile the C compiler or the password program from clean source, the Trojan’s clever operation makes it survive and replicate inside the system. So, the bottom line was that one cannot establish the trustworthiness of a program just by analyzing its source code and compiling it; in some sense, the entire machine must be scrutinized. Because he used something that was hiding in the compiler, the source code, the compiler, linker, assembler and operating system all need to be analyzed. When the threat comes from one source, you cannot just look there; it spreads around.
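The self-perpetuating step can be captured in a toy model. Everything below is a hypothetical illustration, not Thompson’s actual code: “sources” are plain strings, “binaries” are Python callables, and the password program simply compares strings. All names (`PASSWORD_SRC`, `trojaned_compile`, the `"backdoor"` password) are invented for the sketch. It shows only the structural point: rebuilding the compiler from its clean, audited source with a Trojaned compiler binary yields the Trojaned compiler again.

```python
from typing import Callable, Optional

# Hypothetical sources; both are "clean" and would pass a source-code audit.
PASSWORD_SRC = "password program: accept only the user's real password"
COMPILER_SRC = "compiler: translate every source faithfully"


def build_password_program(master: Optional[str] = None) -> Callable[[str, str], bool]:
    """Return a 'binary' for the password program; `master` is an extra accepted password."""
    def check(real_password: str, attempt: str) -> bool:
        return attempt == real_password or (master is not None and attempt == master)
    return check


def clean_compile(source: str):
    """Honest compiler binary."""
    if source == PASSWORD_SRC:
        return build_password_program()
    if source == COMPILER_SRC:
        return clean_compile  # compiling the compiler yields an honest compiler
    raise ValueError("unknown source")


def trojaned_compile(source: str):
    """Trojaned compiler binary: misbehaves only on two recognized inputs."""
    if source == PASSWORD_SRC:
        return build_password_program(master="backdoor")  # hypothetical master password
    if source == COMPILER_SRC:
        return trojaned_compile  # the *clean* compiler source yields the Trojan again
    return clean_compile(source)


if __name__ == "__main__":
    compiler = trojaned_compile
    for _ in range(3):  # "recompile and reinstall" the compiler from clean source
        compiler = compiler(COMPILER_SRC)
    check = compiler(PASSWORD_SRC)
    print(check("s3cret", "backdoor"))  # True, although both sources are clean
```

Auditing `PASSWORD_SRC` and `COMPILER_SRC` finds nothing; the misbehavior lives only in the binary that does the compiling, which is why the whole toolchain has to be trusted.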

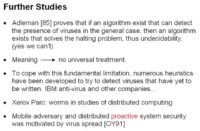

In his PhD thesis in the 1980s, Cohen started to investigate viral countermeasures and ways of bypassing them. He noted that a virus can change itself in the replication process: he produced viruses that had no common sequence of more than 3 bytes between generations, and he called them evolutionary viruses; nowadays we know them as polymorphic viruses.

Also in the 1980s, it was shown that deciding whether a program, or a set of programs, contains a virus is undecidable, meaning there is no universal detection method. At the same time, antivirus heuristics based on scanning and signatures started to be developed.
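The undecidability result rests on a diagonal argument, which a few lines can illustrate. This is a hypothetical toy model, not Cohen’s formal proof: a “program” is reduced to one yes/no behavior (does it replicate?), and a “detector” is any deterministic function that predicts that behavior. For any detector, we build a contrary program that replicates exactly when the detector says it won’t, so every candidate detector is wrong on at least one program. (A detector that tries to answer by simulating the contrary program never terminates, which is the other horn of the argument.)

```python
from typing import Callable


class Program:
    """Toy program whose only observable behaviour is whether it replicates."""

    def __init__(self, behaviour: Callable[["Program"], bool]):
        self._behaviour = behaviour

    def replicates(self) -> bool:
        return self._behaviour(self)


Detector = Callable[[Program], bool]  # True means "flagged as a virus"


def contrary_program(detect: Detector) -> Program:
    """A program that replicates if and only if `detect` says it does not."""
    return Program(lambda self: not detect(self))


def detector_fails(detect: Detector) -> bool:
    """True when `detect` misclassifies the contrary program built against it."""
    p = contrary_program(detect)
    return detect(p) != p.replicates()


if __name__ == "__main__":
    candidates = {
        "flag everything": lambda p: True,
        "flag nothing": lambda p: False,
        "toy heuristic": lambda p: len(repr(p)) % 2 == 0,
    }
    for name, detect in candidates.items():
        print(name, detector_fails(detect))  # prints True for every candidate
```

The detectors here are deliberately trivial; the point is that `detector_fails` returns `True` for *any* deterministic detector plugged in, because the contrary program is defined as the negation of the detector’s verdict.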

Another thing that happened in the 80s: worms were invented at Xerox PARC, among other innovations, as a paradigm for distributed computing.

And, as I said, polymorphic viruses are now known threats, and they employ cryptography to begin with. I’m not getting into details, but there is a decryption header that decrypts the program body (see left-hand image) so that the program can execute; when the virus replicates, the body can be encrypted again under another key. So you can see how cryptography can generate many different views of the same piece of software.

So this application of cryptography to malware already existed when we started. You can make many such changes, so the space of possible variants grows exponentially and is easy to explore, and this was noticed in the early 90s.

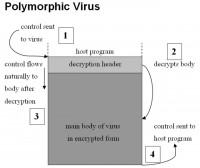

This was the state of the art when we started. The other piece of the picture that we wanted to include in our technology is public-key cryptography (see right-hand image). For the sake of this presentation, I’m not going to dwell much on it.

All you have to know is that there is a public key, denoted Y, with a corresponding secret key X that is kept by the key owner. Everybody can encrypt a message M under Y and get the ciphertext C = E_Y(M), but knowledge of Y does not enable decrypting the ciphertext back to the message. Only the one who knows X, which is called the trapdoor information, can take the ciphertext and the secret key X, apply the transformation D, which is the reverse of the encryption, and get the message back: M = D_X(C).

This idea is from the late 70s, and the first example of it was RSA, whose security is based on the difficulty of factoring big numbers: there is a number N that is the product of two big primes P and Q, and others cannot factor it. You encrypt by raising the message to the power E mod N; without the factorization, nobody can take E-th roots mod N, so only the one who can factor can recover the message. Applications of this are encryption, key exchange, digital signatures, and various protocols suggested over the last 30 years, like playing poker over the phone.
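To make the trapdoor concrete, here is textbook RSA with the classic toy primes 61 and 53. This is a minimal sketch: the parameters are far too small to be secure, there is none of the padding real RSA needs, and the function names are our own. The pair (n, e) plays the role of the public key Y, and d is the secret X; computing d requires (p - 1)(q - 1), that is, the factors of n.

```python
from math import gcd


def generate_toy_keypair(p: int, q: int, e: int = 17):
    """Return public key Y = (n, e) and secret key X = d for primes p, q."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p - 1)(q - 1)")
    # Computing d needs phi, i.e. the factors of n: this is the trapdoor.
    d = pow(e, -1, phi)  # modular inverse (Python 3.8+)
    return (n, e), d


def encrypt(public_key, m: int) -> int:
    """E_Y(M) = M^e mod n; anyone holding the public key can do this."""
    n, e = public_key
    if not 0 <= m < n:
        raise ValueError("message must be an integer in [0, n)")
    return pow(m, e, n)


def decrypt(public_key, d: int, c: int) -> int:
    """D_X(C) = C^d mod n; requires the secret exponent d."""
    n, _ = public_key
    return pow(c, d, n)


if __name__ == "__main__":
    public, secret = generate_toy_keypair(61, 53)
    c = encrypt(public, 65)
    print(public, secret, c, decrypt(public, secret, c))  # (3233, 17) 2753 2790 65
```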

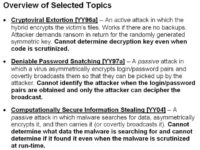

Now we’re going to get to the subject of cryptovirology, and I will review three topics (see image). The first one is cryptoviral extortion, which is an active attack. The second is deniable password snatching, a passive attack in which cryptographic technology, combined with the modern channels available in the infrastructure, gives the attacker deniability, so that he cannot be identified. The third topic, which I’ll just mention, is computationally secure information stealing: even when you have the virus and all the traces of its operation, you still don’t know what the virus was stealing.

So, these are the types of things you can do when you start combining these technologies: public-key cryptography on the one hand, and viruses on the other.

How can such research be justified? Well, actually, I don’t think this conference needs that justification; I should have erased this slide (see left-hand image). Everybody here understands: you have to hack systems, you have to break systems, you have to think about threats, and this helps society if you get the right cooperation; I’ll talk about that later.

An Insight into Cryptoviral Extortion

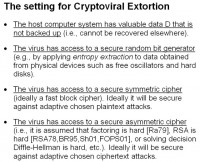

Let’s go directly to the setting of cryptoviral extortion (see image to the right). Assume the following: the host computer has valuable data, which I call D, and due to the usual laziness it’s not backed up, or not fully backed up at the moment. And assume you wrote a virus that has access to a secure random bit generator; it has entropy extraction in it, so it can read the environment and get essentially truly random bits from the physical devices. The virus has code for a secure symmetric cipher like AES, and it has access to code for a secure asymmetric cipher, that is, public-key encryption; let’s say a program for RSA encryption.

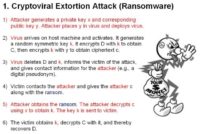

Here is, more or less, the cryptoviral extortion attack (see left-hand image); it is a protocol between the attacker and the virus. Let’s see what’s going on. The attacker generates a private key x and a corresponding public key y, places y in the virus, and keeps x to himself on his own machine, which is not connected to anything: not the Internet, not anything.

Then the virus arrives at the host machine. How it gets there is, as I said, not part of this talk; assume it’s there. What does it do there? It activates, generates a random symmetric key k for AES, encrypts the big file D with this key k to obtain C, the encryption of D under AES, and then encrypts the key k with the public key y to obtain the ciphertext c.

The virus deletes the data D and the key k, and then informs the victim of the attack and gives contact information for the attacker, hopefully not direct contact but some digital information, like an anonymous Swiss bank account. The victim then contacts the attacker and gives the attacker c along with the ransom that the virus demanded in step 4.

Step 5: the attacker obtains the ransom. At this point he could simply run away with the ransom, but if he’s smart and wants to stay in business, he plays nice: he takes c and uses x, the private key that only he holds, to decrypt c and obtain k. The key k is sent to the victim, and in step 6 the victim, having obtained k, can decrypt C and get the data D back. So you see what happens: this is the game that we designed.

So, first of all, the security of this attack (see right-hand image): analyzing the code of the virus reveals the public key y but not the private key x, because x is not there, and knowing the encryption key y is not sufficient for decryption. As long as the symmetric key k is not captured (a correctly written virus erases it shortly after the encryption), there’s no way to recover k, because it then exists only in encrypted form. The encryption can also be performed incrementally to avoid detection; this is virus technology. And there are variations: you don’t necessarily have to extort money, you can ask for data and the like, communication can be done anonymously, and so on. All of this gives security to the attacker.

This shows that public-key cryptography creates truly unequal powers here: the attacker has the decryption key, he’s the only one who has it, and he has to be involved in the recovery.
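The asymmetry described here is the ordinary hybrid (envelope) encryption pattern that tools such as PGP also use: a fresh symmetric key does the bulk encryption, and only that key is encrypted under the public key. The following minimal sketch shows just that pattern, under stated assumptions: textbook RSA with toy primes stands in for the asymmetric cipher, a SHA-256 counter-mode keystream stands in for AES, and the names `seal` and `unseal` are hypothetical. Whoever holds only the public key (N, E) can seal data but cannot unseal it; with these toy sizes, of course, anyone could factor N = 3233, so it is an illustration only.

```python
import hashlib
import secrets

# Toy key pair (insecure sizes). Public key Y = (N, E); secret key X = D.
P, Q = 61, 53
N, E = P * Q, 17
D = pow(E, -1, (P - 1) * (Q - 1))  # computable only with the factors of N


def keystream(k: int, length: int) -> bytes:
    """Toy stream cipher: SHA-256 in counter mode keyed by k (a stand-in for AES)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(f"{k}:{counter}".encode()).digest()
        counter += 1
    return bytes(out[:length])


def xor_bytes(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


def seal(data: bytes, n: int = N, e: int = E) -> tuple[bytes, int]:
    """Encrypt data under a fresh key k, then wrap k with the public key only."""
    k = secrets.randbelow(n - 2) + 2  # fresh toy-sized symmetric key in [2, n)
    bulk = xor_bytes(data, keystream(k, len(data)))
    wrapped_k = pow(k, e, n)  # c = E_Y(k)
    return bulk, wrapped_k  # k itself is neither returned nor stored


def unseal(bulk: bytes, wrapped_k: int, d: int = D, n: int = N) -> bytes:
    """Recovering k, and hence the data, needs the secret exponent d."""
    k = pow(wrapped_k, d, n)
    return xor_bytes(bulk, keystream(k, len(bulk)))
```

For example, `unseal(*seal(b"quarterly report"))` returns the original bytes, while nothing in `seal` or its outputs lets a holder of only (N, E) recover k at realistic key sizes.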

Before public key was involved, such an imbalance of power between the attacker and the attacked host did not exist, and public key is the right tool for creating it. Previously, a virus could disrupt, delete things and so on, but it could never move the power to release the information to an attacker who is remote and not even present while the virus is operating. OK, that’s the first idea.

The Classic and Deniable Password Snatching Attack

The second idea that I will cover is our deniable password snatching. We did it in 1997, so the examples use the technology of that time, but later technology only worked in favor of this attack. Password snatching was conceived in the multi-user machine environment, and the purpose was to steal logins and passwords.

The classical approach is that the attacker installs a Trojan, like a keylogger, that collects login and password pairs and stores them in a hidden file, and the attacker later retrieves the file. The drawback of this classical attack is that the attacker is at risk both when installing the Trojan and when retrieving the login and password information.

The idea of deniable password snatching was to use the power of cryptography and more or less public channels to make the attack deniable, so that none of the attacker’s public actions identifies him as the attacker (see left-hand image): anybody else could have performed the same actions.

So, the attacker first writes the virus to spread the Trojan, hopefully remotely; then the attacker releases the virus. And the idea in step 2 is to release the virus in a kind of anonymous spread. So, the example that we did when we thought about it is to store it on some floppy disk and leave it in a public Internet café. It was the technology of that time, but you can think of better anonymous channels nowadays.

Then, in step 3, the virus infects the machine and installs the Trojan. The Trojan carries public-key (asymmetric) encryption code with the attacker’s public key, and when it collects a login and password, it encrypts them with this public-key cryptosystem.

The next step is to broadcast this information back to the Internet; when we worked on it, the idea was to copy it unconditionally to anybody who copies information from this machine. At that time, somebody would put a floppy in the machine and copy some information, and the Trojan would put the hidden file on the floppy as well. With modern technology, you can think of the Trojan posting the file on some bulletin board or something similar.

The idea is that the collected information is sent back to the public, and the public includes the attacker: many people can download this information, but they don’t have the decryption key; only the attacker does. So the attacker, when he gets the information, can decrypt it and get the login and password information, while for everybody else, because public-key cryptography was used, this encrypted information is useless.

So, even if the attacker is caught holding the public file, that proves nothing: many people got a copy of this file through the broadcast and downloaded it, but they can’t do anything with it. Nothing in the public record tells you who the attacker is. As long as the distribution of the virus and the broadcast of the information back were successful, only the attacker can take the information that came back, put it on his own machine that is not connected to anything, and recover the login information. This was the way to use public-key cryptography, plus these distribution and collection channels, to hide the attacker.

And only the attacker gets it: the attack has confidentiality, because he’s the only one who can decrypt the information; everybody else who gets the encrypted file cannot decrypt it. And as I said before, these broadcast channels have only improved over time: botnets, mixnets and so on.

Computationally Secure Information Stealing

The third threat that I just want to mention is computationally secure information stealing. The idea is a virus that searches for specific information and steals it: it puts it in some storage or in itself, and then moves it back to the attacker. Even if you get the code of the virus and a full trace of everything the virus did, you don’t know what it was looking for. Let’s say it looks for the salary of a certain person in an organization, puts it into the virus itself, starts copying back, and it gets back to the attacker.

But nobody, just by looking at the virus or at the trace, is able to see what was done. Again, with the power of cryptography you can process the entire salary file, encrypting everything, yet effectively extract only the right record, without anybody knowing which one. Even with a full trace of what was done, this is possible with cryptographic techniques.

So, there is a way of stealing information without anybody in the world but the guy who wrote the virus, the thief, knowing what was stolen. I will not get into details here, it’s a little bit more complicated; but it’s possible.
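The talk leaves the construction out, so what follows is only an illustration of one standard way to obtain this property, not necessarily the authors’ construction: private information retrieval with an additively homomorphic cryptosystem, here a toy Paillier instance with tiny, insecure primes. A processor that holds only ciphertexts E(b_1), ..., E(b_n), with exactly one b_i = 1, touches every record, yet ends up with an encryption of the selected record alone, and the selector does not reveal which index is set. The function names and the salary data are hypothetical.

```python
import math
import secrets

# Toy Paillier parameters (insecure; real keys use primes of 1000+ bits).
P, Q = 1117, 1123
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # valid because the generator is g = N + 1


def encrypt(m: int) -> int:
    """Paillier encryption E(m) = (N + 1)^m * r^N mod N^2 with random r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return pow(N + 1, m, N2) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    """m = L(c^lambda mod N^2) * mu mod N, where L(u) = (u - 1) // N."""
    u = pow(c, LAM, N2)
    return (u - 1) // N * MU % N


def oblivious_select(records: list[int], encrypted_selector: list[int]) -> int:
    """Return E(sum of b_i * s_i): multiplying ciphertexts adds plaintexts."""
    acc = 1  # the ciphertext 1 decrypts to 0
    for value, enc_bit in zip(records, encrypted_selector):
        acc = acc * pow(enc_bit, value, N2) % N2  # E(b)^s = E(b * s)
    return acc


if __name__ == "__main__":
    salaries = [52000, 61000, 87000, 45000]  # hypothetical records
    target = 2
    selector = [encrypt(1 if i == target else 0) for i in range(len(salaries))]
    print(decrypt(oblivious_select(salaries, selector)))  # 87000
```

Every record is processed identically, and each E(b_i) looks like a random number modulo N^2, so a trace of `oblivious_select` does not show which entry was picked; only the holder of `LAM` and `MU` can read the result.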

Scientific Retrospection

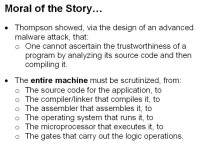

I will not cover more attacks of this nature, but I will give a little retrospection on what this development shows us. Thompson, as I said, showed that you cannot clean the system by considering only the application layer, or only the virus and the infected password program itself: you have to scrutinize every component of the system.

In open systems, the entire system, with the Internet and with viruses, can be beyond our control; it’s not necessarily the one machine that you control. And then cryptovirology, this combination of public-key technology and viruses in open systems, showed that sometimes, after the attack, the only way to recover is through the attacker himself, who is outside the system.

Take the examples: recovery where you need the attacker, or the fact that you don’t know what information was stolen and only the attacker can tell you. We can’t do it on our own, no matter how much computing power we put into it, unless we break the crypto. Theoretically, of course, you can break it, but because of the power of the crypto, you cannot scrutinize the system by yourself; you need the attacker in order to scrutinize it. That’s the step these types of attacks add to the retrospective.

Reactions to “Malicious Cryptography – Exposing Cryptovirology” Book

We got some reaction to what we described in our book. It’s good to see the reaction. I told you before that the book came out in 2004, so here is a reaction from a famous virus book writer himself who decided to write a critique of the book (see image). Since I wrote a virus book, I will never write critiques of other people who write virus books, because for me it’s a conflict of interest. Ok, but let’s see what this fellow wrote.

He wrote: “Is the volume supposed to be a serious warning against new forms of malware? …” So, he’s doubting the goal of this book. And then he says: “In addition, much of the material concentrates on building more malign malware, rather than dealing with defense against it.” So, he noticed that we really wanted to have fun – good. And then, instead of criticizing us, he’s criticizing the community of virus writers. He says: “I’m not too worried about vxers (virus writers) getting ideas from Young and Yung: implementing crypto properly is a painstaking task, and from almost twenty years experience of studying blackhat products and authors, I’m fairly sure there’d be lots of bugs in what might be released.” Ok, so he doesn’t criticize us, he criticizes the virus writers, hackers, because they don’t know crypto, whatever; ok, good, fair enough.

Some director at some funding agency told us: “We do not consider these attacks as high priority or immediate.” And then we had reactions to the reactions (see left-hand image). I pointed out to some people that what we do is a very cheap way to mount denial of service using the power of asymmetric, public-key cryptography. Adam took the criticism a little more seriously and said: “Wow, who needs to know cryptography?” So he implemented a version of the active attack, the ransomware virus, employing CAPI, Microsoft’s cryptographic API, to demonstrate that you don’t really need to know cryptography; you just call the API: you have to know the verb Encrypt, you have to know the word Random, good.

I told him not to worry about such things or about criticism: criticism is healthy and challenging, and that’s fine. And I told him that the only thing that really worries me is that we suggested obvious countermeasures, extensive backup and recognizing cryptographic operations where they don’t belong, and the antivirus forces, those that claim to do antivirus, didn’t pick up on them. I didn’t see them getting ready for what we had pointed out. So, as a result, we had some other ideas in this direction that we didn’t even bother to publish.

And then, around 2007, strong ransomware employing public-key cryptography started to appear. So, virus spreading started using botnets, which is very similar to the password snatching channel where you don’t know who the attacker is, and ransomware that asks you for money. So, the conclusion is: the bad guys’ business is to try to make money, and they ignore recommendations from heads of funding agencies and book writers that write criticism of other books. Simply, they want to make money. And, of course, there are various discussions about whether these attacks are severe, important, what they are, how serious they are, whether there are alternative attacks. I’m not getting into this; I’m just pointing out what we did and what was done, and you’ll be the judge.

Dissecting the Kleptography Side of the Coin

So, this is the current state of affairs regarding these cryptovirological attacks. We then more or less abandoned writing about cryptoviruses and concentrated, and are still concentrating, on something that we call kleptography. It’s a play on words: a combination of cryptography and klepto, meaning theft.

We noticed that in those malware designs we always have a kind of crypto Trojan horse core that does the cryptographic work, plus a spreading mechanism, a virus replicating through the system to get access. At some point in the investigation (we don’t remember when, how, or who), we raised the question: what if, instead of putting the crypto Trojan that does the crypto work inside a virus, we put it inside the cryptosystem itself?

Simmons observed already in the 1970s that certain ciphertext and public-key values have redundant information in them, so they can carry information that was not intended. So we said: “Aha, maybe.” What we investigated is this: if a cryptosystem is in tamper-proof hardware, which people at the time thought was the most trusted way to implement a cryptosystem, then no one knows what algorithm runs there.

So the manufacturer of such highly trusted tamper-proof hardware, instead of putting in the benign RSA cryptosystem, can put in a Trojan cryptosystem. And I’m very worried nowadays when people talk about trusted computing modules, some of which have certain tamper-proof properties. I’m also worried about open source code: even though it’s open source, nobody reads it. With open source, when it’s cryptography, read it! It should be open window source, not just open source that everybody ignores.

The fundamental idea is that there are ways to implement cryptography in a way that looks to everybody like it is doing the right thing, but not necessarily so.

So, as a first step, we designed a crypto Trojan that runs RSA key generation: it randomly generates composite numbers N that really are the product of two strong primes, N = p*q (see image). It uses real randomness; it’s not monkeying with a weak generator or things like that, it’s really strong. N looks random to everybody, and it is secure, so there is a first proof of security: this key is as good as any other RSA key you generate. I’m talking here just about generating the composite.

But the Trojan’s creator has a trapdoor: looking only at N itself, namely at the bits of N, he has an algorithm that reads the factorization of N out of N. Any other reverse engineer cannot factor it (yes, we can’t, right?). The attack is exclusive, and here you need a second security proof: security of the attacker, that is, exclusivity of the attacker. So, the bottom line is that you have to trust the producer of the tamper-proof hardware, or the producer of the software if you don’t look at the software, not just rely on the fact that the device looks good. Keep the bottom line in mind. I’m not going to cover the details, which would require another talk, but keep the moral of the story.

I will now move to the conclusion. I showed you several malware attacks, some using general malware and some using Trojans; Trojans inside cryptosystems I only mentioned. In each attack, the malware used the attacker’s public key to make the attack robust in some sense: to keep the attacker anonymous, to hide what the malware is actually doing (that is, what it is stealing), to give the attacker the exclusive ability to decrypt data, and so on. So it uses the power of public-key cryptography, a technology invented in the late 1970s, in a very specific way.

Some of the attacks are active in nature, like extortion or leaking the factorization of the RSA number; and some are passive, like hiding channels or information stealing so that you don’t know which information was stolen. And probably there are other attacks of this nature that exploit this imbalance of power between the guy that creates a public key and knows the trapdoor, and the rest of the world.

Technology can be used and abused; technology is neutral, it’s what we make of it. CryptoAPI was not created by Microsoft for my collaborator Adam to write a virus, but you can do it. Public-key cryptography was created for encryption and signatures, not for these attacks, but you can use it for attacks. Ransomware has been used; I think deniability has also been used in some way.

And for those of you who work on detecting such attacks: the availability and use of technology should be well controlled and managed, not in the sense of government control, but as operational control on a system, access control: who does what. If you run and manage a system, you have to know which parts of the system are allowed to do what.

There’s something about threats: always look for new possibilities of attacks. It’s very easy to come after the attack and say: “Ah, there was an attack.” The idea is to predict attacks 10 years before they occur and respond to them before they occur; not just be reactive. 0-day attacks – be ready for them if you can, if somebody told you. In everything around us: system, Internet and so on – attackers are getting more sophisticated, we need to be proactive.

I titled this talk “Yes We Can’t!” Why “Yes We Can’t”? Because crypto has positive applications, and then cryptovirology showed negative ones: we can do great things with crypto, but it can also prevent us from foiling certain attacks. But then we can take cryptovirology, malware and crypto as technologies, and employ them in a positive way. For example, you can turn cryptovirology into producing distributed agents that are robust and survivable, or whose function nobody knows, so you can do distributed computing where somebody does the work for you without knowing what you are doing. And you can turn kleptography into key recovery methods that need no additional work beyond generating the key. So the negative applications have positive applications, if you wish. It’s a strange world, you know.

Further conclusion: assurance of systems’ components is a tricky business: kleptographic cryptosystems show this. There are implications for testing: impossibility of black box testing and complexity of trust relationships in a system. And when suggesting new trusted hardware or new software you want to trust, be careful, as I already said before, but it won’t hurt if I say it again. And we need to be ahead of the attackers if possible.

Talks usually end with conclusions, but I will end with an inconclusion. What is trust? Again, Ken Thompson already in 1984 showed us a problem with trust; the title of his paper is “Reflections on Trusting Trust”. Cryptovirology: some breaches of trust; recovery is at the mercy of the attacker. Yes, we can do it. Yes, we cannot do it from inside the system. Cryptography and trust relationships go beyond the boundaries of the system: you have to trust offline elements, the designers, the manufacturer, and so on. Yes, we cannot just trust a black-box component. Trust is a very tricky notion, and if somebody claims they understand it, they probably don’t. Thank you very much!