For years, cybersecurity has been framed as a defensive discipline: build the walls higher, patch faster, detect sooner, and respond quicker. To be fair, that mindset made sense when attacks were largely manual, predictable, and bounded by human limitations. But as AI reshapes both sides of the cyber battlefield, a purely defensive posture is no longer enough.

In our recent podcast interview with Tim Chang, Global VP & GM of Application Security at Thales, one theme came through clearly: organizations need to rethink how they use AI in cybersecurity. The question is no longer just how to defend against AI-enabled attacks, but how to proactively use AI to anticipate, disrupt, and outmanoeuvre adversaries. That requires a fundamental shift in mindset, from reactive defence to active offence.

Threat actors have already made that shift

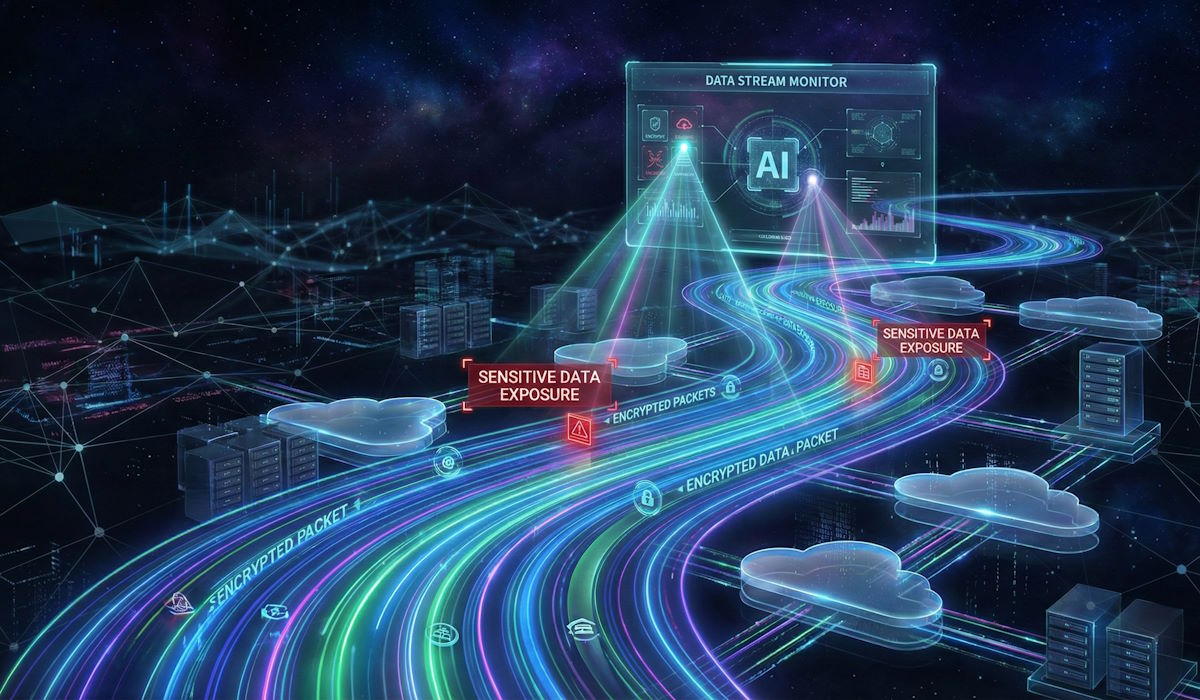

As Tim said, today’s threat landscape is increasingly shaped by AI-powered predator bots that never sleep, constantly probing applications, APIs, and cloud environments for weaknesses. These systems don’t get tired, don’t lose focus, and don’t keep business hours. Moreover, they adapt in real time, learning from failed attempts and immediately trying new approaches. At the same time, organizations are experiencing an explosion of API traffic as modern applications become more distributed, more interconnected, and more dependent on machine-to-machine communication. APIs are now one of the most attractive attack surfaces in the enterprise, and they are being targeted at scale.

Defending against this kind of threat using traditional, rule-based, perimeter-focused security models is like trying to stop a swarm of wasps with a checklist. Admirable in effort, perhaps, but ineffective in practice.

Recognising opportunities

AI changes this equation, but only if it is deployed differently. Many organizations still use it defensively: to improve alert triage, reduce false positives, or speed up incident response. Those are valuable gains, but they are incremental. What Tim points to is a more profound opportunity: using AI offensively, in the sense of proactively hunting risk, simulating attacker behaviour, and continuously testing systems the way real adversaries would.

Offensive AI in cybersecurity doesn’t mean “hacking back.” It means adopting an attacker’s mindset and automating it at scale. It means using AI to continuously analyse how applications behave under stress, how APIs are being abused, how identities and permissions drift over time, and how data is exposed in ways no one intended. Instead of waiting until the fire alarm sounds, security teams can use AI to surface weak signals early, before they become incidents.
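To make the idea of permission drift concrete, here is a minimal Python sketch. It assumes permissions can be exported as a simple mapping from identity to a set of permission strings; the identity names, the permission strings, and the `permission_drift` helper are all hypothetical and purely illustrative. It is a plain set comparison rather than an AI model, but it shows the kind of weak signal that continuous analysis is meant to surface early.

```python
from typing import Dict, Set


def permission_drift(
    baseline: Dict[str, Set[str]],
    current: Dict[str, Set[str]],
) -> Dict[str, Set[str]]:
    """Return, per identity, the permissions held now that were absent from the baseline."""
    drift: Dict[str, Set[str]] = {}
    for identity, perms in current.items():
        # Identities missing from the baseline are treated as having had no permissions.
        added = perms - baseline.get(identity, set())
        if added:
            drift[identity] = added
    return drift


# Hypothetical snapshots, invented for this example.
baseline = {
    "svc-billing": {"invoices:read"},
    "svc-report": {"reports:read"},
}
current = {
    "svc-billing": {"invoices:read", "invoices:delete"},
    "svc-report": {"reports:read"},
    "svc-temp": {"users:read"},
}

drift = permission_drift(baseline, current)
for identity, added in sorted(drift.items()):
    print(identity, "gained", sorted(added))
```

Run on a schedule against real exports, a comparison like this would flag both the quietly expanded service account and the new identity that nobody reviewed.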

Redefining successful security

This shift also forces a rethink of how security teams define success. For too long, security has been measured by compliance milestones, audit outcomes, and checkbox-driven controls. Those metrics say very little about real-world resilience. As Tim put it during our conversation, “The organizations that succeed next year will be the ones that treat security as an engineering discipline, not a compliance checkbox.”

That distinction matters. Engineering disciplines are iterative, experimental, and measurable. Engineers assume systems will fail and design accordingly. They test constantly, automate relentlessly, and learn from every outcome. When security adopts that mindset, AI becomes a force multiplier rather than a bolt-on tool.

The impact on modern AppSec

Nowhere is this more evident than in application security. Modern applications are dynamic, cloud-native, and deeply interconnected with third-party services. Code changes occur daily. Dependencies update automatically. APIs are published, deprecated, and repurposed at speed. In this environment, static assessments and annual reviews are obsolete the moment they’re completed. AI-driven approaches can continuously map application behaviour, identify anomalous patterns, and surface risks that would be invisible to human analysts alone.
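As one illustration of what identifying anomalous patterns can look like, the sketch below flags API clients whose request volume sits far above the norm, using a modified z-score based on the median absolute deviation. This is a simple statistical stand-in, not a trained model and not any particular vendor's method; the client names, the counts, and the `noisy_clients` helper are assumptions made up for the example, and 3.5 is only a commonly used cut-off for this score.

```python
from statistics import median
from typing import Dict, List


def noisy_clients(requests_per_client: Dict[str, int], threshold: float = 3.5) -> List[str]:
    """Flag clients whose request count is an upper outlier by modified z-score."""
    values = list(requests_per_client.values())
    med = median(values)
    # Median absolute deviation: a spread estimate that a single huge value cannot distort.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # No measurable spread, so nothing can be scored.
    return [
        client
        for client, count in requests_per_client.items()
        if 0.6745 * (count - med) / mad > threshold
    ]


# Hypothetical hourly request counts per API client.
traffic = {
    "client-a": 120,
    "client-b": 135,
    "client-c": 110,
    "client-d": 128,
    "client-e": 4200,
}

flagged = noisy_clients(traffic)
print(flagged)
```

A median-based score is used here because a mean and standard deviation would be dragged upward by the very outlier the check is trying to catch.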

The same applies to data security. As data moves across clouds, SaaS platforms, and AI pipelines, understanding where sensitive information lives and how it’s accessed becomes a moving target. AI can help organizations discover, classify, and monitor data exposure in real time, turning what was once an after-the-fact audit exercise into an ongoing, proactive capability.
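A toy version of the discover-and-classify step might look like the following. It assumes sensitive data can be recognised with two regular expressions plus a Luhn checksum for card numbers, which is far cruder than what a production classifier would do; the `classify` helper, the label names, and the sample strings are hypothetical.

```python
import re
from typing import Set

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_ok(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # Double every second digit, counting from the right.
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> Set[str]:
    """Return the set of sensitive-data labels detected in the text."""
    labels: Set[str] = set()
    if EMAIL.search(text):
        labels.add("email")
    for match in CARD.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_ok(digits):
            labels.add("payment_card")
    return labels


# Illustrative records; 4111 1111 1111 1111 is a widely used test card number.
email_labels = classify("contact alice@example.com for access")
card_labels = classify("card 4111 1111 1111 1111 on file")
clean_labels = classify("nothing sensitive here")
print(email_labels, card_labels, clean_labels)
```

The checksum step matters because a bare digit pattern would label order numbers and timestamps as card data, which is exactly the kind of noise that makes monitoring hard to trust.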

Redefining culture

Of course, adopting an offensive AI mindset isn’t just a technology challenge. It’s a cultural one. It requires security leaders to be comfortable with experimentation, with imperfect answers, and with shifting from rigid controls to adaptive systems. It requires closer collaboration between security, engineering, and data teams. And it requires acknowledging that attackers are already using AI creatively and aggressively, whether defenders are ready or not.

The good news is that the same forces empowering attackers can empower defenders. AI can scale expertise, reduce noise, and allow human teams to focus on strategic decisions rather than endless triage. That only happens, though, if organizations are willing to move beyond the idea that security is about keeping things out, and instead embrace the idea that security is about understanding systems deeply and continuously challenging the assumptions built into them.

As we move through 2026, the most resilient organizations will be those that stop thinking of AI as a defensive add-on and start treating it as an active participant in their security strategy. The future of cybersecurity belongs to those willing to think like attackers and build like engineers.

Listen to the full episode, “The Predictions Episode with Tim Chang,” from the Thales Security Sessions Podcast on your preferred podcast platform.