Head of Recurity Labs Felix ‘FX’ Lindner presents in-depth research at Black Hat Europe 2012, dedicated to the differences in technical implementation and security architecture of the world’s leading client platforms used on Apple iPad and Google Chromebook devices.

Hi, my name is FX, or Felix – whatever you like more. This presentation was developed together with two guys – Bruhns and Greg.

In general, what we are looking at is, you know, if you look around you, every cool person sits there with an iPad, possibly connecting to the company network. So far I haven’t seen this thing called Chromebook here, but they might exist. So the point is that these are new client platforms, and they differ massively from what we used to have – we used to have PCs, personal computers that we had full control over. But nowadays the trend is more towards devices that you buy from your ‘religion’ – favorite vendor; and you use those devices, and you trust them inherently. On the one hand, it’s a good thing because you stop worrying about installing software and stuff. On the other hand, we are computer security persons, so we ask what the security of it is. So we wanted to have a comparison between two massively different devices that differ in their background, their motivation, the general idea, as well as the technical implementations.

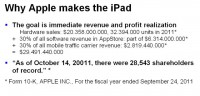

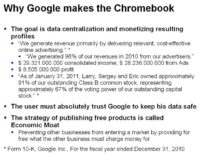

So what we looked at is the iPad (1st generation) and Google Chromebook. What’s often overlooked, especially with hackers, is that people, namely companies, don’t do what they do for religious reasons or because they are such good people, but because they make money. So when you look at the security architecture of a device, it always pays off to understand how this company makes money with their device. So in the case of the iPad, I’m sure most of you have read that last year Apple actually made more money and sold more iPads than HP sold PCs, worldwide. So there’s more people buying this thing than people buying an actual proper PC that they have control over.

Apple iPad (1st generation)

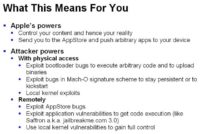

Apple’s business model is centered around selling individual devices, like everyone is supposed to have their own device. And then, 30% of the AppStore sales go to Apple no matter what; and 30% of all the money you give to your mobile phone provider goes to Apple as well. So they have very, very high interest in selling as many devices as they can to make the over 28,000 shareholders of Apple more happy. It works quite well: as you can see in the numbers, the iPad 1 made them about $29 Billion, so it’s worth doing it certainly.

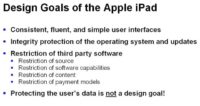

And from this business model comes the actual design goal of the security system. What they want is a consistent, fluent user interface which you all love. They want integrity protection, but they want the integrity protection of their operating system and of the applications, and not of your data for example. They want to restrict where software comes from, what software does, the capabilities, what’s in the content; they want to control all that because they want their 30% share. Protecting the user data is actually not on the agenda. That is very important to understand because that doesn’t make them any money. And, you know, if it doesn’t make you any money, then you are not investing any money in it either.

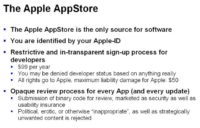

Where the software comes from – everyone knows the AppStore, you have an Apple ID. Apple goes further: if you try to sign up to the AppStore, you get a heap of contracts. If you are into contract reading, I highly recommend, just for the sheer fun factor of it, to sign up as a developer in the AppStore, because this contract is the most ridiculous you will ever see – all rights go to Apple, and if anything goes wrong Apple’s damages are limited to $50. The sign-up fee is $99, so you already paid them for that. And when you submit software to the AppStore, it goes through this review process that supposedly is about security and usability – in fact, it’s completely damn random. I mean, you are submitting binary code, and as Chris Wysopal from Veracode outlined, automatically checking binary code for any properties is so hard that it is essentially impossible. So essentially, it’s really random, and you can’t actually submit code that, for example, does something better than what Apple itself ships on the iPhone; so if you want to submit a new email client (because the one on the iPhone sucks), it’s not accepted because Apple doesn’t want that.

Google Chromebook

On the other hand, look at Google. Why does Google now come out and make the Chromebook which pretty much is in competition to the iPad? Google makes 96% of their income by selling ads and ad profiles. So essentially, the whole company centers around selling your profile to someone who wants to display ads to you. So they have a completely different motivation of coming out with a client platform. Also, what’s fairly important with Google is, in contrast to Apple where you have 28,000 different shareholders, at Google 67% of the total share is controlled by three people. So they actually control the whole company, and they can do whatever they want basically. Since they want to sell your online behavior, the information, the content that you produce – it’s of paramount importance to them that they actually secure the client platform. Because only if they secure the client platform, you as the user have enough inherent trust in the client platform to put all your important data in there.

And this is how they designed the Chromebook. So the Chromebook is actually an operating system that is only there to run a single application, which is the web browser. They don’t want you to store any data locally; they want you to store the data in their Cloud obviously. It prevents any type of third-party software from running, and, you know, it’s designed to be fast and to be good, and also to appeal to the hearts of nerds. Google figured out very, very early that if you want to win over general population, you have to win over the nerds first because they are the technology lead. If the nerds say: “This is cool technology”, many people are going to buy it.

So for example, take the Google DNS service. When Google came out with their DNS service, none of the nerds said: “Well, why would I use Google DNS where Google knows every single NSlookup that I do?”. Everyone said: “Look at the IP addresses, it’s 8.8.8.8, it’s so cool!” And that works. The same with the Chromebook: all the software on there, with very few exceptions, is open source, it’s very well written software, it’s really well designed software, so everyone goes like: “Look at this cool software!”, forgetting about the fact that they are just putting all the data into Google now.

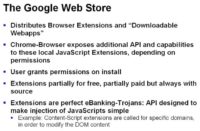

Google of course has the Chrome Web Store, everyone has an AppStore or Web Store nowadays. But because their motivation is so different from Apple’s, the whole process with the Web Store is really simple. You have a 5-dollar payment once so they verify that you actually have a credit card – and that’s about it. You submit your content and it’s directly available, the catch being that you can only submit stuff that’s essentially HTML and JavaScript. The Chrome browser would support Netscape plugin API (NPAPI) binary plugins, but for the Chromebook you can’t actually have them. So yeah, it’s essentially just HTML/JavaScript, which, from the security point of view, is a lot easier to handle than binary code.

Let’s talk about Apple in more detail. So iPad security architecture – nothing new, I guess. It’s a standard XNU (Mach+BSD) kernel. There’s only one user – the user ‘mobile’, that runs all the applications. The root user has a fixed password, we all know that. There’s an additional kernel extension called ‘Seatbelt’ that essentially hardens the operating system a bit more. You have binary code signatures in the actual binaries, so they can make sure that they know the binary they’re executing. You have the keychain – central storage for user credentials; and ASLR (Address Space Layout Randomization) and DEP (Data Execution Prevention), whatnot.

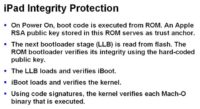

The integrity protection – and this is similar for both devices, and something you will see on pretty much any handheld client platform – is that they now have trusted boot. What trusted boot does is: the first stage verifies the second before starting it, the second verifies the third before starting it, and so on. The iPad and the iPhone do the same thing, so they have the ROM, and they have the next bootloader (LLB), and the next bootloader loads the iBoot, and the iBoot loads the kernel, and the kernel loads everything else.

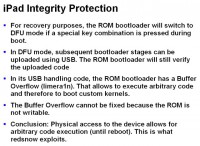
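The chain idea can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the `Stage` type and the helper names are hypothetical, and each stage simply records a SHA-256 digest of its successor, whereas the devices discussed here verify signatures rather than bare hashes.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class Stage:
    name: str
    image: bytes                        # code of this stage
    next_digest: Optional[str] = None   # expected SHA-256 of the next stage


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_chain(images: list[tuple[str, bytes]]) -> list[Stage]:
    """Link stages so each one records the digest of its successor."""
    stages = [Stage(name, image) for name, image in images]
    for current, following in zip(stages, stages[1:]):
        current.next_digest = sha256_hex(following.image)
    return stages


def boot(stages: list[Stage]) -> list[str]:
    """Walk the chain; refuse to start a stage whose digest does not match."""
    started = [stages[0].name]  # the first stage (ROM) is trusted implicitly
    for current, following in zip(stages, stages[1:]):
        if sha256_hex(following.image) != current.next_digest:
            raise RuntimeError(f"{current.name} refuses to start {following.name}")
        started.append(following.name)
    return started
```

Note that the first stage is never checked by anything, which is why a flaw in it undermines every later check.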

What’s kind of bad is if you actually have vulnerabilities in the bootloader, the first stage, then this whole trust chain is kind of broken. So for recovery purposes the bootloader would for example use something that’s called DFU – the Device Firmware Upgrade, I guess, or the ‘Device Fuck U Mode’, which you can turn on and then load the second stage over USB. It really-really sucks if you have a Buffer Overflow in that process, because then everyone can load whatever they want over USB, which of course everyone does for the jailbreaks. That essentially means, if you are losing sight of your iPad even for a very short amount of time, someone can jailbreak your iPad and do whatever they want with it.

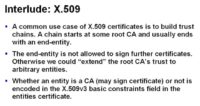

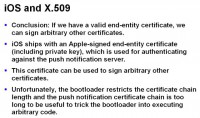

And then there’s X.509 that has never in its entire lifetime fixed a security problem, but it produced a lot. So the way Apple actually does the verification on boot is they have the Apple Root CA, then they have some intermediate CA that is signed by the Root CA, and they have yet another, and then there’s an End-Entity. So the End-Entity for example is the signature on your second-stage bootloader. So we looked at the bootloader and looked at the code. And it turned out that Apple didn’t even feel like implementing the crypto themselves. They actually took an open-source crypto library and just baked it into the code.

The issue with doing that is that you may not understand the code you are baking in. So a short explanation for those of you who don’t know the details about X.509: the idea is that everyone can sign other stuff, but at some point you don’t want to hand out a certificate that can sign further certificates, like when you send a certificate request to VeriSign, saying: “I want this key to be signed for my web server, for SSL”. VeriSign obviously doesn’t want you to be a CA afterwards, so what they give you back is not something you are supposed to use to sign other people’s certificates. This is called ‘basic constraints’: there is a flag in the signed certificate that says either “This certificate can be used to sign other stuff” or “It cannot”.

So Apple actually managed to not see that point. iOS ships with a signed certificate, and they also ship the private key for it; it’s on your device. Everyone has an Apple-signed certificate and the private key to that. This is used for the push messaging, which needs to authenticate, so you have the private key and the certificate on the device. So we looked at it and we found out that the bootloader doesn’t verify the basic constraints. So we could use that certificate that is on the device to sign firmware that’s being booted. However, unfortunately for us, the bootloader is coded so crappily that the certificate chain cannot be longer than three steps, because they didn’t code the check as a loop, but as a fixed “Check this”, “Check that”. So unfortunately, three is too short for the certificate that you have on the device.
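To illustrate what the missing check looks like, here is a minimal Python sketch of chain validation with and without the basic constraints test. Everything in it is an assumption made for illustration: the `Cert` type, the certificate names, and the reduction of signature verification to matching issuer names. It is not the bootloader's code and not a real X.509 validator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    is_ca: bool  # stands in for the basicConstraints CA flag


def chain_is_valid(chain: list[Cert], trusted_root: str,
                   check_constraints: bool = True) -> bool:
    """chain[0] is the end-entity; chain[-1] must be issued by the trusted root.

    Signature checking is reduced to matching issuer names; a real
    validator would verify a cryptographic signature at each step.
    """
    if not chain or chain[-1].issuer != trusted_root:
        return False
    # A loop handles chains of any length, unlike a fixed series of checks.
    for cert, issuer_cert in zip(chain, chain[1:]):
        if cert.issuer != issuer_cert.subject:
            return False
        if check_constraints and not issuer_cert.is_ca:
            return False  # a non-CA certificate was used to sign something
    return True
```

With `check_constraints=False`, a chain that runs through a non-CA certificate is accepted, which is the class of mistake described above.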

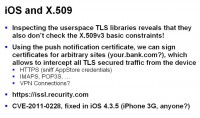

However, we then turned around and said: “Well, how about userland?” Okay, did they make the same mistake in userland? It turns out – yes, of course they did. So essentially, this private key that you have on your device you can use to sign arbitrary sites and other X.509 content, including HTTPS certificates or email server certificates, or VPN stuff, or whatever. So SSL was inherently broken on iOS until version 4.3.5.

We told Apple, and we’re like: “Oh my God, now they have to backport this to all the devices they ever made, because there are people who do mission critical shit on iPhone 3G”. Well, see this: Windows XP is over 10 years old now, you’re still getting security updates. Apple fucks up SSL completely for all apps, for all browsers, everything on the iPhone and the iPad, and they are just shipping fixes for the latest version – if you don’t like your SSL to be broken, go buy a fucking new iPhone! That is so ridiculous, but that’s how they do it. So if you still have an older device, you might want to check out the https://issl.recurity.com website which will give you a nice little lock and tell you that everything is fine although we have an Apple-signed certificate for the domain ‘*’ that we’re running on this web server.

Speaking about doing crypto and doing it wrong, Apple also has a signature scheme on the binaries, and this is all well known. Essentially, they sign the binary with what they call the ‘Mach-O’ signature. It’s a really smart idea to go ahead and build a signature mechanism that actually signs parts of the binary individually, like the code section and the data section, and the data read-only section, because they believe there will never be any metadata that is important for execution. Of course there is, there’s a bunch of exploits that are used mainly in jailbreaks. Essentially, what it all comes down to is you always take metadata; the easiest one, the first one was, you know, what they don’t sign – the entry point. So the header in a file says: “This is the entry point”, and of course you can change that value and the signature is still correct. So you just slap some stuff on the back of the binary and say: “Here’s the entry point”, and then it jumps there, executes your code, and everything is good again. This is all known – that it’s essentially unfixable, the whole process is pretty much unfixable.
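The entry-point problem can be shown with a toy model in Python. The `Binary` layout and both digest functions are hypothetical and do not reflect the actual Mach-O or code-signature format; the sketch only shows why a digest over sections alone cannot notice a changed header field.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Binary:
    entry_point: int   # header field: offset where execution starts
    code: bytes
    data: bytes


def sections_digest(binary: Binary) -> str:
    """Hash the code and data sections only; the header is left out."""
    h = hashlib.sha256()
    h.update(binary.code)
    h.update(binary.data)
    return h.hexdigest()


def full_digest(binary: Binary) -> str:
    """Hash the header field together with the sections."""
    h = hashlib.sha256()
    h.update(binary.entry_point.to_bytes(8, "little"))
    h.update(binary.code)
    h.update(binary.data)
    return h.hexdigest()
```

Changing `entry_point` leaves `sections_digest` unchanged but alters `full_digest`, so only the second scheme would detect the modification.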

Now, the update story is also something that’s fairly interesting when you talk about doing your client platform. Apple’s update story basically means AppStore, and nowadays they can also do over-the-air updates but we really haven’t looked at them yet. AppStore has its own story about it, we’ll come to the AppStore later, but keep in mind that in their review process nobody knows what they are actually reviewing. This also applies to security updates. So if I find a vulnerability in your iPhone app or in your iPad app, and even if I’m a nice guy and I tell the publisher, like “Here, you fucked it up, go ship a fix”, the fix can take up to four weeks, because someone in the AppStore review team was just sitting on it. There is no way to publish, republish an app and, say, flag “This is a security fix, ship it fast”; it’s the same process as if you updated the content, like you changed an image or whatever.

What they also do is over-the-air mobile phone carrier updates. They are pretty interesting, we haven’t looked into the details yet; they’re supposed to be signed somehow, but we’ve seen how ‘well’ signatures work on Apple in general. And they can set things, like they can set your proxy – pretty useful thing to do, especially if you want credentials that the user has.

Now, to the more fun part. What does the AppStore client actually look like? What is it, architecture-wise? Essentially, it’s WebKit – well, fair enough; a lot of JavaScript; a whitelist of domains that can install stuff on your device; a little bit of signed stuff like signed PLIST/XML – entitlements, widgets, it’s covering all that.

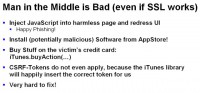

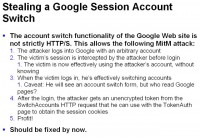

So let’s concentrate on the main thing. If you build a custom JavaScript library around WebKit, you essentially build a browser. The security model of the browser heavily depends on the fact that the user has a browser UI, so you can actually see if the site that you’re surfing at actually says HTTPS and not HTTP. It depends on you seeing that there is a pop-up coming from JavaScript and not from the browser. Well, that’s not the case if you are building an AppStore application and just, you know, bake in standard web security stuff and think this is going to work. But that’s exactly what Apple did.

So if someone sits, let’s say, at Starbucks and doesn’t realize that he’s just paying $4 for 80% hot water, and he’s surfing around with his Apple device, you can just man-in-the-middle his connections. And anytime he hits Apple.com or other Apple pages, you can just inject JavaScript like iTunes.buyAction(…) which, as you would suspect, can be used to buy shit in the AppStore. So there’s a CSRF Token which is there to prevent you from doing that stuff, however it’s so ‘nicely’ coded that whatever you inject, the requests that come out afterwards will actually auto fill in this token for you.

So the programmer of the AppStore JavaScript doesn’t have to remember that there is a token to be passed. That’s pretty hard to fix actually, because that’s the client side and the server side being broken at the same time. So you can do simple things like this. This looks pretty normal on an iPad – so “Please give me your Apple ID”, the difference being that because that’s not a browser, it doesn’t tell you that this was actually produced by a little piece of JavaScript that you just injected. Note there’s going to be a difference on a real browser; Windows would say: “This is a JavaScript message box”. Here, it just looks the same as the legitimate one.

Even the standard web security wasn’t really done right: they have insecure cookies that get leaked over HTTP when you surf, so it’s not strictly HTTPS only. You can simply take over sessions because the tokens are not solid. You have cross-site scripting all over the place. And there’s this whitelist that says: “Those domains can install software on your device, and those domains can actually charge you”. This whitelist, of course, includes HTTP-only sites, and from anywhere in the world, from any web page in the world, you can just redirect the browser to itms-apps://xss.url, and that essentially fires up the AppStore app. So first of all, you can start this AppStore app. Now, the only thing you need is control over something that’s in the whitelist. What do we call that in security terms? Cross-site scripting. So what did we do? We looked for XSS on the Apple.com domain, and we only had to go as far as the front page, because the Search field actually had cross-site scripting.

So, XSS in the Search field – are you kiddin’ me? Yes, that’s the way it is. And then, on a sane regular browser you would now have the issue of injecting all the code that is needed to download, buy and install an application. A regular browser just ignores stuff that’s in a data:URL. In a data:URI you can actually encode the content right in the URI; a sane browser does not use that as a source for an iFrame, for example.

Now, the thing is the AppStore app isn’t a sane browser, so what you can actually do is create a very long data:URL that you put into this cross-site scripting, and encode all the stuff that is needed to buy, download, install and run an application. This is how that looks (see image to the right). You build a regular link, it could be auto-forwarding or whatever; and then you base64 encode everything in here, and that actually buys and installs an application. Now, when we told Apple about this, guess how they fixed it. They fixed the cross-site scripting. So if you want to use that same attack today, you need to find a cross-site scripting anywhere on Apple.com – and you’re good. They only fixed the XSS in the Search field and were done with it. Okay, well, that’s a way to go.
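For readers who have not seen one, a base64 data: URI simply carries its content inline. The following Python sketch encodes and decodes a harmless snippet; the function names are hypothetical and the payload is a placeholder, not the content used in the talk.

```python
import base64


def to_data_uri(content: str, mime_type: str = "text/html") -> str:
    """Pack text into a base64-encoded data: URI."""
    encoded = base64.b64encode(content.encode("utf-8")).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def from_data_uri(uri: str) -> str:
    """Recover the text from a base64-encoded data: URI."""
    header, _, payload = uri.partition(",")
    if not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("not a base64 data: URI")
    return base64.b64decode(payload).decode("utf-8")
```

Because the whole document travels inside the link itself, whatever renders such a URI is running content that no server on the whitelist ever delivered.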

What that means is, obviously, Apple has a lot of power over your devices, and if you’re using those devices – fine, but keep in mind that because there’s someone controlling the content and the availability of applications, they control your view of reality on that client platform. So unless you have another computer (a real computer), that is actually a lot of control over your view of reality. For the attacker, it just means physical access is always gonna be a bitch, like there’s no damn way that Apple in the foreseeable future will secure the devices against physical access. And also, don’t be surprised if you have more applications installed after you visited Starbucks than you had before. It’s just too easy to do.

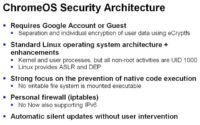

Now, for the old-school people like I am, that want to kill everyone who comes even close with a finger to the screen, Google makes the Chromebook. The Chromebook actually has a keyboard, so you are not touching the surface that you are trying to read from. Essentially, the architecture of the Chromebook is completely different. You have a Google account that you sign on to, or there’s a guest account. The whole Chromebook is designed to be shared, quite in contrast – and this is why the business background is so important – to the iPad. The iPad is designed to be “one user – one iPad”, so they sell more devices. The Chromebook is actually designed to be shared, so you can log on with as many Google accounts as you want. It’s a standard Linux operating system; it has pretty strong prevention of running third-party code (anything that’s not shipped); it has a personal firewall – once we told them, they actually turned on IPv6 firewalling as well; and it also does automatic silent updates, so you can’t actually prevent the thing from updating, it will always be online and it will always check for updates and update itself.

The only thing you are supposed to run is the Chrome browser, I’m pretty sure everyone is familiar with the Chrome browser. It runs in separate processes, renderer processes and a main process. It has several sandboxing mechanisms that were pioneered by the guys. It hides the full file system from you, so if you click on something that is not displayable in the browser, the dialog where you can save stuff will only tell you – here’s your home directory, and here’s your temp directory; and that’s it, you don’t see the full file system. It’s not a security feature, it’s just meant to keep you from trying to save stuff somewhere you can’t. Finally, of course they have Flash in the Chrome browser, they compile it themselves; and of course Adobe Flash completely ignores this, so if you have a Flash app then you see the whole file system, and you can save stuff anywhere.

Anyone remember the MD5 collision CA certificate from 2008 by Alex Sotirov and the guys, where they made an SSL certificate based on the MD5 collision? Most browser vendors went the way of saying: “Because it was signed by Equifax” (and Equifax actually is a valid CA, as valid as CAs go); so they went ahead and took this collision certificate that was obviously evil, and included it explicitly in the browser so they could blacklist it. Google did the same thing, but forgot the blacklisting part. So they explicitly included this certificate, but it’s not blacklisted. So if you manage to convince the Chromebook that today is 2004, you have a valid SSL certificate – if you are Alex Sotirov, because you’ll need the private key on this one.

What they also have in contrast to the Apple products is the ‘developer mode’. So there’s a little switch in the device that you turn on, and then it’s developer mode. You get a big scary screen saying something like: “Warning! You’re about to fuck up the Universe!” and then you actually have root access and you can play with the device.

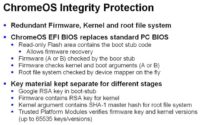

This is the trusted boot process. It’s pretty similar to what Apple does, however Google is a bit smarter in the devices because they have the whole chain of boot stub, firmware loader, firmware, kernel and file system – and they have two of those. That is really smart because that means while they’re updating one, they’re running the other. So if something goes wrong, they’ll always have a backup to fall back to. Both actually work this way: you have read-only memory with the root key, that one verifies the firmware keyblock, that one verifies the firmware preamble, that one verifies the firmware body. This loads the kernel and verifies the kernel and the kernel preamble, which is additional metadata information, and then the kernel gets loaded, and the kernel makes sure that the file system is clean. That’s actually a pretty solid thing.
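The "two of everything" idea can be sketched as a slot selection routine. This is a simplified Python illustration with hypothetical names (`Slot`, `choose_boot_slot`), and it uses a plain SHA-256 comparison where the real boot chain verifies signed keyblocks and preambles.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Slot:
    name: str
    image: bytes
    expected_digest: str  # digest the image should have when intact


def slot_is_intact(slot: Slot) -> bool:
    return hashlib.sha256(slot.image).hexdigest() == slot.expected_digest


def choose_boot_slot(preferred: Slot, fallback: Slot) -> Slot:
    """Boot the preferred slot if it verifies, otherwise the other copy."""
    for slot in (preferred, fallback):
        if slot_is_intact(slot):
            return slot
    raise RuntimeError("no bootable slot, recovery needed")
```

An interrupted update to one slot therefore costs nothing more than a boot from the untouched copy.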

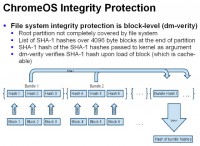

Also, their file system integrity protection is really smart: if any of you build embedded devices, this is how you do it. So what they do is, instead of signing individual binaries, they sign the entire partition. To do that they take hashes of the individual blocks of the partition, and then the hash of the hashes gets signed. So you end up only having one hash as the integrity protection of the entire file system. Every time you read a block from the block device, which is below the file system, you verify that the hash is correct. If it’s not, then you go “Please connect to the Internet, I need to reset myself completely”. But this is really smart because every time you read a block, if you read it before, it’s most likely cached, so you don’t have to verify the whole thing again. This comes automatically from the caching mechanism of your block device driver, so you don’t have to do anything about it. And you only verify crypto on demand, so if you are building a small embedded device and you want to make sure that your firmware is intact, this is how you do it. It’s actually really good.
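As a rough illustration of that scheme, the Python sketch below hashes each block, hashes the list of hashes into one root value, and verifies blocks lazily on first read. The block size, class name and the dictionary standing in for the block cache are assumptions for this sketch; signing of the root hash is left out entirely.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for this sketch


def split_blocks(partition: bytes) -> list[bytes]:
    return [partition[i:i + BLOCK_SIZE] for i in range(0, len(partition), BLOCK_SIZE)]


def hash_blocks(blocks: list[bytes]) -> list[bytes]:
    return [hashlib.sha256(block).digest() for block in blocks]


def root_hash(hashes: list[bytes]) -> bytes:
    """The single 'hash of the hashes' that would be signed."""
    return hashlib.sha256(b"".join(hashes)).digest()


class VerifiedBlockDevice:
    def __init__(self, partition: bytes, hashes: list[bytes], trusted_root: bytes):
        if root_hash(hashes) != trusted_root:
            raise ValueError("hash list does not match the trusted root hash")
        self._blocks = split_blocks(partition)
        self._hashes = hashes
        self._verified: dict[int, bytes] = {}  # stands in for the block cache
        self.checks = 0                        # number of hash verifications done

    def read_block(self, index: int) -> bytes:
        if index in self._verified:
            return self._verified[index]       # cached, no second verification
        self.checks += 1
        block = self._blocks[index]
        if hashlib.sha256(block).digest() != self._hashes[index]:
            raise IOError(f"block {index} failed verification")
        self._verified[index] = block
        return block
```

Only blocks that are actually read get hashed, and each one only once while it stays cached, which is what keeps the cost low on small devices.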

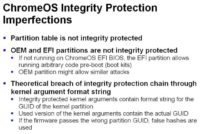

However, even Google makes mistakes. The partition table for example is not integrity protected, so if someone or something changes your partition table, it’s not going to show up. Also, there are OEM and EFI partitions, where the vendor of the Chromebook hardware can actually include code for some update or whatever. These are also not integrity protected, so if you are putting an EFI backdoor on the EFI partition, then you own the Chromebook before you even start it, and nobody is going to notice.

Also, I looked at the details of this whole thing and I read the code, which nowadays not many people seem to do in security research. So this is what is actually signed. You see here the partition information is missing, so essentially they have something like a format string, where it would put the ID of the root partition and the ID of the kernel partition in when booting. But this is what they sign. Now, obviously (and you see the root hash here at the end), when they start they actually have to fill in the blanks and have the actual IDs that they are booting with. So I haven’t found a way to exploit this, but I recently read a talk announcement that is going to come out later this year from some French guys, that claimed to actually have found a way to exploit this. Because basically, what’s not cryptographically protected is where it will take the hashes from. So you can redirect the hashing algorithm to a different partition than the partition you are actually running from. So apparently they’ve found a way to do it. I was too dumb, but that’s how it goes.

Also, keep in mind what I said initially – that the Chromebook is meant to be shared. Now, for this whole trusted boot to work, this read-only bootloader in the beginning has to be really read-only, right? Well, if you have a couple of minutes with the device and a soldering iron, then the read-only can be made less read-only very quickly, because the chip is well documented and essentially you have to connect two little pins on the flash chip, and then you can ‘tell’ the chip in software to stop being read-only – and the chip will say: “Yup, okay! I’m no longer read-only”; and you can replace the initial firmware loader which, let’s say, in the university scenario where everyone is sharing Chromebooks, can be quite successful and fruitful.

Chrome OS, in contrast to the Apple devices, actually needs to encrypt the user data; and, again, Google is crammed full of very smart people, so they developed a pretty decent encryption design for the user content. So even if you have root access to the device, there’s very little way for you to actually get to the user content, unless of course you get his Google ID password – then you’re in, obviously. I won’t bore you with the details. This is how they do the user home encryption. What’s important about this is that it’s bound to your user password and the machine, so the key depends on both things. So stealing one doesn’t really help you.

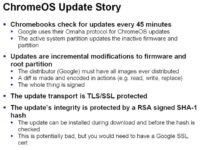
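The property that the key depends on both the password and the machine can be illustrated with a short Python sketch. This is not the Chrome OS design: the function name, the iteration count, and `machine_secret` (a stand-in for whatever device-bound secret the real system uses) are all assumptions.

```python
import hashlib
import hmac


def derive_home_key(password: str, user_salt: bytes, machine_secret: bytes) -> bytes:
    """Derive a 32-byte key that needs both the password and the machine secret."""
    # Stretch the password so guessing it is expensive.
    stretched = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                    user_salt, 100_000)
    # Mix in the device-bound secret; without it the stretched value is useless.
    return hmac.new(machine_secret, stretched, hashlib.sha256).digest()
```

With this construction, a copy of the encrypted disk without the machine secret, or the machine without the password, yields a different key.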

The update story with the Chromebook – it checks every 45 minutes; they developed their own protocol, it’s called ‘Omaha’. Updates are incremental, so what they do is, because of the block device signatures that I explained, the update protocol will actually send you new blocks for the block device. So they diff the old hard drive image, so to speak, with a new hard drive image, and send you the difference. What’s really interesting is they also check the integrity when they download the update. What’s interesting about it is they check it after they applied it. So the short of it is they download individual blocks that they write to your hard drive, and then once they are done they check whether the checksum was good. So if anything goes wrong in between…Well, that’s an interesting way to do it; but it’s also wrapped in SSL, so it’s hard to get in there. There are a couple of theoretical attacks that you can do if you have an SSL certificate.
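The difference between checking after applying and checking before applying can be sketched as follows. Both functions are hypothetical illustrations in Python, with a list of byte strings standing in for the disk and a whole-image SHA-256 standing in for whatever checksum the update protocol really uses.

```python
import hashlib


def disk_digest(blocks: list[bytes]) -> str:
    return hashlib.sha256(b"".join(blocks)).hexdigest()


def apply_then_check(disk: list[bytes], update: dict[int, bytes], expected: str) -> bool:
    """Order described in the talk: write first, verify afterwards."""
    for index, block in update.items():
        disk[index] = block              # the disk is modified immediately
    return disk_digest(disk) == expected


def check_then_apply(disk: list[bytes], update: dict[int, bytes], expected: str) -> bool:
    """Alternative order: stage the blocks, verify, commit only on success."""
    staged = list(disk)
    for index, block in update.items():
        staged[index] = block
    if disk_digest(staged) != expected:
        return False                     # original disk left untouched
    disk[:] = staged
    return True
```

In the first variant a bad update is detected only after the blocks are already on disk; since the update goes to the copy that is not running, the second set of partitions is what limits the damage.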

Google Web Store

And then you have the Web Store. The Web Store is downloadable web apps, it’s all JavaScript – the same procedure as with all other devices. You click on an app, it says something like: “I want to have access to all your user data and your porn, and your address book”, and you say: “Yeah, whatever”, and then you get it. Extensions can be free or they can be paid for. Extension scripting in Chrome, if you’ve never done this, is almost like it’s invented for banking trojans, because a Chrome extension can be what’s called a content script. A content script is triggered for particular domains and is there to rewrite the content you see in a browser. So everything that the banking trojans on Windows do with really hard work, injecting code into Internet Explorer and stuff – now it’s built into Chrome, it’s a lot more stable and better. So if you actually have a malicious extension, it can actually rewrite web pages for you in a very convenient way. It’s almost like designed for that.

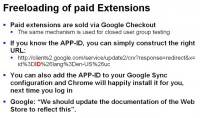

What’s interesting is the paid extensions for example. How you get the paid extensions is you have to go through Google Checkout and then pay for the extension, and then you get a link with the extension ID. We noticed that the link is always the same thing except for the extension ID. So we looked up expensive extensions, put in the extension ID – and you know what? They get installed, we didn’t have to buy them.

In your Google account, you can also say: “I’m a Chrome user, and every time I start Chrome and I log into my Google account, I want you, Google, to install for me all the extensions I have on all the other Chrome instances that I run on different computers”. That’s very nice, you can also just include the extension IDs of the extensions that you want it to run, because when you start Chrome it will automatically install them all for you, no matter if you paid or not. What I liked the most was that when we talked to Google about this, they looked at each other and said: “Well, we should probably update the documentation to reflect this”. Again, it’s a question of your business model: they don’t care whether some extension developer that tries to make a living off his extension actually gets ripped off or not, because that’s not in their interest, they are not taking a 30% cut as Apple does. So they update the documentation instead of updating the code – as easy as that.

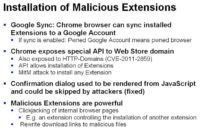

Also, how can you install malicious extensions? Well, one obvious way is that you break into the Google account and just tell Google Sync: “Here’s another extension”, and then the next time the user logs in, the extension gets installed. But for that you would need his Google password, so that’s more theoretical than practical. What was really practical was that the web API they use was also exposed over HTTP. So, just as in the Apple scenario, we could inject JavaScript into regular pages, and here that JavaScript could go download an extension and install it. Google fixed that; they’re pretty quick in fixing stuff. Also, the confirmation dialog, like: “Do you really want to install this extension?”, was rendered in JavaScript, and it was possible to use more JavaScript to automatically click ‘OK’, so you wouldn’t actually see it. And as I mentioned, once you have a malicious extension in your Chrome browser you’re pretty much fucked. That also holds true for the Chrome browser on other operating systems, so if you are using the Chrome browser, make sure that you check your extensions once in a while, because it is really easy to make them self-installing due to the fact that the extension management dialog is also rendered in JavaScript.

Google Apps

But then, you have this comparatively really, really secure platform. Now, the question is: “Okay, I put my data into the Cloud (in Google terms, it’s Google Apps) – is it any safer or not?” One of the rules of the Google Apps game that you have to keep in mind is: if your account is compromised, if someone learns your Google ID password – you’re fucked, that’s it, game over. There’s very little you can actually do, and there’s very little you can do to even notice. The same holds true, and this is for all Cloud applications, for your session cookies: if your session cookie gets away at any point in its lifetime, it’s as if you lost your password; you have to understand that. There only needs to be one single HTTP request in this whole HTTPS site that you have not controlled – and oops, there goes your session cookie, and it goes over a wireless LAN in Starbucks, and someone else is making funny faces.
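The "one single HTTP request" problem can be sketched with the cookie `Secure` attribute, which tells the browser to send a cookie over HTTPS only. The Python below is a deliberately simplified model that uses the standard library's `http.cookies`. The cookie name `session`, the example URLs and the `browser_would_send` helper are assumptions for illustration, and domain and path matching are ignored.

```python
from http.cookies import SimpleCookie
from urllib.parse import urlsplit


def build_session_cookie(value: str, secure: bool) -> SimpleCookie:
    """Build a session cookie, optionally restricted to HTTPS."""
    cookie = SimpleCookie()
    cookie["session"] = value
    cookie["session"]["path"] = "/"
    cookie["session"]["httponly"] = True
    if secure:
        cookie["session"]["secure"] = True
    return cookie


def browser_would_send(cookie: SimpleCookie, url: str) -> bool:
    """Simplified model: a Secure cookie only travels over https."""
    if cookie["session"]["secure"]:
        return urlsplit(url).scheme == "https"
    # Without the attribute, the cookie goes out on plain HTTP as well.
    return True


if __name__ == "__main__":
    leaky = build_session_cookie("abc123", secure=False)
    safe = build_session_cookie("abc123", secure=True)
    stray_request = "http://apps.example/logo.png"
    print(browser_would_send(leaky, stray_request))
    print(browser_would_send(safe, stray_request))
```

In this model, a single plain-HTTP image request is enough to put the unprotected cookie on the wire, which is the Starbucks scenario above.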

Also, you should actually read the terms when you’re using stuff: if Google doesn’t like you anymore for any reason and they simply close your account, the stuff is gone, irrecoverably gone. If you can live with that – fine. However, if someone else can make Google not love you anymore (the US government, for example), then the same holds true. As long as you keep all those things in mind, feel free to use the Cloud. But this is what is often overlooked. And as I said, the protections must not only cover your account, but also all the session information for your account that comes with third-party Cloud applications working on your account. We’re gonna see in a minute what that looks like.

Google Docs

Fun fact in between – we looked at Google Docs, and Google Docs allows you to write macros in something that’s close to JavaScript, and you essentially run them on Google Docs, so they run on Google’s servers. There’s, again, something like an App Store for it, so you can use someone else’s macros. The fun part is that one of the most commonly used macros is a Russian macro for checking credit card number validity – it’s actually not malicious! We looked really hard, it’s actually not malicious. However, the idea of blindly importing JavaScript code from a Russian guy that I have no control over into my Google Docs spreadsheet to check credit card numbers struck me as brave, so to speak. That’s the thing – when you import someone else’s macro, you look at the macro once and say: “Okay, I wanna use that”; if he changes the macro, you don’t get any notification. So if that Russian guy actually turns to cybercrime at some point, he’s gonna have a really good life.
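For context, a card number validity check is typically just a few lines of arithmetic. The sketch below is the standard Luhn checksum in Python; it is an assumption that the macro in question worked this way, since the talk does not say. The function name `luhn_valid` is made up for this example.

```python
def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    # Keep ASCII digits only, so spaces and dashes are tolerated.
    digits = [int(ch) for ch in number if ch in "0123456789"]
    if len(digits) < 2:
        return False
    total = 0
    # Walk from the rightmost digit and double every second one.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9  # same as adding the two digits of the product
        total += digit
    return total % 10 == 0


if __name__ == "__main__":
    # A widely used test number, not a real card.
    print(luhn_valid("4111 1111 1111 1111"))
```

Nothing in this computation needs network access or third-party code, which is what makes importing it from an unknown author a questionable trade.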

Also, what we found interesting is that the macros can actually issue HTTP requests. Only Google can DoS Google; you reach something like 500 GBps transfer rate if Google talks to Google. That’s pretty fast. We stopped playing at that point, but let’s just say source IP address based firewalling really doesn’t work so well if the attacker actually runs code in your Cloud.

And then, of course, there are web security issues. This is an issue that we found in account switching, that is, when you switch from one Google account to another, which you’re not supposed to do because you’re supposed to have everything in one account, right? They had, again, a session that worked not only over HTTPS, but also over HTTP. So you log into a Google account of your own; then, when the victim wants to log in, you intercept the victim, use your session to Google and claim to the victim that he just logged into your account; and when he really logs in, he effectively switches accounts. As the victim, you will see a web page that doesn’t look like the regular sign-in, but like the account switching page, but that’s really hard to notice. There are many tokens that come with a Google session, but one of them is unencrypted, so you can steal it, and then we’re back to the scenario where if you have the session cookie, you own the account. They have fixed that by now, but it shows the general pattern: if in this whole conglomerate of web applications there’s one single HTTP request that doesn’t look weird to send a cookie to – you have a hole in your Cloud.

Well, you would think Google, being full of smart people, will not have standard web security issues. Of course they do. For example, on Google Sites they try to prevent JavaScript, so you can’t put JavaScript into a Google Site; but of course you can put it into an

Also, here’s the issue with third-party Cloud providers. So let’s say eve.com has this really cool addition to your Google account that needs only a little bit of access to your data. Google wants to make sure that you actually gave eve.com permission to access the data in your Google account. Now, the way they do that is unfortunately using GET parameters. GET parameters are (and it’s no news) very, very nice, because they are included in the referrer field.

So you can construct something like this, where you could ask: “The site called google.com wants to access your calendar and your Picasa pictures”. Sounds legitimate, doesn’t it? And you see in the URL (we’re not faking it, it’s all Google) that it’s a search request and the ‘I’m Feeling Lucky’ button. What it does at the end is it accesses our website, and in the referrer there’s this whole thing, including the access token, that allows our Cloud service now to steal all your data from your Google account.

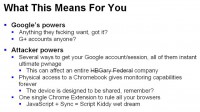
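Why GET parameters are a problem can be sketched in a few lines of Python. The model assumes a browser that sends the full URL of the current page (minus the fragment) as the referrer when it navigates to another site, which is the behavior the attack relies on. The URL, the parameter names `token` and `access_token`, and both helper functions are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit, urlunsplit


def referer_for(current_url: str) -> str:
    """Simplified model: the referrer is the page URL without its fragment."""
    parts = urlsplit(current_url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, parts.query, ""))


def tokens_visible_to_next_site(current_url: str,
                                param_names=("token", "access_token")) -> dict:
    """Return the secret-looking GET parameters the next site gets to read."""
    query = parse_qs(urlsplit(referer_for(current_url)).query)
    return {name: query[name][0] for name in param_names if name in query}


if __name__ == "__main__":
    page = "https://accounts.example/authorize?scope=calendar&token=SECRET123#top"
    print(referer_for(page))
    print(tokens_visible_to_next_site(page))
```

Anything carried in the query string is readable by whichever site the page sends the user to next; a token sent in a POST body or a header would not show up in the referrer under this model.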

So, what that means for you is Google can do whatever they want. They’re putting all your eggs into their bag. If the whole G+ account story of the people being kicked out because their name doesn’t sound like their real name hasn’t taught you a lesson, then nothing will. I’m sorry, that’s just the fact.

There are several ways to get your Google account owned, and that’s not just the password. While the client platform, the Chromebook, is a role model in device security (they really did a pretty decent job on it), that doesn’t protect your Google account automatically. So if only one thing, like one token or one password, gets lost, it can mean entire company ownage, as some people (HBGary Federal) experienced. What I hear, interestingly enough, is that when HBGary was owned, they knew that their emails were being downloaded, and they tried for eight hours to reach someone at Google to close their accounts. And nobody did. So, how is that for a service-level agreement? Physical access to the Chromebook is the same as with other client platforms: if there’s physical access – that’s really bad, so the security of the device plays a big role. And Chrome extensions are evil.

Summary

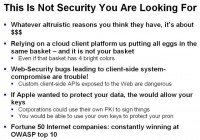

So what I’m trying to say is whatever fanboy you are, whether you are an Apple fanboy or a Google fanboy or whatever, that’s not the reason they built those things, that’s not the reason they offer you the service. The reason they offer you the service is money. It’s not a bad thing per se, but you have to keep in mind what the business model behind this is and understand how much they care about your security and why. If you rely on a Cloud client platform, you are putting your eggs in someone else’s basket; even if that basket has bright primary colors, it doesn’t mean it’s a better basket than your own. So it’s worth considering whether or not you want to trust another company, one that you probably don’t even have legal connections to, with your critical data. What I find interesting is that web security bugs exist even at companies that are pretty sure they know what they are doing. Especially in a Cloud scenario, it’s extremely hard to get everything right and not have even one little web page that leaks your tokens or your cookies. So even Google has issues doing that, and this is also important to keep in mind when you look at other Clouds.

If Apple really wanted to protect your data, you would have full drive encryption by now, and they would allow you to use your own keys from your own PKI, and not their keys. Everything else is just, you know, simulation of encryption. Even the big Internet companies still think that the OWASP Top 10 is something they need to comply with, so they need to have them all instead of not having them. That is quite fascinating.